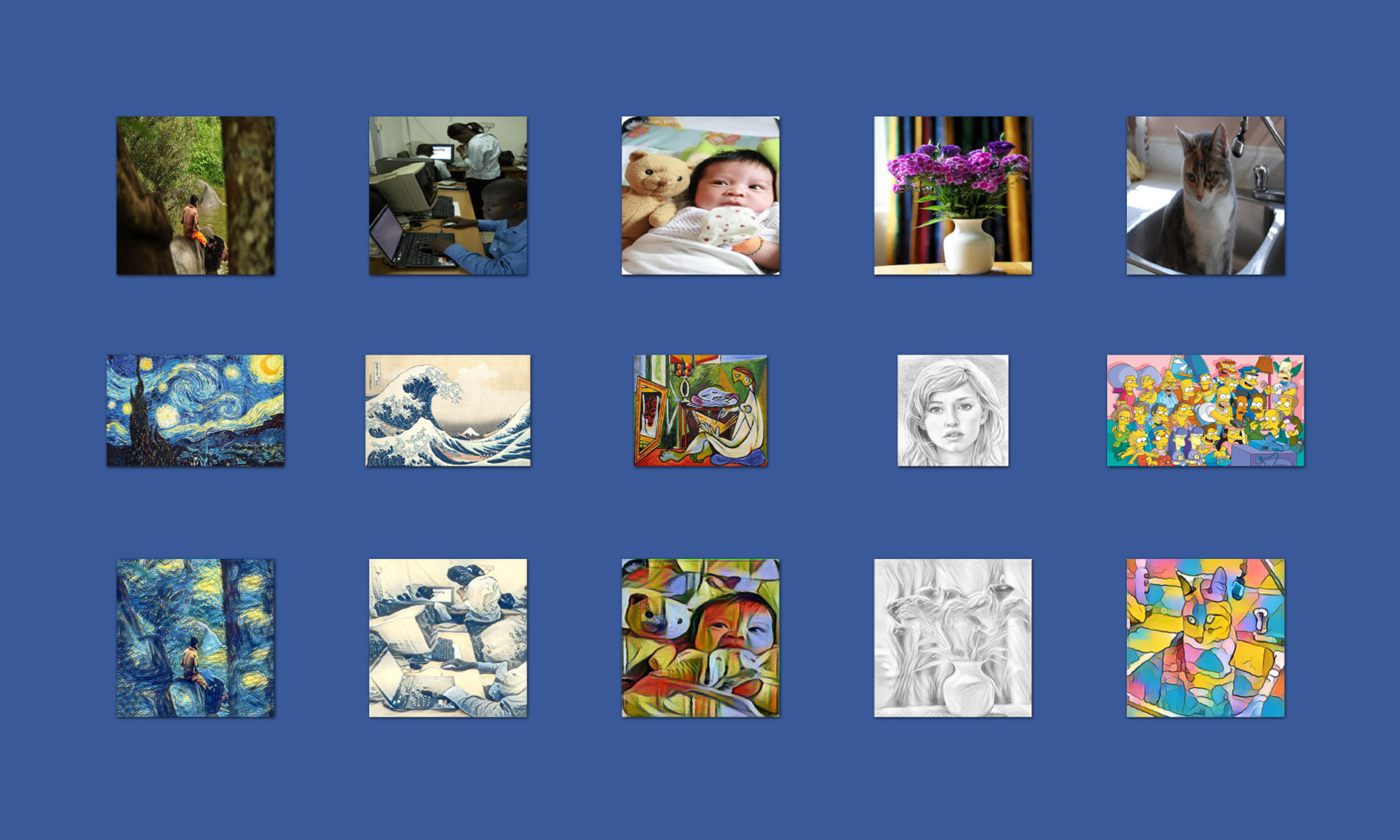

As video becomes an even more popular way for people to communicate, we want to give everyone state-of-the-art creative tools to help you express yourself. We recently began testing a new creative-effect camera in the Facebook app that helps people turn videos into works of art in the moment. That technique is called “style transfer.” It takes the artistic qualities of one image style, such as the way Van Gogh paintings look, and applies them to other images and videos. It's a technically difficult trick to pull off, and until now it has normally required sending the content to data centers for processing on big-compute servers. We've developed a new deep learning platform on mobile that can, for the first time, capture, analyze, and process pixels in real time, putting state-of-the-art technology in the palm of your hand. This full-fledged deep learning system is called Caffe2Go, and the framework is now embedded in our mobile apps. By shrinking the AI model used to process images and videos by 100x, we're able to run various deep neural networks with high efficiency on both iOS and Android. Ultimately, we were able to run AI inference on some mobile phones in less than 1/20th of a second (about 50 ms); by comparison, a human eye blink takes about 1/3rd of a second (300 ms).

The style-transfer tool in the camera is the result of a marriage between two technologies: the Caffe2Go runtime and style-transfer models. Because our AI teams work on both algorithms and large-scale systems, they were well suited to develop new solutions on both fronts, making the style-transfer experience high-quality and fast. It took both technologies to make it possible for you to feel like you have Van Gogh's paintbrush in your hand when you pick up your phone to shoot a video.

We started the work three months ago, setting out to do something nobody else had done: ship AI-based style transfer as a creative tool, but have it run live, in real time, on people's devices. A unique set of people across product, technology, and research groups jumped in on the project. Justin Johnson of the Facebook AI Research group was the author of one of the foundational research papers describing the technique, building on previous research in the field. Our Applied Machine Learning group had been working toward building an AI engine that would run on mobile devices. The Camera team had a clear understanding of the user needs. Along with the contributions of many others, these teams produced a best-in-class solution that runs highly optimized neural networks live on mobile devices. We'll explain how we thought about and developed the applicable technologies, starting with Caffe2Go.

Caffe2Go

Lightweight and fast

Artificial intelligence has made a significant impact on computer science, but it has mostly been limited to big data centers that are miles, or even hundreds of miles, away from the people using AI-powered services. So any AI that processed something in “real time” still suffered the latency of a round trip to a data center to run on a GPU. Since we didn't think it would be practical to ask people to walk around with a supercomputer, we wanted to figure out a way to make AI work on the CPU of the most ubiquitous device out there: the smartphone.

Phones can see, talk, and comprehend in real time without having to connect to remote servers, but they also have limitations. Although their computation power has improved significantly in recent years, and they can carry out billions of arithmetic operations every second, they still face resource constraints in power, memory, and compute capability that require smart software design. As a result, mobile presents both an opportunity and a challenge for machine learning systems.

Our solution to this challenge was to design a particularly lightweight and modular framework. To this end, we built on top of the open-source Caffe2 project, following the Unix philosophy of small components that each do one thing well and compose easily. This ensures that the core framework, which declares and connects the components, stays very light and can have multiple modules attached, including mobile-specific optimizations. We kept a lean algorithm framework that lets engineers describe the abstract computation as a directed acyclic graph (DAG), while imposing no constraints on the inputs and outputs that the nodes in the graph can take and produce. This allows our engineering teams to implement and optimize modules on different platforms while still connecting those modules with ease. When the graph is actually run, it instantiates itself with the hardware features available on the device to achieve maximum speed.
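As a rough illustration of the DAG idea, the Python sketch below builds a tiny graph whose nodes are arbitrary callables and runs them in topological order. The `Graph` and `Node` classes are hypothetical and do not reflect Caffe2's actual API; the point is only that nothing restricts what a node consumes or produces. It assumes Python 3.9+ for `graphlib`.

```python
from graphlib import TopologicalSorter
from typing import Any, Callable, Dict, List


class Node:
    """One operator in the graph: a name, the names it reads from, and a callable."""

    def __init__(self, name: str, inputs: List[str], fn: Callable[..., Any]):
        self.name = name
        self.inputs = inputs
        self.fn = fn


class Graph:
    """A minimal DAG of operators; nodes may pass any Python object to each other."""

    def __init__(self) -> None:
        self.nodes: Dict[str, Node] = {}

    def add(self, name: str, inputs: List[str], fn: Callable[..., Any]) -> None:
        self.nodes[name] = Node(name, inputs, fn)

    def run(self, feeds: Dict[str, Any]) -> Dict[str, Any]:
        # Map each node to its predecessors; fed inputs appear as predecessors only.
        deps = {name: set(node.inputs) for name, node in self.nodes.items()}
        values = dict(feeds)
        for name in TopologicalSorter(deps).static_order():
            if name in values:  # an externally fed value: nothing to compute
                continue
            node = self.nodes[name]
            values[name] = node.fn(*(values[i] for i in node.inputs))
        return values


# Usage: node outputs can be lists, numbers, or strings; the graph does not care.
g = Graph()
g.add("scaled", ["image"], lambda x: [v * 2 for v in x])
g.add("summed", ["scaled"], lambda x: sum(x))
g.add("label", ["summed"], lambda s: f"total={s}")
out = g.run({"image": [1, 2, 3]})
print(out["label"])  # total=12
```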

As speed is at the core of computation-intensive mobile applications, especially those involving images and videos, the lightweight design of the framework allows us to perform platform-specific optimizations for defined operators. A notable example is NNPACK, a library that Caffe2 integrates into our mobile runtime. By using NEON, the SIMD instruction set available on mobile ARM CPUs, we are able to significantly improve mobile computation speed. On iOS devices, we have also begun integrating acceleration features such as Metal. All of this is done through the modular design, with no need to change the general model definition. As a result, the algorithm side and the runtime side can safely rely on each other without worrying about potential incompatibilities.

Developer-friendly

Caffe2 is also our first industrial-strength deep learning platform that ships at full speed on four platforms with the same set of code: server CPU, GPU, iOS, and Android. Because of the modular design, the framework speaks the same language on every platform but is optimized for each one. That optimization is an implementation detail hidden from the developer; for example, the framework chooses between NNPACK for mobile (iOS and Android) and cuDNN for server GPUs. As a result, algorithm developers can focus on the algorithm work rather than on how to run the convolution.
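The sketch below illustrates, under simplifying assumptions, how a framework might pick a per-platform implementation behind a single model definition. The registry, the engine names `mobile_cpu` and `server_gpu`, and the platform table are all hypothetical stand-ins for backends like NNPACK and cuDNN; both implementations here are plain NumPy.

```python
from typing import Callable, Dict, Tuple

import numpy as np

# Hypothetical registry: (operator type, engine) -> implementation.
REGISTRY: Dict[Tuple[str, str], Callable[[np.ndarray], np.ndarray]] = {}


def register(op_type: str, engine: str):
    """Decorator that records an implementation of op_type for one engine."""
    def wrap(fn: Callable[[np.ndarray], np.ndarray]):
        REGISTRY[(op_type, engine)] = fn
        return fn
    return wrap


# Stand-ins only: both "engines" are NumPy, labeled by the backend they represent.
@register("Relu", "mobile_cpu")
def relu_mobile(x: np.ndarray) -> np.ndarray:
    return np.maximum(x, 0)


@register("Relu", "server_gpu")
def relu_server(x: np.ndarray) -> np.ndarray:
    return np.where(x > 0, x, 0)


# The framework, not the model author, maps each platform to an engine.
ENGINE_FOR_PLATFORM = {"ios": "mobile_cpu", "android": "mobile_cpu", "server": "server_gpu"}

# The model definition is just a list of operator types, identical on every platform.
MODEL = ["Relu"]


def run_model(platform: str, x: np.ndarray) -> np.ndarray:
    engine = ENGINE_FOR_PLATFORM[platform]
    for op_type in MODEL:
        x = REGISTRY[(op_type, engine)](x)
    return x


x = np.array([-1.0, 0.5, 2.0])
print(np.array_equal(run_model("ios", x), run_model("server", x)))  # True
```

Keeping the model as data and resolving implementations at run time is what lets the same definition stay unchanged while each platform swaps in its fastest kernel.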

Developers also benefit from a fast deployment design. From a developer's perspective, debugging a mobile runtime can be a challenge, as the mobile toolchain is not as advanced as its desktop and server counterparts. We addressed this by abstracting the neural network math from the hardware: a serialized network in Caffe2Go can be run on both mobile phones and servers with the same numerical output. As a result, we can move the majority of the work (model training, performance inspection, user experience studies) to a server environment and, once things look good, deploy to the mobile environments with a single button.
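One way to check that two implementations of the same operator agree is to run both on identical inputs and compare. In this hypothetical sketch, a naive loop convolution plays the role of a reference implementation and an im2col-plus-matrix-multiply version plays the role of an optimized one; neither is Caffe2Go code. Because the two sum in different orders, the comparison uses a floating-point tolerance.

```python
import numpy as np


def conv2d_direct(x: np.ndarray, w: np.ndarray) -> np.ndarray:
    """Reference 'valid' 2D cross-correlation. x: (C_in, H, W), w: (C_out, C_in, K, K)."""
    c_out, _, k, _ = w.shape
    _, h, wd = x.shape
    out = np.zeros((c_out, h - k + 1, wd - k + 1))
    for o in range(c_out):
        for i in range(h - k + 1):
            for j in range(wd - k + 1):
                out[o, i, j] = np.sum(x[:, i:i + k, j:j + k] * w[o])
    return out


def conv2d_im2col(x: np.ndarray, w: np.ndarray) -> np.ndarray:
    """The same convolution as one matrix multiply over unrolled patches (im2col)."""
    c_out, c_in, k, _ = w.shape
    _, h, wd = x.shape
    oh, ow = h - k + 1, wd - k + 1
    # Each column holds one flattened (C_in, K, K) patch, in row-major output order.
    cols = np.empty((c_in * k * k, oh * ow))
    idx = 0
    for i in range(oh):
        for j in range(ow):
            cols[:, idx] = x[:, i:i + k, j:j + k].ravel()
            idx += 1
    return (w.reshape(c_out, -1) @ cols).reshape(c_out, oh, ow)


rng = np.random.default_rng(0)
x = rng.standard_normal((3, 8, 8))
w = rng.standard_normal((4, 3, 3, 3))
ref = conv2d_direct(x, w)
fast = conv2d_im2col(x, w)
print(np.allclose(ref, fast))  # True
```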

Training the style transfer models

The idea of style transfer is not a new one. It was introduced by researchers in a seminal paper, “A Neural Algorithm of Artistic Style,” published in August 2015. However, the technique was slow and required powerful servers. Over the next few months, the research community advanced these techniques and increased their speed by a couple of orders of magnitude, but they still used massive amounts of compute power on servers.

Caffe2Go made the AI processing fast and put it in the palm of your hand. But the style-transfer models needed to be optimized as well, to keep the experience real time while maintaining high-quality, high-resolution images.

Optimizing for efficient model size

Models for traditional style-transfer work (even the feedforward variants) are big, in terms of the number of parameters, and slow. Our goal in creating the style-transfer application was to run new, smaller, more efficient models that deliver high-quality video at 20 FPS on iPhone 6s or above without dropping frames.

We applied three major approaches for reducing the model size: we optimized the number of convolution layers (the most time-consuming part of processing), the width of each layer, and the spatial resolution during processing. Depth and width are separate levers for adjusting processing time: width sets how many feature channels each layer computes, that is, how many aspects of the image get processed, while depth sets how many processing steps are applied in sequence. For spatial resolution, we can adjust the actual size of what is being processed in the middle layers. By using early pooling (downscaling the image being processed) and late deconvolution (upscaling the image after processing), we can speed up processing because the system handles less information. We also found that with these techniques we could aggressively cut down the width and depth of the network while maintaining reasonable quality.
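A back-of-the-envelope cost model shows why width and spatial resolution are such strong levers. Assuming a stride-1 K x K convolution padded so the H x W size is preserved, the multiply-accumulate (MAC) count is H·W·C_in·C_out·K². Halving every layer's width (both C_in and C_out) or halving each spatial dimension therefore cuts a layer's cost about 4x, while depth adds cost layer by layer. The layer sizes below are hypothetical, and MAC counts ignore memory bandwidth and other real-world overheads.

```python
def conv_macs(h: int, w: int, c_in: int, c_out: int, k: int) -> int:
    """MACs for a stride-1, size-preserving K x K convolution on an H x W feature map."""
    return h * w * c_in * c_out * k * k


# Hypothetical layer sizes, for illustration only.
base = conv_macs(h=256, w=256, c_in=64, c_out=64, k=3)
half_width = conv_macs(h=256, w=256, c_in=32, c_out=32, k=3)  # half the channels
pooled = conv_macs(h=128, w=128, c_in=64, c_out=64, k=3)      # 2x early downsampling

print(base / half_width)  # 4.0
print(base / pooled)      # 4.0

# Depth adds cost linearly: a stack's cost is the sum of its layers' costs.
deep = sum(conv_macs(256, 256, 64, 64, 3) for _ in range(5))
shallow = sum(conv_macs(256, 256, 64, 64, 3) for _ in range(3))

# At 20 FPS, the whole network has this many milliseconds per frame.
frame_budget_ms = 1000 / 20
print(frame_budget_ms)  # 50.0
```

Because the width and resolution savings are quadratic while depth savings are linear, shrinking width and downsampling early tend to buy the most time per unit of quality lost, which is consistent with the aggressive cuts described above.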

Improving the quality

Image quality is subjective and very difficult to measure, especially for something like style transfer. Therefore we built visualization tools, ran A/B tests, and trained different models to make sure we were getting the best-quality image results. Our large GPU cluster, powered by FBLearner Flow, made this possible: we could rapidly sweep over a large range of hyperparameters, such as model architecture, content/style weight, and downsampling, to find a well-trained feedforward style model that hit our performance goals while maintaining and even improving quality.
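To show what the content/style weight hyperparameter trades off, here is a minimal NumPy sketch of a Gram-matrix style loss combined with a content loss, in the spirit of the Gatys et al. paper mentioned earlier. It is simplified to a single feature layer, random arrays stand in for the activations a pretrained network would produce, and the sweep values are illustrative, not the ones used in production. A feedforward style network is typically trained to minimize a weighted loss of this kind.

```python
import numpy as np


def gram(features: np.ndarray) -> np.ndarray:
    """Gram matrix of a (C, H, W) feature map: channel-to-channel correlations."""
    c, h, w = features.shape
    f = features.reshape(c, h * w)
    return f @ f.T / (c * h * w)


def content_loss(gen: np.ndarray, content: np.ndarray) -> float:
    """Mean squared difference between generated and content features."""
    return float(np.mean((gen - content) ** 2))


def style_loss(gen: np.ndarray, style: np.ndarray) -> float:
    """Squared difference between Gram matrices, which ignores spatial layout."""
    return float(np.sum((gram(gen) - gram(style)) ** 2))


def total_loss(gen: np.ndarray, content: np.ndarray, style: np.ndarray,
               content_weight: float, style_weight: float) -> float:
    # The ratio of these two weights is the content/style trade-off a sweep explores.
    return (content_weight * content_loss(gen, content)
            + style_weight * style_loss(gen, style))


rng = np.random.default_rng(0)
content = rng.standard_normal((8, 16, 16))  # stand-in for content-image activations
style = rng.standard_normal((8, 16, 16))    # stand-in for style-image activations

# Hypothetical sweep points: a larger style weight pushes output toward the style.
for cw, sw in [(1.0, 1e3), (1.0, 1e5)]:
    print(cw, sw, total_loss(content, content, style, cw, sw))
```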

There are many other tricks that improve quality. For example, applying instance normalization instead of the commonly used batch normalization helps with many styles, avoiding zero padding in the convolution layers reduces artifacts, and applying different pre- and post-processing filters to style or content images can also help. In our testing, however, we found that these approaches tend to work better for some styles than for others.
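The difference between the two normalization schemes, and one alternative to zero padding, can be sketched in a few lines of NumPy. Batch normalization pools statistics across every image in the batch, while instance normalization normalizes each image's channels on their own. Reflection padding, one common substitute for zero padding (an assumption here, since the specific replacement isn't named above), mirrors border pixels instead of inserting zeros. These functions are illustrative and omit the learned scale and shift parameters.

```python
import numpy as np


def batch_norm(x: np.ndarray, eps: float = 1e-5) -> np.ndarray:
    """Normalize each channel with statistics pooled over batch and space. x: (N, C, H, W)."""
    mean = x.mean(axis=(0, 2, 3), keepdims=True)
    var = x.var(axis=(0, 2, 3), keepdims=True)
    return (x - mean) / np.sqrt(var + eps)


def instance_norm(x: np.ndarray, eps: float = 1e-5) -> np.ndarray:
    """Normalize each channel of each image separately, over spatial dims only."""
    mean = x.mean(axis=(2, 3), keepdims=True)
    var = x.var(axis=(2, 3), keepdims=True)
    return (x - mean) / np.sqrt(var + eps)


def reflect_pad(x: np.ndarray, p: int) -> np.ndarray:
    """Pad H and W by mirroring interior pixels instead of inserting zeros."""
    return np.pad(x, ((0, 0), (0, 0), (p, p), (p, p)), mode="reflect")


rng = np.random.default_rng(0)
# Two images with very different brightness and contrast, stacked into one batch.
x = np.stack([rng.standard_normal((3, 8, 8)), 10 * rng.standard_normal((3, 8, 8)) + 5])

inst = instance_norm(x)
# Under instance norm, every image's channels end up centered on zero ...
print(np.abs(inst.mean(axis=(2, 3))).max() < 1e-6)  # True
# ... but not under batch norm, whose statistics mix both images.
print(np.abs(batch_norm(x).mean(axis=(2, 3))).max() < 1e-6)  # False
```

Because instance normalization does not depend on what else is in the batch, each image or frame is normalized only by its own statistics, which is one intuition for why it helps with many styles.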

With these speed and quality optimizations to the style-transfer models, running on the Caffe2 framework, real-time image processing becomes possible on mobile devices.

What's next

Caffe2Go is core to the machine learning products at Facebook, together with research toolchains such as Torch. Because of its small size, speed, and flexibility, we're rolling Caffe2Go out across Facebook's stack.

We are also committed to sharing our software and designs with the community so we can learn to better utilize the characteristics of multiple hardware platforms and algorithm designs, which is particularly important in cross-platform machine learning systems. We will be looking to open source parts of this AI framework over the coming months.

As we move forward, you can imagine how on-device AI running in real time could help make the world more open and connected for people in areas such as accessibility and education. The smart devices in our hands are going to continue disrupting the way we think about intelligence. With a swift, lightweight machine learning system like Caffe2Go, we are committed to bringing you more awesome AI and AR experiences, like getting access to Van Gogh's brush when you shoot a video.