At today’s Systems @Scale conference, we presented Delos, a fundamentally new architecture for building replicated storage systems. Its modular, layered design provides flexibility and simplicity without sacrificing performance or reliability. Delos enables fast time-to-deployment for new storage systems — we deployed an initial version in production within eight months — as well as safe, rapid evolution. We swapped in a new ordering mechanism to obtain 10x lower latency without any service downtime.

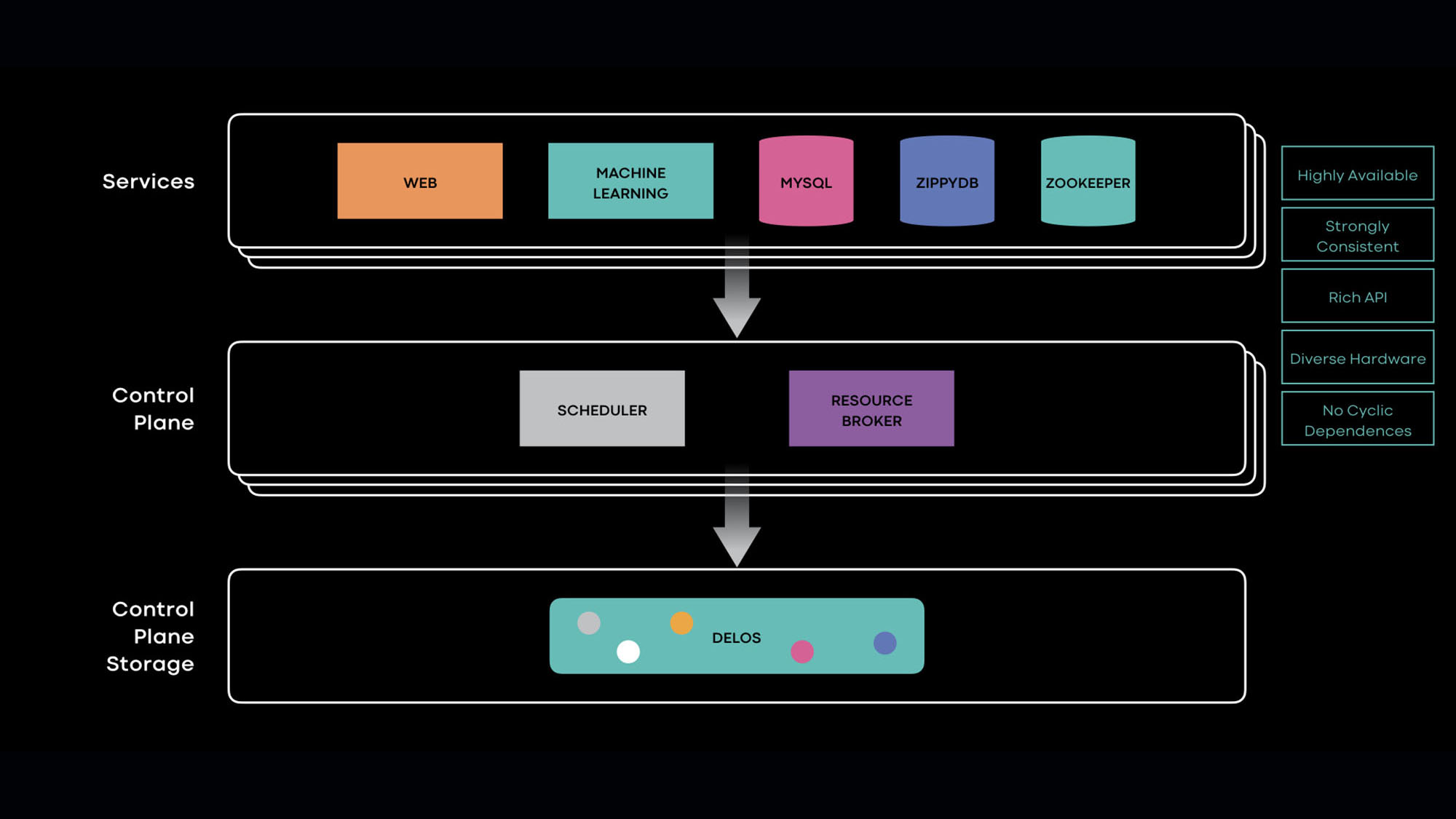

Delos is designed for control plane services, which are important services at the bottom of the Facebook technology stack. Such services require low-dependency storage: Relying on other services can lead to cascading failures and bootstrapping snarls. These services also exhibit diversity, as each one requires storage with a substantially different API (SQL queries, key-value pairs, hierarchical namespaces, queues, etc.) and varies in performance and reliability guarantees.

Existing storage systems are monolithic, providing a single API and fixed performance and reliability guarantees. Building and operating a single low-dependency storage system is challenging; doing so for every possible combination of API and performance and reliability requirements is a far greater challenge. Delos breaks this impasse via a single low-dependency system with a plug-and-play architecture that allows individual components to be easily modified, providing flexibility in two dimensions:

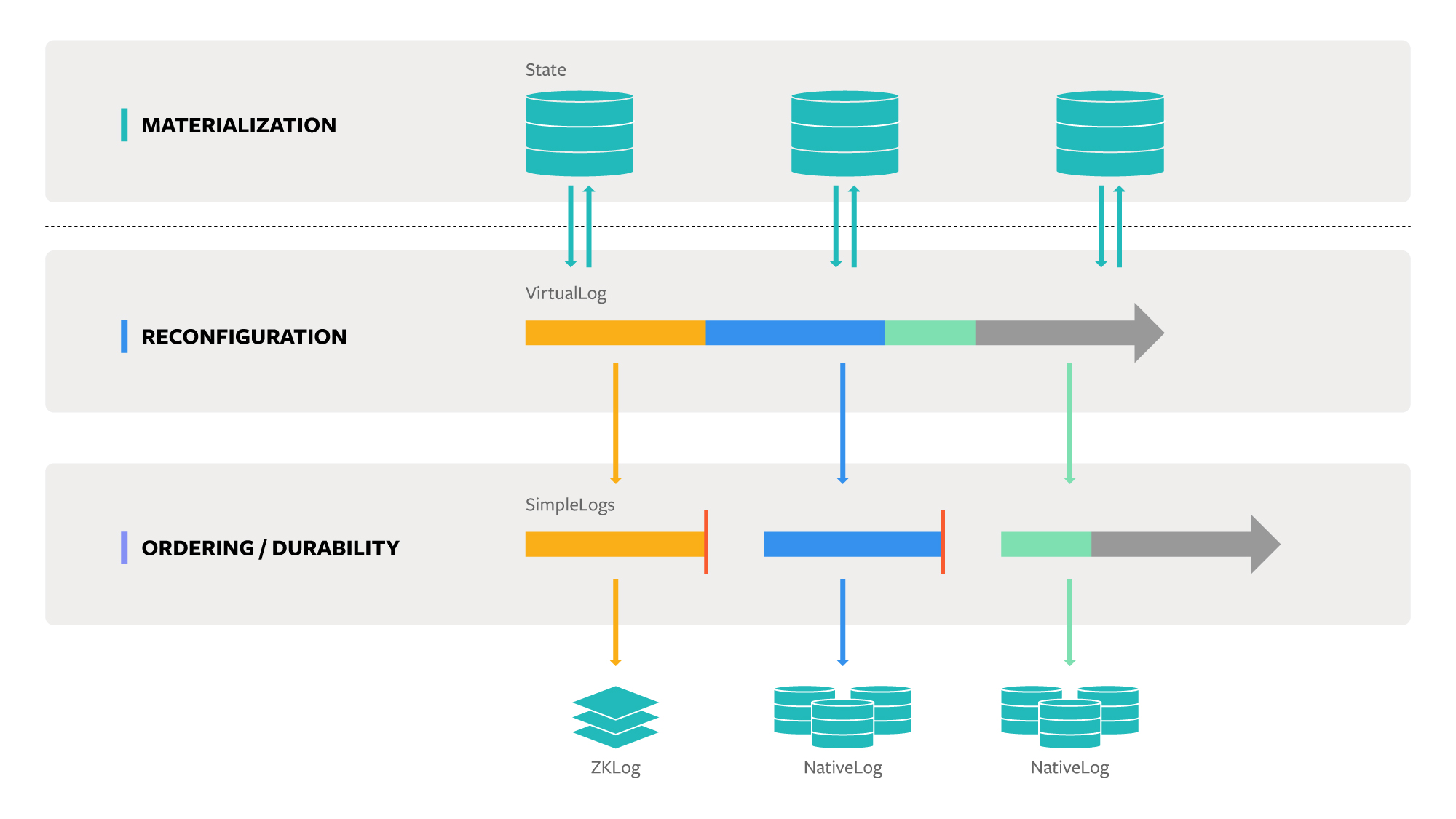

- Pluggable APIs: Delos separates API materialization from the ordering of updates via a shared log abstraction. New APIs can be implemented as thin application layers that order updates via the shared log.

- Pluggable ordering: Delos separates the ordering of updates from reconfiguration by splitting the shared log into a VirtualLog that dynamically stitches together underlying SimpleLogs. New performance and reliability properties can be obtained by hot-swapping new SimpleLog implementations.

Rethinking monolithic system design

When we set out to build a new storage system for the control plane, we knew we needed durability, strong consistency, and high availability. We also needed a rich and flexible API: Our first use cases required a relational model with support for transactions, secondary indices, and range queries, while subsequent use cases required other APIs, such as hierarchical namespaces and queues. In addition, each use case demanded different performance and reliability guarantees. Finally, we needed to develop and deploy the system incrementally over a staggered timeline. The first deployment had to happen within a few months because existing solutions were no longer meeting the needs of control plane services. Once that immediate need was met, we also needed to support the high transaction rates and low latency required by our most demanding control plane workloads and to ensure zero dependencies on other services so that Delos could be brought up or restarted independently.

To achieve these goals, we considered developing multiple point solutions, each of which satisfied some specific combination of API, performance profile, fault tolerance, and deployment timeline. After examining the difficulty of tackling many systems at once, we instead considered the following question: Why are new replicated systems so challenging to develop and evolve? Today, such systems are monolithic; their designs mix the functionality for:

- Ordering commands consistently and durably.

- Materializing state by playing back commands.

- Reconfiguring the composition and role of individual servers in the distributed system.

As a result, existing systems are inflexible. Handling a new use case often requires systems to be rewritten from scratch. Our approach was to isolate each of these functions in pluggable components that could be swapped interchangeably to construct new distributed storage solutions and modify the behavior of existing solutions.

A layered design for replication

Delos is designed around the novel abstraction of a VirtualLog, a type of distributed shared log. Individual servers maintain consistency for local copies of state via the VirtualLog. To modify state, each server appends a new update to the VirtualLog. To access state with strong consistency, the server first synchronizes its local copy with the VirtualLog. This design separates materialization from ordering. The replicated state can take any form, ranging from a key-value store to a relational database. The VirtualLog abstraction provides the benefits of a shared log design, hiding the complexity of distributed coordination and allowing materialization logic to be oblivious to distributed asynchrony and failures.
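To make the append-then-synchronize pattern concrete, here is a minimal single-process sketch in Python. It is not Delos code: `InMemorySharedLog`, `KVReplica`, and every method name are hypothetical stand-ins, and the log is an in-memory list rather than a durable, replicated service. It only illustrates how a key-value materialization can stay consistent by appending updates to a shared log and replaying the log before each read.

```python
from typing import Dict, List, Optional, Tuple

# A log entry in this sketch: (operation, key, value-or-None).
Entry = Tuple[str, str, Optional[str]]


class InMemorySharedLog:
    """Hypothetical stand-in for a shared log: a totally ordered list of entries."""

    def __init__(self) -> None:
        self._entries: List[Entry] = []

    def append(self, entry: Entry) -> int:
        """Add an entry at the tail and return the position it was assigned."""
        self._entries.append(entry)
        return len(self._entries) - 1

    def check_tail(self) -> int:
        """Return the first unwritten position (the current length of the log)."""
        return len(self._entries)

    def read_range(self, start: int, end: int) -> List[Entry]:
        """Return entries in positions [start, end)."""
        return self._entries[start:end]


class KVReplica:
    """One server's local key-value materialization of the shared log."""

    def __init__(self, log: InMemorySharedLog) -> None:
        self._log = log
        self._state: Dict[str, str] = {}
        self._applied = 0  # next log position this replica has not yet applied

    def put(self, key: str, value: str) -> None:
        # Updates are only appended; local state changes during sync().
        self._log.append(("put", key, value))

    def delete(self, key: str) -> None:
        self._log.append(("delete", key, None))

    def sync(self) -> None:
        """Replay every entry between the last applied position and the tail."""
        tail = self._log.check_tail()
        for op, key, value in self._log.read_range(self._applied, tail):
            if op == "put":
                self._state[key] = value
            else:
                self._state.pop(key, None)
        self._applied = tail

    def get(self, key: str) -> Optional[str]:
        # Strongly consistent read: catch up with the log first.
        self.sync()
        return self._state.get(key)


log = InMemorySharedLog()
a, b = KVReplica(log), KVReplica(log)
a.put("machine-1", "allocated")
b.put("machine-2", "free")
a.delete("machine-2")
# Both replicas replay the same ordered log, so they agree on the result.
assert a.get("machine-1") == b.get("machine-1") == "allocated"
assert b.get("machine-2") is None
```

Because the replicas share nothing except the log, swapping the dictionary for a different data structure changes the API without touching the ordering logic.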

The VirtualLog chains together multiple underlying shared logs called SimpleLogs. The VirtualLog switches dynamically between SimpleLogs to provide a reconfiguration capability that is generic and powerful, supporting changes to leadership, membership, protocol parameters, or even the entire protocol and codebase responsible for ordering and storing commands.

The separation of materialization, ordering, and reconfiguration into distinct layers provides two important benefits:

- Simpler replicated systems: The API-specific materialization logic above the VirtualLog is simple and compact, avoiding complex distributed protocols and relying on simple appends and reads on the VirtualLog. The VirtualLog itself provides a control plane for replication that is simple and fault tolerant, while supporting a data plane that is simple and fast (the SimpleLog). The SimpleLog can operate within a fixed membership with preassigned roles, and it does not have to support any form of reconfiguration. It also does not have to provide high availability for appends; for instance, it does not require fault-tolerant consensus. Instead, it only has to provide a highly available seal operation, which is theoretically weaker than consensus and practically simpler to implement. When a SimpleLog fails for appends, the VirtualLog seals it and switches to a different SimpleLog, providing leader election and reconfiguration as a separate, reusable layer that can work with any underlying SimpleLog.

- Flexible replicated systems: New materializations above the VirtualLog can provide different abstractions for storage such as a relational model, a key-value store, or a file system API. The VirtualLog can dynamically switch between different SimpleLogs to obtain different performance and fault-tolerance properties. The SimpleLog can be disaggregated, running on an entirely different set of servers from the machines accessing the VirtualLog, or converged, running on the same set of servers. New mechanisms for ordering or durability can be incorporated easily as new SimpleLog implementations. The existence of discrete layers allows designers to make principled decisions around the placement of functionality: For example, leader election can be provided by the SimpleLog if it is more efficient to do so.
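The seal-and-switch behavior described above can be sketched in a few lines of Python. This is an illustrative, single-process model under stated assumptions, not the Delos implementation: the class and method names (`SimpleLog`, `VirtualLog`, `reconfigure`, `SealedError`) are hypothetical, the chain of logs lives in local memory rather than in fault-tolerant metadata storage, and appends that race with a seal are not modeled.

```python
from typing import Any, List, Tuple


class SealedError(Exception):
    """Raised when an append reaches a SimpleLog that has been sealed."""


class SimpleLog:
    """Hypothetical fixed-membership log: append, read, seal; no reconfiguration."""

    def __init__(self, name: str) -> None:
        self.name = name
        self._entries: List[Any] = []
        self._sealed = False

    def append(self, entry: Any) -> int:
        if self._sealed:
            raise SealedError(self.name)
        self._entries.append(entry)
        return len(self._entries) - 1  # position local to this SimpleLog

    def seal(self) -> int:
        """Reject all future appends and return the final tail position."""
        self._sealed = True
        return len(self._entries)

    def read(self, pos: int) -> Any:
        return self._entries[pos]


class VirtualLog:
    """Maps one contiguous address space onto a chain of SimpleLogs."""

    def __init__(self, first: SimpleLog) -> None:
        # Each chain link is (virtual start position, SimpleLog).
        self._chain: List[Tuple[int, SimpleLog]] = [(0, first)]

    def append(self, entry: Any) -> int:
        start, active = self._chain[-1]
        return start + active.append(entry)

    def reconfigure(self, new_log: SimpleLog) -> None:
        """Seal the active SimpleLog and direct new appends to new_log."""
        start, active = self._chain[-1]
        tail = active.seal()
        self._chain.append((start + tail, new_log))

    def read(self, pos: int) -> Any:
        if pos < 0:
            raise IndexError(pos)
        # Walk the chain from newest to oldest to find the owning SimpleLog.
        for start, log in reversed(self._chain):
            if pos >= start:
                return log.read(pos - start)
        raise IndexError(pos)


old_log, new_log = SimpleLog("old"), SimpleLog("new")
vlog = VirtualLog(old_log)
vlog.append("a")
vlog.append("b")
vlog.reconfigure(new_log)   # seals old_log at tail 2
vlog.append("c")            # stored in new_log, virtual position 2
assert [vlog.read(i) for i in range(3)] == ["a", "b", "c"]
```

Readers and writers above the `VirtualLog` see one uninterrupted sequence of positions, which is why the ordering layer can be replaced without the materialization layer noticing.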

Moving fast with stable (storage) infra

We are leveraging the simplicity and flexibility of Delos to perform difficult tasks that otherwise might not be possible. For instance, we hit production within eight months for a Delos-based table store (DelosTable). Our first use case was Tupperware Resource Broker (ResourceBroker), which maintains a ledger of all machines in our data centers and their allocation status. DelosTable offers a rich API, with support for transactions, secondary indices, and range queries. It provides strong guarantees on consistency, durability, and availability. Delos supports a pluggable API, so we were able to provide exactly the API that ResourceBroker needed. Since Delos also supports pluggable ordering, we implemented a SimpleLog as a pass-through layer over our existing ZooKeeper service (in effect, Delos uses ZooKeeper as its ordering mechanism in this mode). In both cases, modularity enabled us to make short-term decisions without sacrificing long-term potential; we knew we could change both the API and the ordering mechanism easily as application requirements shifted.

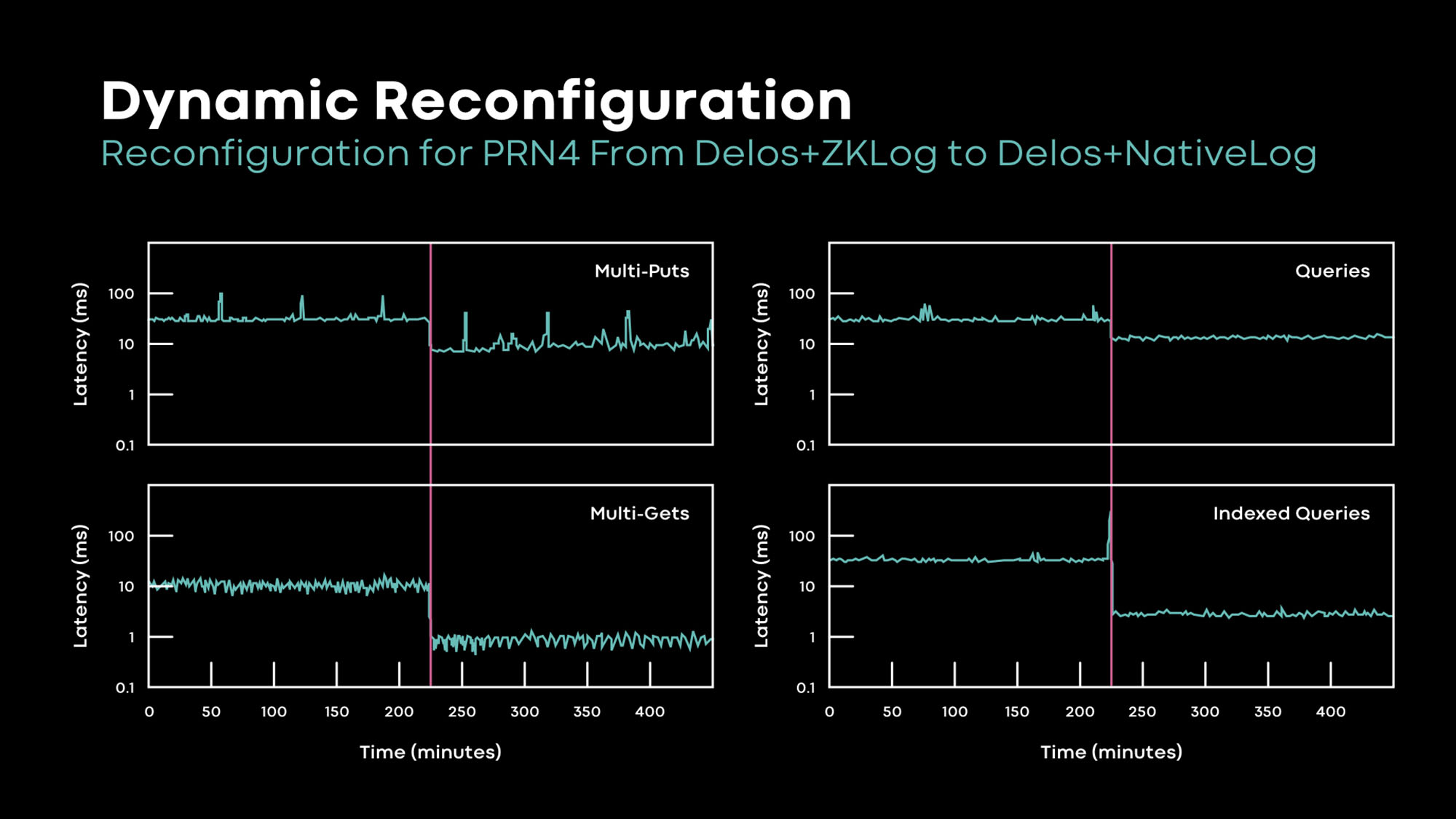

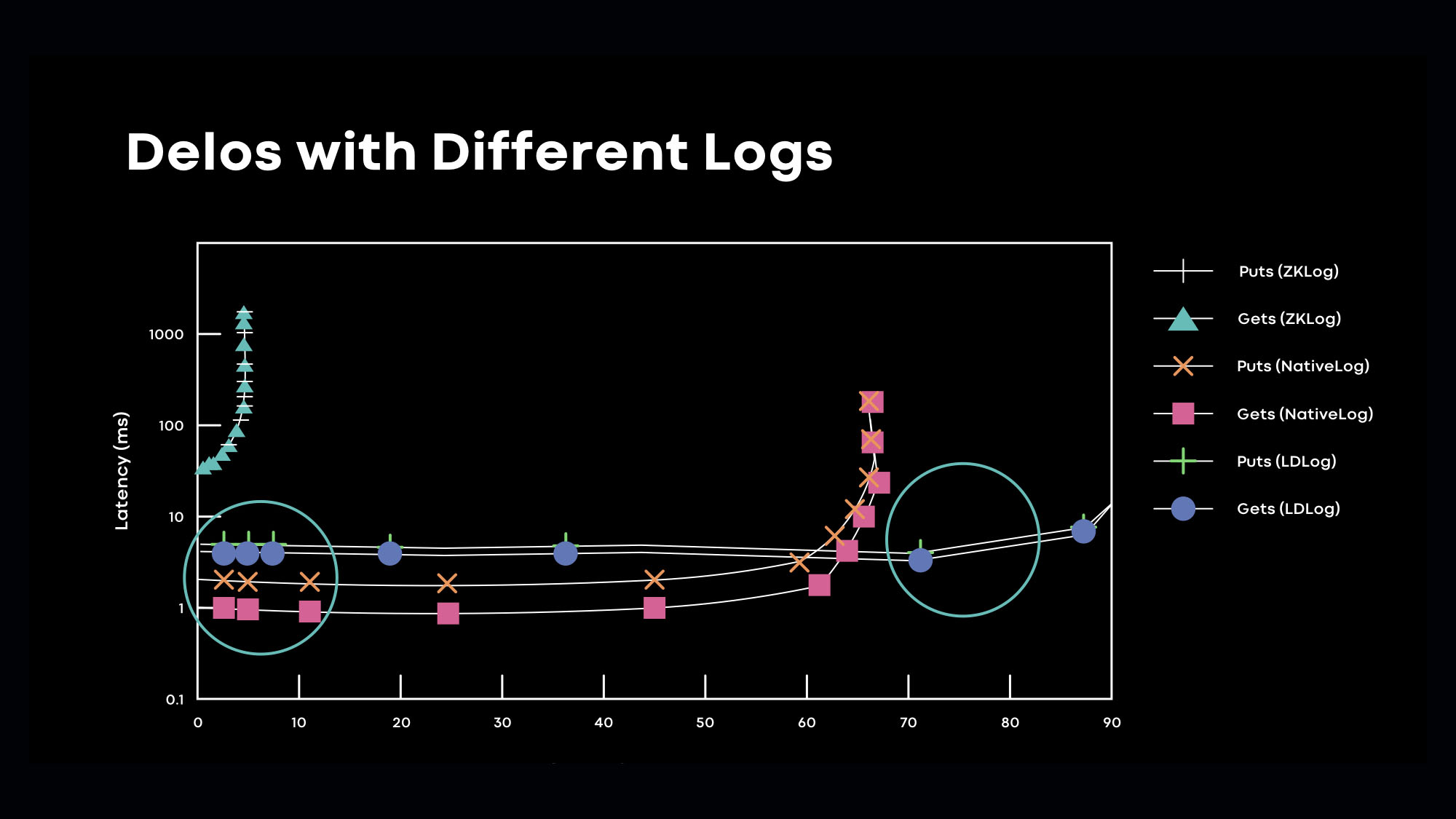

After just four additional months of development, we swapped in a new native SimpleLog without service downtime, lowering Delos latency by 10x for the ResourceBroker workload. The native SimpleLog is converged (i.e., the shared log and the materialized state reside on the same servers), eliminating our critical-path dependency on ZooKeeper. The accompanying graph shows Delos switching from the ZooKeeper-based log to the native log on the fly in a production deployment. The switch improves performance with almost no service disruption, and it does so for all types of queries (note that the y-axis is logarithmic).

New storage APIs can be realized via new materialization layers above the VirtualLog. We implemented an experimental materialization layer that provides a hierarchical ZooKeeper-like namespace but with more capacity. We also have an experimental distributed queue materialization layer. New SimpleLogs can provide different performance and reliability guarantees. We implemented an experimental disaggregated SimpleLog based on our open source LogDevice system. The accompanying graph shows this flexibility in action: We dynamically switch between the disaggregated LogDevice ordering layer and the converged native log ordering layer based upon latency SLAs. We choose the converged implementation (which uses fewer resources and has no critical-path dependency) when a latency SLA can be satisfied, and we switch to the disaggregated implementation when the latency SLA is violated.
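One way to picture the SLA-driven choice is as a small policy function over recent latency measurements. The sketch below is purely illustrative and rests on several assumptions that do not come from Delos itself: the `LatencySlaPolicy` class, the sliding window, the 99th-percentile statistic, and the idea that latency samples for the converged log remain available (for example, from probes) are all hypothetical.

```python
import math
from collections import deque
from typing import Deque, Sequence


def percentile(samples: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile of a non-empty sequence."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


class LatencySlaPolicy:
    """Hypothetical policy: prefer the converged log while it meets the SLA."""

    def __init__(self, sla_ms: float, window: int = 100, pct: float = 99) -> None:
        self.sla_ms = sla_ms
        self.pct = pct
        # Only the most recent `window` samples are kept.
        self._samples: Deque[float] = deque(maxlen=window)

    def record(self, converged_latency_ms: float) -> None:
        """Record one latency observation for the converged log (assumed available)."""
        self._samples.append(converged_latency_ms)

    def choose(self) -> str:
        """Return which ordering layer this sketch would select right now."""
        if not self._samples:
            return "converged"  # no evidence of a violation yet
        if percentile(self._samples, self.pct) <= self.sla_ms:
            return "converged"
        return "disaggregated"


policy = LatencySlaPolicy(sla_ms=10, window=5)
for latency in (2, 3, 4):
    policy.record(latency)
assert policy.choose() == "converged"
policy.record(50)  # a slow request pushes the tail latency past the SLA
assert policy.choose() == "disaggregated"
```

In a real deployment, the result of such a decision would trigger a reconfiguration of the VirtualLog; damping to avoid flapping between the two layers is omitted here.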

The flexibility of the Delos architecture allows us to quickly experiment with and incorporate new designs. New ordering layers can be substituted on the fly, changing the servers, protocol, codebase, and even the deployment model used for ordering updates. The resulting simplicity and flexibility enable fast, incremental development and deployment of new systems while allowing us to optimize for performance and reliability of deployed systems.