Open infrastructure technologies and networking hardware will play an important role as we build new technologies for the metaverse, where billions of people will someday come together in virtual spaces. As we head toward the next major computing platform with a continued spirit of embracing openness and disaggregation, we’re announcing two new milestones for our data centers: We’re sharing our next-generation network hardware portfolio in our data centers, developed in close partnership with multiple vendors. And in conjunction with this, we’ve migrated our data center network hardware to a standard and open API — the Open Compute Project (OCP) Switch Abstraction Interface (SAI).

We’ve come a long way in the decade since we first decided to design and build our own data centers. Even then, we knew they’d be based on concepts of openness and disaggregation, with technologies that are modular to make upgrading easy and efficient. Since founding OCP in 2011, we’ve shared our data center and component designs, and open-sourced our network orchestration software, to spark new ideas both in our own data centers and across the industry.

Today, those ideas have made Meta’s data centers among the most sustainable and efficient in the world. Now, through OCP, we’re bringing new open advanced network technologies to our data centers, and the wider industry, for emerging frontiers of computing — from advanced AI applications to the metaverse.

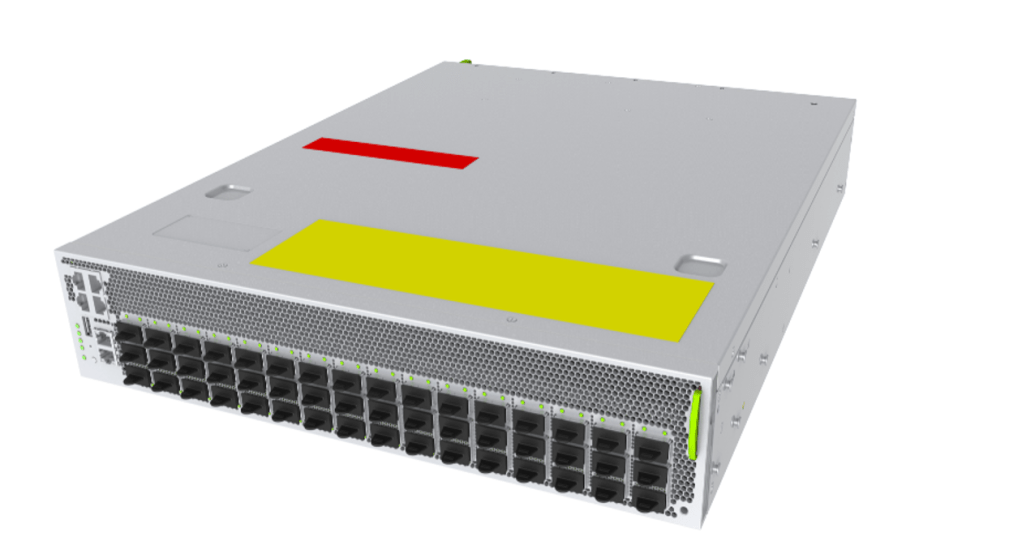

Wedge 400/400C: New TORs for more powerful open networks

We’ve partnered with Broadcom, our long-standing ASIC partner, and Cisco Systems, our newest ASIC partner, to use their ASICs in our two next-generation top-of-rack (TOR) switches — the Wedge 400 and 400C, the latest versions of our Wedge TOR. The Wedge 400 utilizes Broadcom’s Tomahawk 3 ASIC, while the 400C uses Cisco’s Silicon One — our first contribution using Cisco’s new chip. Both TORs offer higher front panel port density and greater performance for AI and machine learning applications, while also enabling future expansions.

The Wedge 400 and 400C have already been deployed in our data centers and boast several improvements over the Wedge 100S, including 4x the switching capacity (upgraded from 3.2 Tbps to 12.8 Tbps), 8x the burst absorption performance, and a field-replaceable CPU subsystem. Both the Wedge 400 and 400C are manufactured by Celestica and are open platforms that developers of any size, from startups to large ISPs, can use for their own projects.
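As a quick sanity check on the capacity figures above, the short Python sketch below recomputes the 4x ratio from the quoted 3.2 Tbps and 12.8 Tbps numbers. The helper names are hypothetical, and the "equivalent 100G ports" figure is only an arithmetic illustration of what the capacity amounts to, not a description of the actual front panel layout.

```python
# Switching capacities quoted in the text, expressed in Gbps to keep integer math.
WEDGE_100S_GBPS = 3_200   # 3.2 Tbps
WEDGE_400_GBPS = 12_800   # 12.8 Tbps


def capacity_ratio(new_gbps: int, old_gbps: int) -> float:
    """Return how many times larger the new capacity is than the old one."""
    if old_gbps <= 0:
        raise ValueError("old capacity must be positive")
    return new_gbps / old_gbps


def equivalent_ports(capacity_gbps: int, port_gbps: int) -> int:
    """Number of ports of a given speed that would add up to the capacity.

    Illustrative only: this is not the real port configuration of any switch.
    """
    return capacity_gbps // port_gbps


ratio = capacity_ratio(WEDGE_400_GBPS, WEDGE_100S_GBPS)
print(f"Capacity ratio: {ratio:.0f}x")
print(f"100G-equivalent ports, old: {equivalent_ports(WEDGE_100S_GBPS, 100)}")
print(f"100G-equivalent ports, new: {equivalent_ports(WEDGE_400_GBPS, 100)}")
```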

FBOSS is now powered by SAI

In the past, FBOSS, Meta’s own network operating system for controlling network switches, has utilized the specific API provided by the ASIC vendor. Now, with FBOSS being adapted to OCP SAI and deployed at scale in the Meta network, we can work with more silicon vendors. Broadcom has partnered closely on our migration of FBOSS from OpenNSA to SAI. In addition, we’ve worked with Cisco Systems to support FBOSS with SAI on their ASIC.

Adapting and migrating FBOSS to SAI means we can onboard multiple ASICs from multiple vendors more quickly, and more easily onboard new ones in the future. SAI’s API lets engineers configure new networking hardware without needing to delve into the specifics of the underlying chipset’s SDK. Furthermore, SAI has been extended to even the PHY layer, with Credo Semi supporting FBOSS with their own SAI implementation.
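To make the idea of a vendor-neutral switch API concrete, here is a minimal Python sketch of the pattern. It is not the SAI API (SAI is defined as a set of C headers) and it is not FBOSS code: the interface `SwitchApi`, both adapter classes, and `bring_up_ports` are hypothetical names invented for illustration. The point is only that the operating system logic is written once against a common interface, while each vendor supplies its own implementation behind it.

```python
from __future__ import annotations

from abc import ABC, abstractmethod


class SwitchApi(ABC):
    """Hypothetical vendor-neutral switch interface (not the real SAI headers)."""

    @abstractmethod
    def create_port(self, lanes: list[int], speed_gbps: int) -> int:
        """Create a port on the given lanes and return an opaque port ID."""

    @abstractmethod
    def set_admin_state(self, port_id: int, up: bool) -> None:
        """Enable or disable a previously created port."""


class VendorAAdapter(SwitchApi):
    """Made-up adapter: pretends its SDK numbers ports from 0."""

    def __init__(self) -> None:
        self.ports: dict[int, dict] = {}

    def create_port(self, lanes: list[int], speed_gbps: int) -> int:
        port_id = len(self.ports)
        self.ports[port_id] = {"lanes": lanes, "speed": speed_gbps, "up": False}
        return port_id

    def set_admin_state(self, port_id: int, up: bool) -> None:
        self.ports[port_id]["up"] = up


class VendorBAdapter(SwitchApi):
    """Made-up adapter: pretends its SDK hands out handles starting at 0x1000."""

    def __init__(self) -> None:
        self.handles: dict[int, list] = {}

    def create_port(self, lanes: list[int], speed_gbps: int) -> int:
        handle = 0x1000 + len(self.handles)
        self.handles[handle] = [tuple(lanes), speed_gbps, "down"]
        return handle

    def set_admin_state(self, port_id: int, up: bool) -> None:
        self.handles[port_id][2] = "up" if up else "down"


def bring_up_ports(switch: SwitchApi, port_count: int, speed_gbps: int) -> list[int]:
    """NOS-side logic written once against the interface, reused for any vendor."""
    port_ids = []
    for index in range(port_count):
        # Assumption for the sketch: each port uses four consecutive lanes.
        lanes = [index * 4 + lane for lane in range(4)]
        port_id = switch.create_port(lanes, speed_gbps)
        switch.set_admin_state(port_id, True)
        port_ids.append(port_id)
    return port_ids


for adapter in (VendorAAdapter(), VendorBAdapter()):
    print(type(adapter).__name__, bring_up_ports(adapter, port_count=2, speed_gbps=200))
```

Adding a third vendor in this sketch means writing one more adapter class; `bring_up_ports` does not change, which mirrors the onboarding benefit described above.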

With this hardware being shared through OCP, supporting SAI also means closer collaboration with and feedback from the wider industry. Developers and engineers from all over the world can work with this open hardware and contribute their own software that they, in turn, can use themselves and share with the wider industry. It all goes toward our goal of creating a future where networking is both open and disaggregated.

Next-generation 200G and 400G fabrics

Meta’s data center fabrics have evolved from 100 Gbps to the next-generation 200 Gbps/400 Gbps. Meta has already deployed 200G-FR4 optics at scale and contributed to specifications for 400G-FR4 optics that will be deployed in the future.

Meta has developed two next-generation 200G fabric switches, the Minipack2 (the latest version of Minipack, Meta’s own modular network switch) and the Arista 7388X5, in partnership with Arista Networks. Both are backward compatible with previous 100G switches and will support upgrades to 400G.

The Minipack2 is based on the Broadcom Tomahawk4 25.6T switch ASIC and Broadcom retimer. The Arista 7388X5 is also based on the Broadcom Tomahawk4 25.6T switch ASIC, with versions of the 7388X5 also utilizing a Credo chipset. They’re high-performance switches that transmit up to 25.6 Tbps and 10.6 Bpps with modular line cards. They support 128x 200G-FR4 QSFP56 optics modules and can maintain a consistent SerDes speed at the switch ASIC, the optics host interface, and on the optics line/wavelength. They simplify connectivity without needing a gearbox to convert data streams. They also have significantly reduced power per bit compared with their previous models (the OCP-accepted Meta Minipack and OCP-Inspired Arista 7368X4, respectively).
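The numbers in this paragraph fit together arithmetically, and the gearbox point can be expressed as a simple check. The Python sketch below multiplies out 128 modules at 200G each and tests whether the lane rate is the same at the ASIC, the optics host interface, and the optical wavelength. The 50 Gbps per-lane figure is an assumption (a 200G module split across four lanes), not a value stated in the text, and the function names are hypothetical.

```python
def aggregate_tbps(module_count: int, module_gbps: int) -> float:
    """Total capacity in Tbps for a number of identical optics modules."""
    return module_count * module_gbps / 1000


def needs_gearbox(asic_lane_gbps: int, host_lane_gbps: int, line_lane_gbps: int) -> bool:
    """A rate conversion stage is needed only if the three lane rates differ."""
    return len({asic_lane_gbps, host_lane_gbps, line_lane_gbps}) > 1


# Figures from the text: 128 x 200G-FR4 modules on a 25.6 Tbps switch.
total = aggregate_tbps(module_count=128, module_gbps=200)
print(f"Aggregate capacity: {total} Tbps")

# Assumption: 200G carried as 4 lanes of 50 Gbps at every stage.
LANE_GBPS = 200 // 4
print("Gearbox needed (matched lanes):", needs_gearbox(LANE_GBPS, LANE_GBPS, LANE_GBPS))

# Hypothetical contrast: 25 Gbps ASIC lanes feeding 50 Gbps optics lanes.
print("Gearbox needed (mismatched lanes):", needs_gearbox(25, 50, 50))
```

With matched lane rates the check returns `False`, which is the "no gearbox" case the paragraph describes; the mismatched case is included only as a contrast.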

In addition to sharing key features of the Minipack2, the Arista 7388X5 offers hyperscale cloud scalability and flexible operating systems (it can support Arista EOS, FBOSS, and SONiC).

Looking toward the metaverse, and more

The metaverse will rely on many technologies, including advanced AI at scale. To deliver the diverse new workloads that will be created as a result, we continue down the path of disaggregated global networks and data centers that will underpin all of this. The technologies that Meta and the wider industry will create will, of course, need to be fast and flexible, but more than that, they will need to operate efficiently and sustainably, from the data center all the way to edge devices. The only way to achieve this will be through collaboration in communities like OCP and through other partnerships.

Open hardware drives the innovation necessary to reach these goals. And our collaborations with both long-standing and new vendors to create open designs for racks, servers, storage boxes, motherboards, and more will help push Meta and the wider industry onto the next major computing platform. We’re only about one percent along on the journey, but the road to the metaverse will be paved with open advanced networking hardware.

Acknowledgements

The authors would like to acknowledge the work across many teams within Meta, including the FBOSS, Network Hardware Engineering, DNE, and SOE teams. We would also like to thank our partners and their engineering teams for their close collaboration on these contributions.