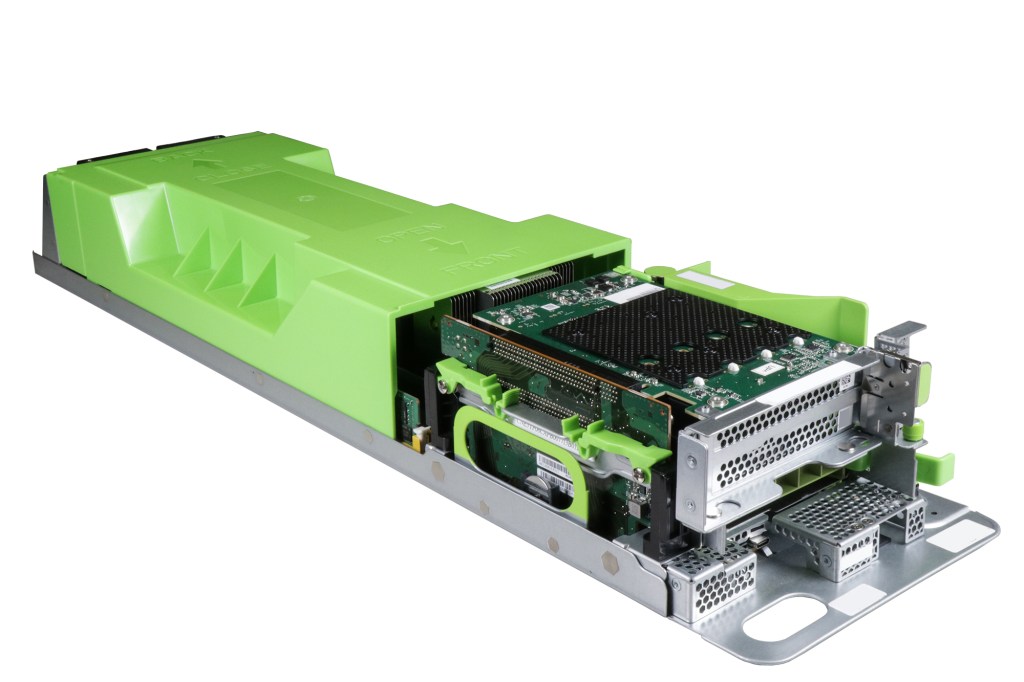

Peripheral component interconnect express (PCIe) hardware continues to push the boundaries of computing thanks to advances in transfer speeds, the number of available lanes for simultaneous data delivery, and a comparatively small footprint on motherboards. Today, PCIe connectivity-based hardware delivers faster data transfers and is one of the de facto methods to connect components to servers.

Our data centers contain millions of PCIe-based hardware components (including ASIC-based accelerators for video and inference, GPUs, NICs, and SSDs) connected either directly into a PCIe slot on a server’s motherboard or through a PCIe switch, such as one on a carrier card.

As with any hardware, PCIe-based components are susceptible to different types of hardware-, firmware-, or software-related failures and performance degradation. The variety of components and vendors, the array of failure modes, and the scale of the fleet make monitoring, data collection, and fault isolation for PCIe-based components difficult.

We’ve developed a solution to detect, diagnose, remediate, and repair these issues. Since we implemented it, this methodology has helped make our hardware fleet more reliable, resilient, and performant. We believe the wider industry can benefit from the same information and strategies, and can help build industry standards around this common problem.

Our tools for addressing PCIe faults

First, let’s outline the tools we use:

- PCIcrawler: An open source, Python-based command-line tool that can be used to display, filter, and export information about PCI or PCIe buses and devices, including PCI topology and PCIe Advanced Error Reporting (AER) errors. This tool produces readable, treelike output for easy debugging as well as machine-parsable JSON output that can be consumed by tools for deployment at scale.

- MachineChecker: An in-house tool for quickly evaluating the production worthiness of servers from a hardware standpoint. MachineChecker helps detect and diagnose hardware problems. It can be run as a command-line tool, and it is also available as a library and as a service.

- An in-house tool for taking a snapshot of the target host’s hardware configuration along with hardware modeling.

- An in-house utility service used to parse the custom dmesg and SELs to detect and report PCIe errors on millions of servers. This tool parses the logs on the server at regular intervals and records the rate of correctable errors in a file on the corresponding server. The rate is recorded per 10 minutes, per 30 minutes, per hour, per six hours, and per day. Depending on the platform and the service, this rate is used to decide which servers have exceeded the configured tolerable PCIe-corrected error rate threshold.

- IPMI Tool: An open source utility for managing and configuring devices that support the Intelligent Platform Management Interface (IPMI). IPMI is an open standard for monitoring, logging, recovery, inventory, and control of hardware that is implemented independent of the main CPU, BIOS, and OS. It’s mainly used to manually extract System Event Logs (SELs) for inspection, debugging, and study.

- The OpenBMC Project: A Linux distribution for embedded devices that have a baseboard management controller (BMC).

- Facebook auto remediation (FBAR): A system and a set of daemons that execute code automatically in response to detected software and hardware signals on individual servers. Every day, without human intervention, FBAR takes faulty servers out of production and sends requests to our data center teams to perform physical hardware repairs, making isolated failures a nonissue.

- Scuba: A fast, scalable, distributed, in-memory database built at Facebook. It is the data management system we use for most of our real-time analysis.
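
To make the error logging utility's reporting windows concrete, here is a minimal Python sketch that counts correctable-error events over the five windows listed above. The function name, the list-of-timestamps input, and the output keys are assumptions made for illustration; the in-house service's actual file format is not described in this post.

```python
from datetime import datetime, timedelta

# The five reporting windows named in the text.
WINDOWS = {
    "10m": timedelta(minutes=10),
    "30m": timedelta(minutes=30),
    "1h": timedelta(hours=1),
    "6h": timedelta(hours=6),
    "1d": timedelta(days=1),
}


def correctable_error_counts(event_times, now):
    """Count error events inside each trailing window that ends at `now`.

    `event_times` is a hypothetical list of datetimes, one per
    correctable-error event already parsed out of dmesg or the SEL.
    """
    return {
        name: sum(1 for t in event_times if now - span < t <= now)
        for name, span in WINDOWS.items()
    }
```

A caller would pass the parsed event timestamps and the current time, then write the resulting dictionary to a file for a checker to read later.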

How we studied PCIe faults

The sheer variety of PCIe hardware components (ASICs, NICs, SSDs, etc.) makes studying PCIe issues a daunting task. These components can have different vendors, firmware versions, and different applications running on them. On top of this, the applications themselves might have different compute and storage needs, usage profiles, and tolerances.

By leveraging the tools listed above, we’ve been conducting studies to ameliorate these challenges and ascertain the root cause of PCIe hardware failures and performance degradation.

Some of the issues were obvious. PCIe fatal uncorrected errors, for example, are definitely bad, even if there is only one instance on a particular server. MachineChecker can detect this and mark the faulty hardware (ultimately leading to it being replaced).

Depending on the error conditions, uncorrectable errors are further classified into nonfatal errors and fatal errors. Nonfatal errors are ones that cause a particular transaction to be unreliable, but the PCIe link itself is fully functional. Fatal errors, on the other hand, cause the link to be unreliable. Based on our experience, we’ve found that for any uncorrected PCIe error, swapping the hardware component (and sometimes the motherboard) is the most effective action.

Other issues can seem innocuous at first. PCIe-corrected errors, for example, are correctable by definition and are mostly corrected well in practice. Correctable errors are supposed to have no impact on the functionality of the interface. However, the rate at which they occur matters: if the rate exceeds a particular threshold, it leads to a degradation in performance that is not acceptable for certain applications.

We conducted an in-depth study to correlate the performance degradation and system stalls to PCIe-corrected error rates. Determining the threshold is another challenge, since different platforms and different applications have different profiles and needs. We rolled out the PCIe Error Logging Service, observed the failures in the Scuba tables, and correlated events, system stalls, and PCIe faults to determine the thresholds for each platform. We’ve found that swapping hardware is the most effective solution when PCIe-corrected error rates cross a particular threshold.
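
The two rules described so far (any uncorrected error is disqualifying, while corrected errors matter only above a per-platform rate) can be sketched as a single check. The platform names and threshold values below are invented placeholders, not the thresholds we determined, and the function name is hypothetical.

```python
# Hypothetical per-platform limits, in correctable errors per hour.
# Real values would come from a study like the one described above.
CORRECTABLE_PER_HOUR_LIMIT = {
    "platform_a": 100,
    "platform_b": 1000,
}


def is_faulty(platform, uncorrected_count, correctable_per_hour):
    """Return True if the hardware should be marked faulty.

    A single uncorrected error is enough; correctable errors count
    only when their hourly rate exceeds the platform's limit.
    """
    if uncorrected_count > 0:
        return True
    return correctable_per_hour > CORRECTABLE_PER_HOUR_LIMIT[platform]
```

Keeping the limits in a lookup table rather than in code reflects the point that thresholds differ by platform and need to be adjustable.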

PCIe defines two error-reporting paradigms: the baseline capability and the AER capability. The baseline capability is required of all PCIe components and provides a minimum defined set of error reporting requirements. The AER capability is implemented with a PCIe AER extended capability structure and provides more robust error reporting. The PCIe AER driver provides the infrastructure to support the PCIe AER capability, and we leveraged PCIcrawler to take advantage of this.
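
As one illustration of what the AER infrastructure makes available, Linux kernels with AER statistics support expose per-device counters as sysfs files such as aer_dev_correctable, aer_dev_nonfatal, and aer_dev_fatal. The sketch below parses that "name count" text format. It reads the kernel's counters directly and is not a description of how PCIcrawler is implemented; both function names are hypothetical.

```python
from pathlib import Path


def parse_aer_stats(text):
    """Parse lines like 'Bad TLP 3' into {'Bad TLP': 3}.

    Counter names may contain spaces, so split on the last
    whitespace run only.
    """
    stats = {}
    for line in text.splitlines():
        parts = line.strip().rsplit(None, 1)
        if len(parts) == 2 and parts[1].isdigit():
            stats[parts[0]] = int(parts[1])
    return stats


def read_aer_correctable(address, sysfs_root="/sys/bus/pci/devices"):
    """Read correctable AER counters for one PCI address.

    Returns None when the file is absent (no AER support, or a
    kernel that does not expose these statistics).
    """
    path = Path(sysfs_root) / address / "aer_dev_correctable"
    if not path.exists():
        return None
    return parse_aer_stats(path.read_text())
```

The parser is separated from the file read so it can be exercised with captured text on a machine that has no PCIe devices of interest.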

We recommend that every vendor adopt the PCIe AER functionality and PCIcrawler rather than relying on custom vendor tools, which lack generality. Custom tools are hard to parse and even harder to maintain. Moreover, integrating new vendors, new kernel versions, or new types of hardware requires a lot of time and effort.

Bad (down-negotiated) link speed (usually running at 1/2 or 1/4 of the expected speed) and bad (down-negotiated) link width (running at 1/2, 1/4, or even 1/8 of the expected link width) were other concerning PCIe faults. These faults can be difficult to detect without some sort of automated tool because the hardware is working, just not as optimally as it could.

Based on our study at scale, we found that most of these faults could be corrected by reseating hardware components. This is why we try this first before marking the hardware as faulty.

Since a small minority of these faults can be fixed by a reboot, we also record historical repair actions. We have special rules to identify repeat offenders. For example, if the same hardware component on the same server fails a predefined number of times in a predetermined time interval, after a predefined number of reseats, we automatically mark it as faulty and swap it out. In cases where the component swap does not fix it, we will have to resort to a motherboard swap.
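
The escalation described above (reseat first, then swap the component, then swap the motherboard) can be sketched as a function over a component's repair history. The action names, the default of two reseats, and the 30-day window are assumptions chosen for illustration, not our production rules.

```python
from datetime import datetime, timedelta


def next_repair_action(history, now, max_reseats=2, window=timedelta(days=30)):
    """Pick the next repair for one component on one server.

    `history` is a hypothetical list of (timestamp, action) pairs,
    where action is "reseat" or "swap_component". Only repairs
    inside the trailing `window` are considered.
    """
    recent = [action for ts, action in history if now - ts <= window]
    if "swap_component" in recent:
        # The component was already replaced and the fault came back.
        return "swap_motherboard"
    if recent.count("reseat") >= max_reseats:
        # Repeat offender: reseating has not held.
        return "swap_component"
    return "reseat"
```

Recording repair history per component and per server is what makes this kind of rule possible; without it, every recurrence would look like a first failure.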

We also keep an eye on the repair trend to identify nontypical failure rates. For example, in one case, by using data from custom Scuba tables and their illustrative graphs and timelines, we root-caused a down-negotiation issue to a specific firmware release from a specific vendor. We then worked with the vendor to roll out new firmware that fixed the issue.

It’s also important to rate-limit remediations and repairs as a safety net to prevent bugs in the code from mass draining and unprovisioning, which can result in service outages if not handled properly.
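
One simple way to build such a safety net is a sliding-window limiter that refuses new remediations once a budget is used up. This is a generic sketch, not the limiter used in our systems; the class name and parameters are hypothetical.

```python
from collections import deque


class RemediationRateLimiter:
    """Allow at most `max_actions` remediations per `window_seconds`."""

    def __init__(self, max_actions, window_seconds):
        self.max_actions = max_actions
        self.window_seconds = window_seconds
        self._times = deque()  # timestamps of allowed actions, oldest first

    def allow(self, now):
        """Return True and record the action if the budget permits it."""
        # Drop actions that have aged out of the window.
        while self._times and now - self._times[0] >= self.window_seconds:
            self._times.popleft()
        if len(self._times) >= self.max_actions:
            return False
        self._times.append(now)
        return True
```

With a limiter like this in front of drain and unprovision calls, a buggy check that suddenly flags every server would be stopped after the first few actions instead of emptying a cluster.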

Using this overall methodology, we’ve been able to add hardware health coverage and fix several thousand servers and server components. Every week, we’ve been able to detect, diagnose, remediate, and repair various PCIe faults on hundreds of servers.

Our PCIe fault workflow

Here’s a step-by-step breakdown of our process for identifying and fixing PCIe faults:

- MachineChecker runs periodically as a service on the millions of hardware servers and switches in our production fleet. Some of the checks include PCIe link speed, PCIe link width, as well as PCIe-uncorrected and PCIe-corrected error rate checks.

- For a particular PCIe endpoint, we find its parent (the upstream device) using PCIcrawler’s PCIe topology information. We consider both ends of a PCIe link.

- We leverage PCIcrawler’s output, which in turn depends on the generic registers LnkSta, LnkSta2, LnkCtl, and LnkCtl2.

- We calculate expected_speed as:

expected_speed = min(upstream_target_speed, endpoint_capable_speed, upstream_capable_speed).

- We calculate current_speed as:

current_speed = min(endpoint_current_speed, upstream_current_speed).

- current_speed must be equal to expected_speed. In other words, the current speed at either end of the link should equal the minimum of the endpoint capable speed, the upstream capable speed, and the upstream target speed.

- For PCIe link width, we calculate expected_width as:

expected_width = min(pcie_upstream_device_capable_width, pcie_endpoint_device_capable_width).

- If the current width of the upstream is less than expected_width, we flag this as a bad link.

- The PCIe Error Logging Service runs independently on our hardware servers and records the rates of corrected and uncorrected errors in a predetermined format (JSON).

- MachineChecker checks for uncorrected errors. Even a single uncorrected error event qualifies a server as faulty.

- During its periodic run, MachineChecker also looks up the generated files on the servers and checks them against a prerecorded source of truth in Configerator (our configuration management system) for a threshold per platform. If the rate exceeds a preset threshold, the hardware is marked as faulty. These thresholds are easily adjustable per platform.

- We also leverage PCIcrawler, which is also preinstalled on all our hardware servers, to check for PCIe AER issues.

- We leverage our in-house tool’s knowledge of hardware configuration to associate a PCIe address to a given hardware part.

- MachineChecker uses PCIcrawler (for link width, link speed, and AER information) and the PCIe Error Logging Service (which in turn uses SEL and dmesg) to identify hardware issues and create alerts or alarms. MachineChecker leverages information from our in-house tool to identify the hardware components associated with the PCIe addresses and assists data center operators (who may need to swap out the hardware) by supplying additional information, such as the component’s location, model information, and vendor name.

- Application production engineers can subscribe to these alerts or alarms and customize workflows for monitoring, alerting, remediation, and custom repair.

- A subset of all the alerts can undergo a particular remediation. We can also fine-tune the remediation and add special casing, restricting the remediation to, for example, a firmware upgrade if a particular case is well known.

- If the remediation fails enough times, a hardware repair ticket is automatically created so that the data center operators can swap the bad hardware component or server with a tested good one.

- We have rate limiting in several places as a safety net to prevent bugs in the code from mass draining and unprovisioning, which can result in service outages if not handled properly.
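
The link speed and link width checks from the steps above can be sketched in a few lines of Python. The LinkEnd class and its field names are hypothetical stand-ins for values a tool such as PCIcrawler reports for each end of a link; speeds are assumed to be in GT/s and widths in lanes.

```python
from dataclasses import dataclass


@dataclass
class LinkEnd:
    """Link attributes for one end of a PCIe link (hypothetical shape)."""
    capable_speed: float  # highest speed this end supports, GT/s
    current_speed: float  # negotiated speed, GT/s
    target_speed: float   # configured target speed, GT/s (used for upstream)
    capable_width: int    # highest lane count this end supports
    current_width: int    # negotiated lane count


def link_problems(endpoint, upstream):
    """Return a list of problems found on the endpoint-upstream link."""
    problems = []

    expected_speed = min(
        upstream.target_speed, endpoint.capable_speed, upstream.capable_speed
    )
    current_speed = min(endpoint.current_speed, upstream.current_speed)
    if current_speed != expected_speed:
        problems.append(f"bad link speed: {current_speed} != {expected_speed}")

    expected_width = min(upstream.capable_width, endpoint.capable_width)
    if upstream.current_width < expected_width:
        problems.append(
            f"bad link width: x{upstream.current_width} < x{expected_width}"
        )
    return problems
```

For example, an endpoint capable of 8.0 GT/s at x16 behind an upstream port capable of 16.0 GT/s should run at 8.0 GT/s and x16; if the link trains at 2.5 GT/s and x8, both checks fire.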

We’ve added hardware health coverage and fixed several thousand servers and server components with this methodology. We continue to detect, diagnose, remediate, and repair hundreds of servers every week with various PCIe faults. This has made our hardware fleet more reliable, resilient, and performant.

We’d like to thank Aaron Miller, Aleksander Książek, Chris Chen, Deomid Ryabkov, Wren Turkal and many others who contributed to this work in different aspects.