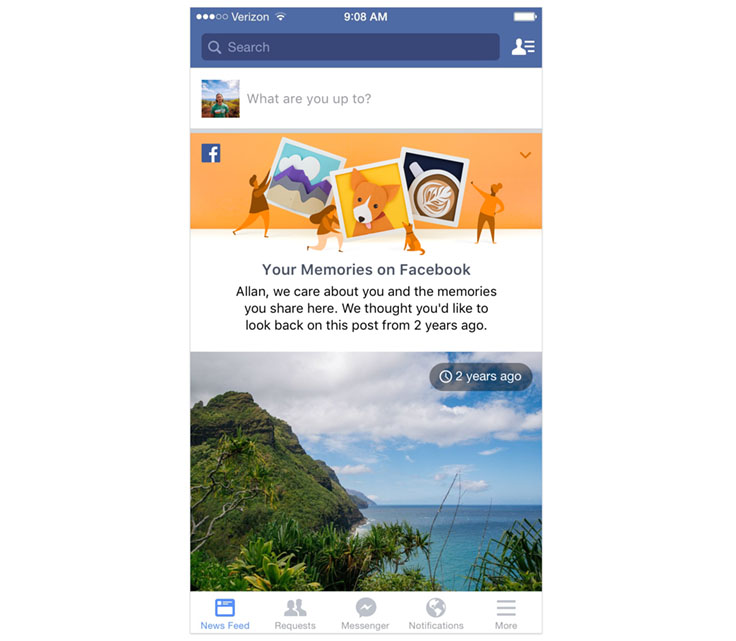

One year ago, we launched On This Day to make it easier to relive and share memories on Facebook. Since launch, On This Day has been used by hundreds of millions of people globally.

Research and engineering

Because memories are so personal and unique, we wanted to make sure On This Day shows people the memories they most likely want to see and share, especially when it comes to the memories they see in News Feed.

Specifically, over the past year, we focused on three areas to optimize the product experience: user experience research, filtering, and ranking.

User experience (UX) research

To better understand what types of memories are best to show people in News Feed, we listened to feedback from people through UX research. In these sessions, we learned just how complex memories can be. Things that might have been meaningful or pleasant when they were first posted can turn into unpleasant or less important memories over time, and vice versa. While we can’t predict how a person will react to seeing a specific piece of content years later, we can apply some of the technologies we have — such as filtering and ranking algorithms — to increase the chances of showing you something that is meaningful and relevant, and that you actually want to reflect on and share.

Filtering and control

Based on user research, we knew that there were certain kinds of content people would rather not see in On This Day memories. Using this feedback, we developed a set of automatic filters for On This Day that are applied to the memories we surface in News Feed. For example, we automatically filter out memories with exes or people who are blocked. We also filter out memories that a person dismissed so that we won’t show them again in the future.
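To make the filtering step concrete, here is a minimal sketch of how such automatic filters might be expressed. It is an illustration only, not the production implementation: the `Memory` and `ViewerContext` types, their fields, and the function names are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Memory:
    """Hypothetical stand-in for a candidate memory."""
    memory_id: str
    tagged_people: frozenset


@dataclass
class ViewerContext:
    """Hypothetical per-person data that the filters consult."""
    ex_partners: set = field(default_factory=set)
    blocked_people: set = field(default_factory=set)
    dismissed_memory_ids: set = field(default_factory=set)


def passes_automatic_filters(memory, viewer):
    """Return True if none of the automatic filters reject the memory."""
    # Filter out memories that include an ex or a blocked person.
    excluded_people = viewer.ex_partners | viewer.blocked_people
    if memory.tagged_people & excluded_people:
        return False
    # Filter out memories the person has already dismissed.
    if memory.memory_id in viewer.dismissed_memory_ids:
        return False
    return True


def filter_memories(memories, viewer):
    """Keep only the memories that pass every automatic filter, in order."""
    return [m for m in memories if passes_automatic_filters(m, viewer)]
```

Each filter is an independent early return, so a new rule can be added without touching the existing ones.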

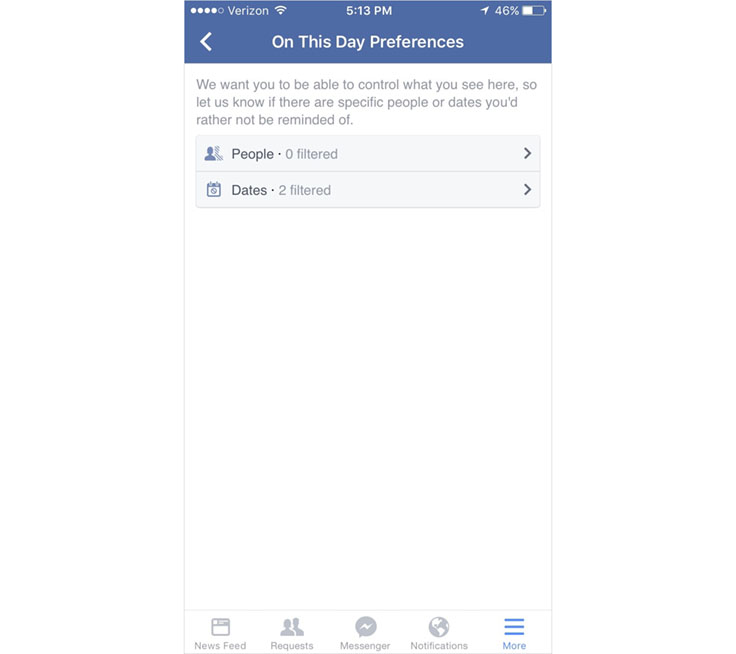

In addition to the automatic filters we apply, we also heard feedback that people wanted more control over the memories they see. We responded by building Preferences — a feature that allows people to blocklist specific people or dates across the On This Day experience (News Feed stories, the On This Day page, and notifications). Preferences are available on desktop and will be rolling out on mobile in the coming weeks.
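As a rough sketch of what a preferences check could look like, the example below models a blocklist of people and dates. The `Preferences` class and its fields are hypothetical, and representing blocked dates as inclusive date ranges is an assumption made for the example.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class Preferences:
    """Hypothetical container for a person's On This Day blocklists."""
    blocked_people: set = field(default_factory=set)
    # Assumed representation: (start, end) pairs, both ends inclusive.
    blocked_date_ranges: list = field(default_factory=list)

    def allows(self, tagged_people, posted_on):
        """Return True if a memory is allowed by these preferences."""
        # Reject memories that include anyone on the people blocklist.
        if self.blocked_people & set(tagged_people):
            return False
        # Reject memories posted inside any blocked date range.
        return not any(
            start <= posted_on <= end
            for start, end in self.blocked_date_ranges
        )
```

Because the same check can be called from any surface, one `Preferences` object could in principle back News Feed stories, the On This Day page, and notifications alike.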

Ranking

After applying automatic filters and the person’s preferences, we then rank potential memories so we can surface the most meaningful ones in the form of a News Feed story. The memories are ranked by a machine-learning model that we developed at Facebook. This model is trained in real time and learns continuously; it gets better and more accurate at predicting what memories people want to see as they interact more with On This Day. The training uses signals that fall into two categories: (1) personalization and (2) content understanding.
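The idea of a model that learns continuously from interactions can be illustrated with a small online learner. The sketch below uses logistic regression updated one example at a time; this is a stand-in chosen for brevity, since this post does not specify the model type, and the class name, feature layout, and learning rate are all assumptions.

```python
import math


class OnlineLogisticRanker:
    """Toy online learner: one gradient step per observed interaction."""

    def __init__(self, n_features, learning_rate=0.1):
        self.weights = [0.0] * n_features
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict(self, features):
        """Estimated probability that the person engages with the memory."""
        z = self.bias + sum(w * x for w, x in zip(self.weights, features))
        z = max(-30.0, min(30.0, z))  # clamp to keep exp() well behaved
        return 1.0 / (1.0 + math.exp(-z))

    def update(self, features, label):
        """Apply one SGD step; label is 1 for a share, 0 for a dismiss."""
        error = self.predict(features) - label
        self.weights = [
            w - self.learning_rate * error * x
            for w, x in zip(self.weights, features)
        ]
        self.bias -= self.learning_rate * error

    def rank(self, candidates):
        """Sort (memory_id, features) pairs by predicted score, best first."""
        return sorted(candidates, key=lambda c: self.predict(c[1]), reverse=True)
```

In this sketch, every share or dismiss becomes a call to `update`, so the ordering returned by `rank` shifts as more interactions arrive.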

Personalization

We use signals that allow us to tailor and customize the On This Day experience for each person. These signals include a person’s previous interactions with On This Day (e.g., shares, dismisses), their demographic information (e.g., age, country), and the attributes of the memory (e.g., content type, number of years ago). For example, if a person has shared many memories from On This Day in the past, we can dial up the number of memories we show them in News Feed in the future. Similarly, if a person has dismissed many memories, then we reduce the number of On This Day stories they see in News Feed moving forward.
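One simple way to picture the dial-up and dial-down behavior is a budget that scales with a person's share rate. The function below is a hypothetical heuristic written for illustration; the name `feed_story_budget`, the default values, and the linear scaling are assumptions, and in practice this behavior would come out of the ranking model rather than a hand-written rule.

```python
def feed_story_budget(shares, dismisses, base_budget=3, max_budget=7):
    """Return how many On This Day stories to allow in a given period.

    All numbers here are illustrative assumptions, not production values.
    """
    total = shares + dismisses
    if total == 0:
        # No interaction history yet: fall back to the default budget.
        return base_budget
    share_rate = shares / total
    # A share rate of 0.5 keeps the base budget; higher rates scale it up
    # toward 2x, lower rates scale it down toward zero.
    scaled = round(base_budget * 2 * share_rate)
    return max(0, min(max_budget, scaled))
```

A person who mostly shares ends up with a budget above the default, and a person who mostly dismisses ends up below it.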

Content understanding

The On This Day experience also relies on a computer vision platform, a core technology that powers media understanding at Facebook. This engine, developed by the Computer Vision team inside the Applied Machine Learning organization, leverages state-of-the-art computer vision and machine-learning techniques to better understand what is in a particular photo or video. It is built on top of a deep convolutional neural network trained on millions of examples and is capable of recognizing a wide variety of visual concepts. It can detect objects, scenes, actions, places of interest, whether a photo or video contains objectionable content, and more. It also has the capability to learn new visual concepts within minutes and to apply them to incoming photos and videos. This allows us to know that a photo shows a cat, is about skiing, was taken at the beach, includes the Eiffel Tower, and so on.

Combining this technology with findings from UX research, we learned that understanding what is in a memory is very important for surfacing the right one in News Feed. Specifically, we heard from people about the types of memories they are likely to appreciate. Based on this knowledge, we use signals from the computer vision platform to rank the content.
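As a sketch of how visual signals might feed into ranking, the example below turns per-concept confidences into a single content score. Everything in it is hypothetical: the concept names, the weights, and the weighted-sum formula are assumptions for illustration, and the input dictionary stands in for whatever the computer vision platform actually returns.

```python
def content_score(concept_confidences, concept_weights):
    """Weighted sum of detected visual concepts.

    concept_confidences: concept name -> detector confidence in [0, 1].
    concept_weights: concept name -> assumed preference weight, where
        positive means people tend to appreciate it. Unknown concepts
        contribute nothing.
    """
    return sum(
        concept_weights.get(concept, 0.0) * confidence
        for concept, confidence in concept_confidences.items()
    )


def rank_by_content(memories, concept_weights):
    """Sort (memory_id, concept_confidences) pairs, highest score first."""
    return sorted(
        memories,
        key=lambda m: content_score(m[1], concept_weights),
        reverse=True,
    )
```

In a fuller system, a score like this would be one input among the personalization signals rather than the sole ranking criterion.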

What’s next

At one year old, On This Day is still a very new feature. In the last year, we’ve learned a lot about how people want to interact with memories, and we’ve applied these learnings carefully in our engineering efforts. Not only have we seen the product grow to be used by hundreds of millions of people, but we’ve also seen how personalized it has become, thanks to the filtering and ranking algorithms we’ve developed and applied.

That said, we are only 1 percent finished. As we enter the second year of On This Day, we are planning to grow the product and improve the experience for the people who use it. We plan to make On This Day available to more people by surfacing more types of memories. As for the user experience, we plan to improve user controls and continue to refine our ranking algorithm by adding more input signals. Finally, we will keep listening to feedback from people to learn more about what makes memories meaningful.

Accessing On This Day

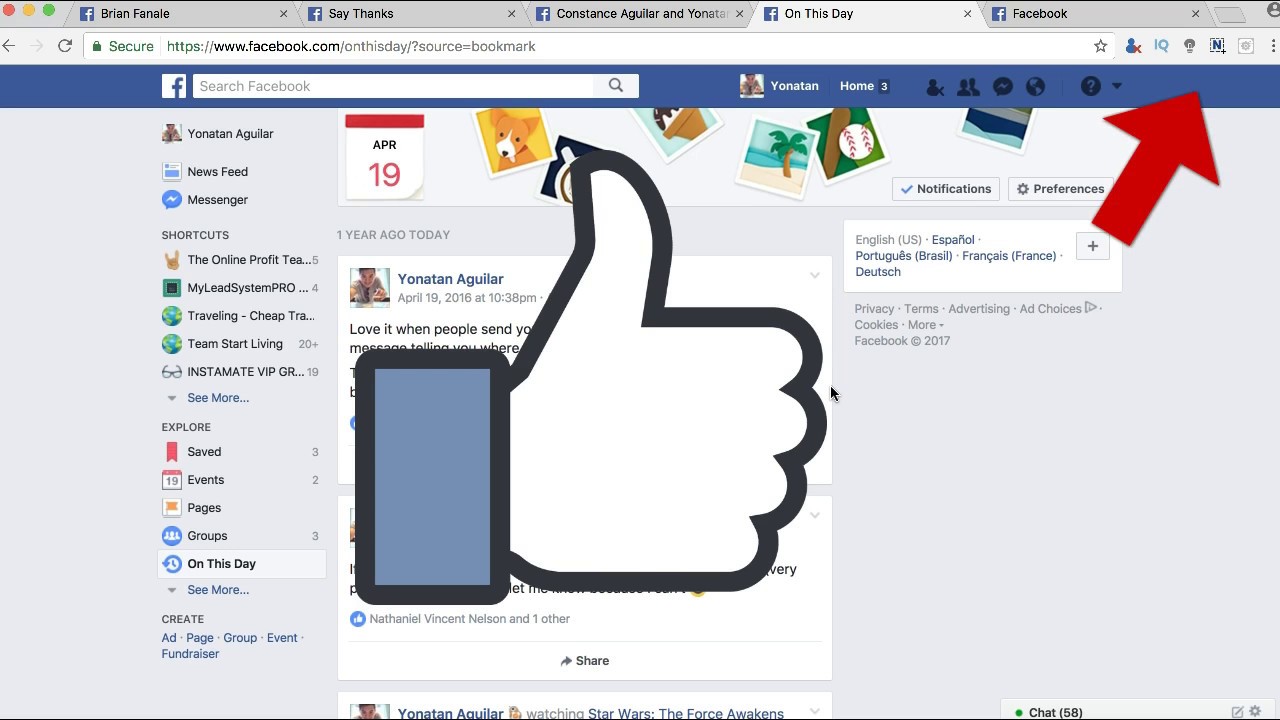

You can view your On This Day page by clicking on the On This Day bookmark, searching for “On This Day,” or visiting facebook.com/onthisday on desktop and mobile. From the On This Day page, you can subscribe to notifications, which will remind you whenever you have memories to look back on. You might also see a story in your News Feed that shows one of your memories for that day.

Thanks to the people across the product and infra teams — especially Memories & Celebrations, Applied Machine Learning, and Growth — who contributed to this project. In an effort to be more inclusive in our language, we have edited this post to replace blacklist with blocklist.