Facebook’s mission is to help people connect with one another, and as our 10th anniversary approached last month, we wanted to do something that would let everyone participate in the event together. After some discussion, we settled on the Look Back feature, which allows people to generate one-minute videos that highlight memorable photos and posts from their time on Facebook.

The feature proved to be a hit: people watched and shared hundreds of millions of these videos in the first few days following the launch on February 4. From an engineering perspective, Look Back proved to be a very interesting challenge – in no small part because we had to build it in less than a month.

How it began

In early January, we decided to move forward with the idea of creating Look Back videos. At that point, only a handful of people at Facebook knew of the concept, and we had only a faint idea of how we would make more than a billion videos in 25 days.

We knew from the start that there would be some big challenges in building it this quickly, and at this scale. Infrastructure engineers started to think about storage requirements, computing capacity and network bandwidth. Questions about the videos themselves needed to be answered. How would we animate photos for a billion people? How could we make the videos feel personal and present them in a way that would resonate?

In addition, this was already a busy time for Facebook’s infrastructure team – our new Paper app would launch just five days before February 4, and a week later the site would be inundated with activity from the start of the 2014 Winter Olympics.

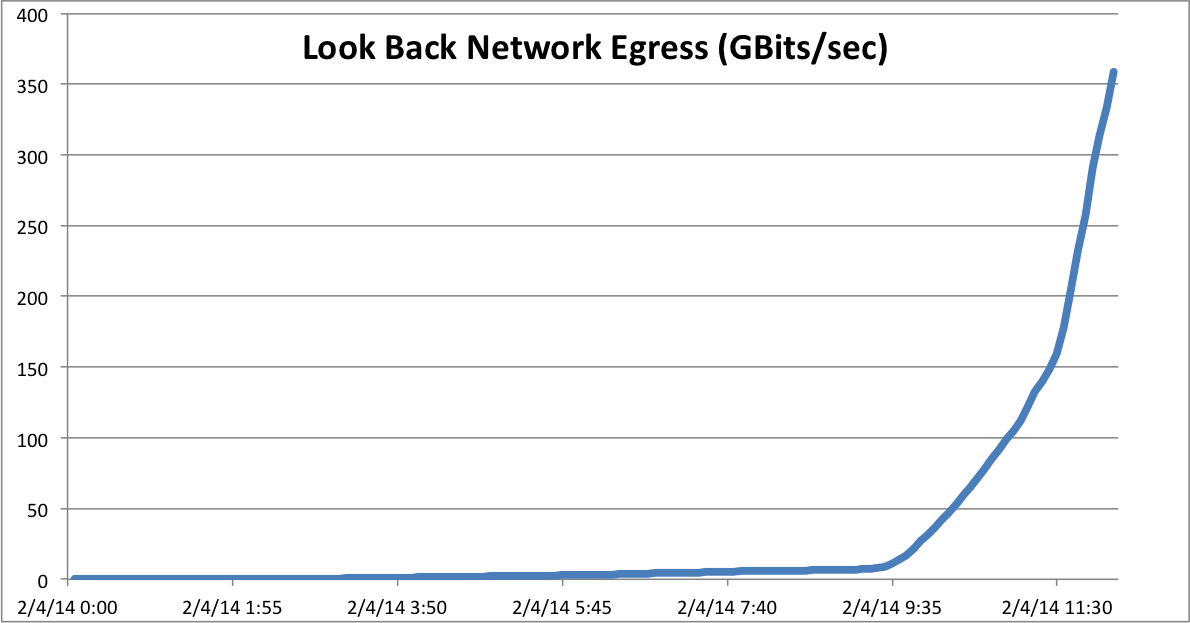

To plan for even more load, we began with some educated guesses, put them through some back-of-the-envelope math, and decided to plan for 25 million videos shared within a day of the anniversary. We estimated that for every video shared, five people would watch that video. Multiplying those views by the video size, dividing by seconds in a day, we calculated that we would need an average additional bandwidth of about 62 Gbps. Since traffic can be 2-3x higher at peak, we actually needed to plan for 187 Gbps. That’s more than 20,000 average US high-speed internet connections, fully saturated all the time. Our network engineers had some work to do.
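The back-of-the-envelope math above can be replayed in a few lines. This is a minimal sketch, not the planning tool we actually used: the video file size is not stated here, so the code back-solves the size implied by the 62 Gbps average. It assumes decimal units (1 Gbps = 10^9 bits per second) and exactly five views per shared video, and the function names are illustrative.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def average_gbps(shared_videos, views_per_share, video_size_mb):
    """Average bandwidth if all views are spread evenly over one day."""
    total_views = shared_videos * views_per_share
    total_bits = total_views * video_size_mb * 1e6 * 8
    return total_bits / SECONDS_PER_DAY / 1e9


def implied_video_size_mb(avg_gbps, shared_videos, views_per_share):
    """Back-solve the per-view video size (MB) from a stated average bandwidth."""
    total_bits = avg_gbps * 1e9 * SECONDS_PER_DAY
    total_views = shared_videos * views_per_share
    return total_bits / 8 / total_views / 1e6


def peak_gbps(avg_gbps, peak_factor):
    """Scale the daily average by an assumed peak-to-average factor."""
    return avg_gbps * peak_factor


size_mb = implied_video_size_mb(62, 25_000_000, 5)
print(f"implied video size: {size_mb:.1f} MB")
print(f"peak at 3x: {peak_gbps(62, 3)} Gbps")
```

Under these assumptions the implied size is a little over 5 MB per view. A 3x peak factor on the rounded 62 Gbps gives 186 Gbps, so the 187 Gbps figure presumably comes from the unrounded average.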

To store videos for everyone, we would need an estimated 25 petabytes of disk space. Visualized as a stack of standard 1TB laptop drives, this would be a tower measuring more than 775 feet tall – two-thirds the height of the Empire State Building. We couldn’t make the videos without having this space, so the disks had to be procured within a week or two at the most.
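The drive-tower comparison can be checked the same way. This is a rough sketch that assumes decimal units (1 PB = 1,000 TB) and a 9.5 mm drive height, a common thickness for 2.5-inch laptop drives; the height used for the original comparison is not stated, so treat that value as an assumption.

```python
MM_PER_FOOT = 304.8


def drive_count(total_pb, drive_tb=1):
    """Number of drives needed, using decimal units (1 PB = 1,000 TB)."""
    return int(total_pb * 1000 / drive_tb)


def stack_height_feet(drives, drive_height_mm=9.5):
    """Height of the drives stacked flat; 9.5 mm per drive is an assumption."""
    return drives * drive_height_mm / MM_PER_FOOT


drives = drive_count(25)
print(f"{drives} drives, about {stack_height_feet(drives):.0f} feet tall")
```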

To add to the suspense, the amount of computing power needed to render the videos was still an unknown.

Render faster, store better

When we kicked off the project, we could generate videos for only about 7 million people per day – and at that rate we would need months to render all the videos we needed. We had less than three weeks to go at this point.
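To put that gap in numbers, here is a small sketch of the arithmetic. It assumes a round one billion videos (the target was "more than a billion") and treats the full 21 days as available, so the required speedup it prints is a lower bound.

```python
import math


def days_needed(total_videos, videos_per_day):
    """Days required to render everything at a fixed daily rate."""
    return total_videos / videos_per_day


def required_rate(total_videos, days_available):
    """Minimum videos per day needed to finish within the deadline."""
    return math.ceil(total_videos / days_available)


TOTAL_VIDEOS = 1_000_000_000   # assumption: round figure for "more than a billion"
INITIAL_RATE = 7_000_000       # videos per day at project kickoff
DAYS_LEFT = 21                 # assumption: upper bound for "less than three weeks"

print(f"{days_needed(TOTAL_VIDEOS, INITIAL_RATE):.0f} days at the initial rate")
needed = required_rate(TOTAL_VIDEOS, DAYS_LEFT)
print(f"{needed:,} videos/day needed, a {needed / INITIAL_RATE:.1f}x speedup")
```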

How could we expedite the process? It was time to divide and conquer. We quickly split the entire pipeline into smaller pieces that could evolve in parallel, and many volunteers jumped in to figure out how to tackle the following tasks:

- Store the videos

- Obtain enough computing capacity

- Stay within the electrical power limits of our data centers

- Ensure sufficient network bandwidth (internal and to the Internet)

- Choose compelling photos and status updates for the videos

- Distribute and schedule the video rendering jobs

- Animate the content, and render the videos

- Design and build the web and mobile front-ends

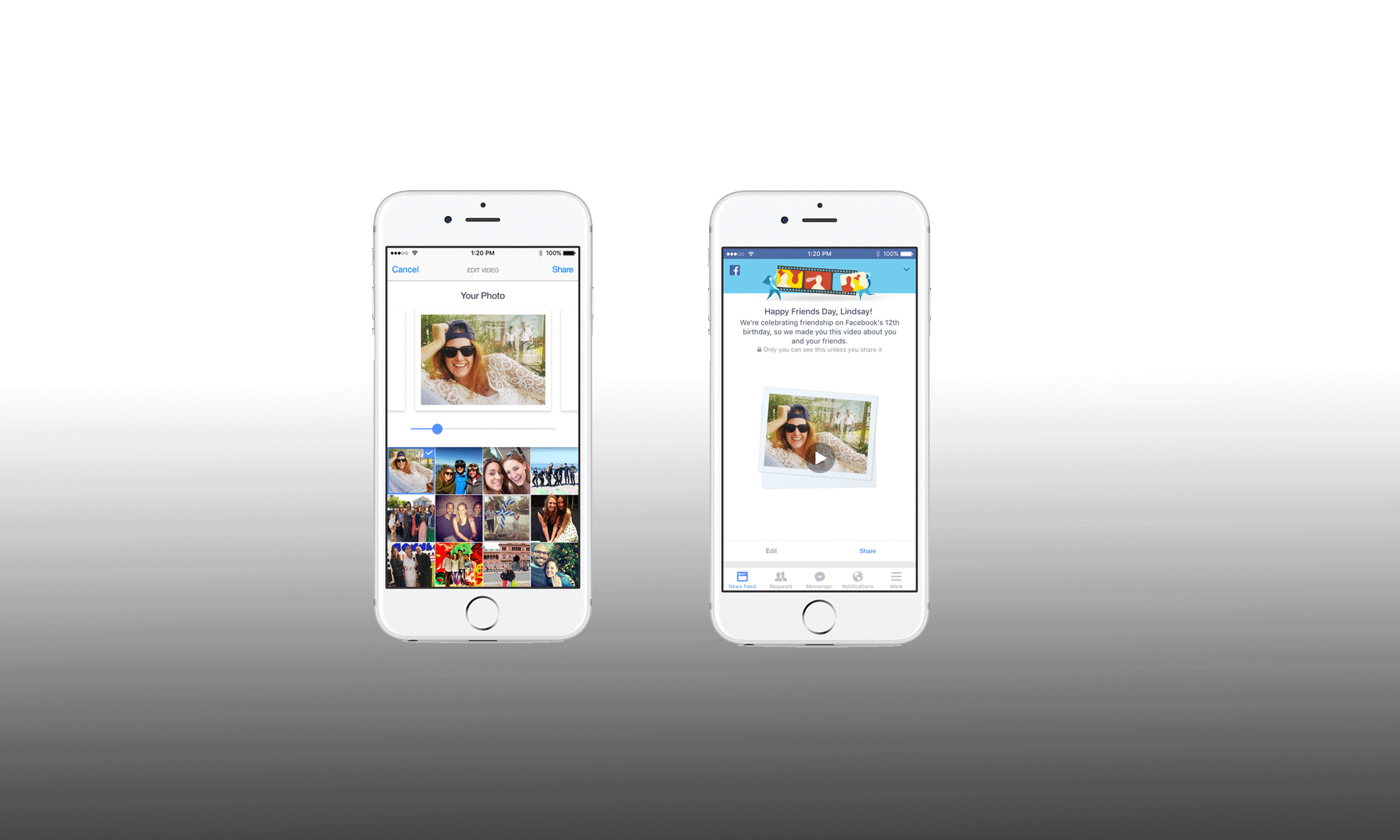

- Design and build an editing interface to let people customize their videos

- Enable the edited videos to be rendered quickly on-demand

- Manage the deployment of all these software iterations

For storage, we reviewed how our Haystack media servers were configured, including their geographical location in the US. Particularly important was the need to prevent disruptions to normal site operations. So we decided to isolate these videos on their own servers across both US coasts and treat them as a different video type from regular uploads. Additionally, we decided to keep fewer archived copies of the Look Back videos to save space and time.

But even if we had every drive in the system working in perfect unison, we would easily saturate the top-of-rack switches moving all that data in and out. The easiest solution to this problem was to simply deploy more hardware, which meant we had to find, provision, configure, and deploy even more racks to spread the read/write workload across more network switches.

For compute, although we had tens of thousands of servers available, our data center power efficiency plans were not designed for all of them to be running at full capacity at all times. We decided to track power usage while the jobs were running and added dials to the software to allow us to slow down if needed.
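As an illustration of what such a dial might look like, here is a hypothetical sketch. It is not the code we ran: the function name, the thresholds, and the 10% step are all assumptions chosen for the example. The idea is to shrink the number of concurrent render workers when measured power nears the limit and to grow it again when there is headroom.

```python
def next_worker_count(workers, power_kw, limit_kw, *, max_workers,
                      min_workers=1, high_water=0.90, low_water=0.75,
                      step_percent=10):
    """Return the worker count for the next interval (hypothetical dial).

    Back off when power use reaches high_water of the limit, ramp up when
    it falls to low_water or below, and hold steady in between.
    """
    utilization = power_kw / limit_kw
    step = max(1, workers * step_percent // 100)
    if utilization >= high_water:
        target = workers - step      # too close to the limit: slow down
    elif utilization <= low_water:
        target = workers + step      # plenty of headroom: speed up
    else:
        target = workers             # inside the comfort band: hold
    return max(min_workers, min(max_workers, target))


# Example readings against a 100 kW limit (made-up numbers).
for reading_kw in (95, 80, 70):
    print(reading_kw, next_worker_count(1000, reading_kw, 100, max_workers=2000))
```

The gap between the two thresholds keeps the worker count from oscillating when power use hovers near a single cutoff.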

With the initial setup in place, we tested the end-to-end pipeline with a prototype of the Look Back feature on about 150 employees. We continued to secure more capacity for the feature, and the team made hourly code changes and re-rendered videos to ensure that the software worked consistently and reliably for everyone. On January 24, we launched the Look Back videos internally to all Facebook employees. While there were still some bugs at this point, the feedback on the feature was overwhelmingly positive, and more people pitched in to help scale it.

Concurrently, a second team of engineers turned their attention to another issue: ensuring that the Look Back tool wouldn’t disrupt the rest of Facebook. We began by monitoring power on the capacity we were claiming across our data centers. We usually understand application behavior and can identify power limits, but given our deadline, we knew we would be pushing the envelope. Once we determined that storage was holding up and the thousands of servers that had been provisioned were ready to work, we alerted our CDN partners to expect a high volume of traffic.

With just a few days to go, we realized that we had a chance to hit our target – what had originally been estimated as a six-month task was being compressed into a 96-hour all-out assault.

By February 3, we’d done it. We had all the videos ready for launch, and we had accomplished it a day early. Over the previous six days, we watched the space used in Haystack grow from 0 bytes to 11 petabytes, rendering over 9 million videos per hour at our fastest. We ended up needing less than half of the space we estimated for two reasons: we were able to reduce the replication factor for these videos, since they became easier to regenerate, and we had managed to optimize the video size without compromising quality.

Happy birthday, Facebook! Let’s ship!

Finally, on February 4, we shipped. People saw the new feature, checked out their videos, and rapidly began sharing those videos with friends.

We had anticipated that about 10% of the people who saw their video would share it. Instead, to our surprise, well over 40% of the viewers were sharing. As much stress as this put on our infrastructure, it also answered the one question we couldn’t predict in advance: would the videos be a hit? Within a few hours of launch, it was clear that the answer was a resounding yes, and outgoing network traffic was nearly double our projected peak.

Figure: Outgoing bandwidth for Look Back videos

Shortly after 12:00 pm Pacific time, Look Back videos were going out at over 450 Gbps – which is big even by Facebook standards.

When all was said and done, we took a step back to look at the numbers:

- Over 720 million videos rendered, with more than 9 million rendered per hour at our fastest

- More than 11 petabytes of storage used

- More than 450 Gbps outgoing bandwidth at peak and 4 PB egress within days

- Over 200 million people watched their Look Back movie in the first two days, and more than 50% shared it

And all of this came without a single power breaker tripping – which made our data center teams very happy.
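A few derived figures help put these numbers in context. The sketch below is only a rough cross-check: it assumes decimal petabytes, and it assumes all 720 million videos were rendered during the six days before launch, which the figures above do not state outright. The per-video storage includes whatever replicas were kept, so it is not the size of a single file.

```python
PB = 10**15  # decimal petabyte, in bytes

videos_rendered = 720_000_000
storage_bytes = 11 * PB
render_days = 6                 # assumption: all rendering fit in the six days before launch
peak_videos_per_hour = 9_000_000
projected_peak_gbps = 187
observed_peak_gbps = 450

avg_videos_per_hour = videos_rendered / (render_days * 24)
stored_mb_per_video = storage_bytes / videos_rendered / 1e6
bandwidth_ratio = observed_peak_gbps / projected_peak_gbps

print(f"average render rate: {avg_videos_per_hour:,.0f} videos/hour")
print(f"peak vs. average render rate: {peak_videos_per_hour / avg_videos_per_hour:.1f}x")
print(f"storage per video (with replicas): {stored_mb_per_video:.1f} MB")
print(f"observed vs. projected peak bandwidth: {bandwidth_ratio:.1f}x")
```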

30 teams working as one

No official team owned the Look Back feature; it came about only because dozens of people from across the company came together to do something they found meaningful and important.

Normally, our hardware teams plan months in advance and work very deliberately. In January, they suddenly needed to move tens of thousands of computers to new tasks overnight. Anytime servers are provisioned, a small percentage will fail and need maintenance. Dealing with those failures can be time-consuming, since a person often has to physically walk to a computer inside a huge data center, troubleshoot it, and replace any failed parts. The data center teams stayed around the clock to make sure that every provisioned machine was working properly.

This snowball effect was not new for successful projects at Facebook. For Look Back, two things made it possible. First, Facebook employees are empowered to work on the things they believe are important. Second, the social fabric of the company is so strong that everyone knew other people on the impromptu team, so nobody had to walk into a roomful of strangers. For the dozens of people orienting themselves in a new and rapidly moving effort, the friendly energy made all the difference.

The Look Back project represented the things we love about Facebook culture: coming up with bold ideas, moving fast to make them real, and helping hundreds of millions of people connect with those who are important to them. Happy birthday, Facebook! Here’s to many more.

Contributors: Aaron Theye, Adam Sprague, Alex Kramarov, Alexey Spiridonov, Alicia Dougherty-Wold, Allan Huey, Amelia Granger, Andrey Goder, Andrew Oliver, Andrew Zich, Angelo Failla, Ankur Dahiya, Austin Dear, Bill Fisher, Bill Jia, Cameron Ewing, Carl Moran, Chelsea Randall, Colleen Henry, Cory Hill, Dan Cernogorsky, Dan Lindholm, David Callies, David Swafford, Doug Porter, Elizabeth Gilmore, Emir Habul, Eric Bergen, Ernest Lin, Eugene Boguslavsky, Fabio Costa, Felipe van de Wiel, Garo Taft, Gary Briggs, Goranka Bjedov, Hussain Ali, James March, Jeff Qin, Jimmy Williams, Joe Gasperetti, John Fremlin, Jonathon Paul, Josh Higgins, Kaushik Iyer, Key Shin, Khallil Mangalji, Kowshik Prakasam, Krish Bandaru, Kyre Osborn, Ladi Prosek, Mathieu Henaire, Matt Rhodes, Margot Merrill Fernandez, Marc Celani, Mark Drayton, Mark Konetchy, Mike Nugent, Mike Yaghmai, Nate Salciccioli, Neil Blakey-Milner, Nick Kwiatek, Niha Mathur, Omid Aziz, Paul Barbee, Peter Jordan, Peter Zich, Pradeep Kamath, Raylene Yung, Rebecca Van Dyck, Richard Nessary, Robert Boyce, Santosh Janardhan, Sarah Hanna, Scott Trattner, Shu Wu, Skyler Vander Molen, Trisha Rupani, Vihang Mehta, Viswanath Sivakumar, Wendy Mu, Yusuf Abdulghani