WHAT THE RESEARCH IS:

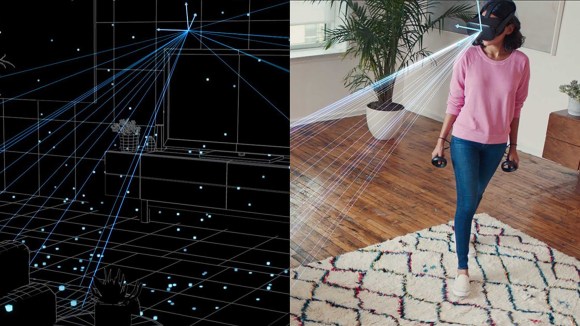

DeepFocus is a new AI-powered framework for rendering natural, realistic focus effects in VR. It works with advanced prototype headsets, rendering blur in real time and at various focal distances. For example, when someone wearing a DeepFocus-enabled headset looks at a nearby object, it immediately appears crisp and in focus, while background objects appear out of focus, just as they would in real life. This defocused blur (also known as retinal blur) is important for achieving realism and depth perception in VR. DeepFocus is the first system able to produce this effect in real time for VR applications. We are now open-sourcing our work and data set to help others in the VR research community.

HOW IT WORKS:

Some traditional approaches, such as using an accumulation buffer, can achieve physically accurate defocused blur. But they can’t produce the effect in real time for sophisticated, rich content, because the processing demands are too high for even state-of-the-art chips. Instead, we solved this problem using deep learning. We developed a novel end-to-end convolutional neural network that produces an image with accurate retinal blur as soon as the eye looks at a different part of the scene. The network includes new volume-preserving interleaving layers that reduce the spatial dimensions of the input while fully preserving image details. The convolutional layers then operate at this reduced spatial resolution, which significantly reduces runtime.
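One way to picture a volume-preserving interleaving layer is as a space-to-depth rearrangement: each r x r block of pixels is moved into the channel dimension, so width and height shrink by a factor of r while the total number of values stays the same. The NumPy sketch below illustrates that idea under this assumption; it is not the DeepFocus implementation. The function names `interleave` and `deinterleave`, the block size `r`, and the (height, width, channels) layout are hypothetical choices made for this example.

```python
import numpy as np


def interleave(x: np.ndarray, r: int) -> np.ndarray:
    """Rearrange an (H, W, C) image into (H/r, W/r, C*r*r).

    Hypothetical helper: every r x r pixel block is folded into the
    channel axis, so no values are discarded.
    """
    h, w, c = x.shape
    if h % r or w % r:
        raise ValueError("height and width must be divisible by r")
    # Split each spatial axis into (block index, offset inside block).
    x = x.reshape(h // r, r, w // r, r, c)
    # Bring both block indices first, then the two in-block offsets.
    x = x.transpose(0, 2, 1, 3, 4)
    # Fold the in-block offsets into the channel axis.
    return x.reshape(h // r, w // r, r * r * c)


def deinterleave(y: np.ndarray, r: int) -> np.ndarray:
    """Exact inverse of interleave: (H/r, W/r, C*r*r) -> (H, W, C)."""
    hr, wr, crr = y.shape
    c = crr // (r * r)
    y = y.reshape(hr, wr, r, r, c)
    y = y.transpose(0, 2, 1, 3, 4)
    return y.reshape(hr * r, wr * r, c)


# Example: a small 4-channel (RGB-D style) image with made-up values.
rgbd = np.arange(4 * 6 * 4, dtype=np.float32).reshape(4, 6, 4)
packed = interleave(rgbd, 2)
restored = deinterleave(packed, 2)
```

In this sketch `packed` has a quarter of the pixel positions and four times the channels of `rgbd`, and `deinterleave` recovers the original array exactly. Convolutions applied to `packed` would visit fewer spatial positions, which is the intuition behind the reduced runtime described above.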

WHY IT MATTERS:

As research into new VR headset technologies advances, DeepFocus will be able to simulate the accurate retinal blur needed to produce extremely lifelike visuals. The platform also demonstrates that AI can help solve the challenges of rendering highly compute-intensive visuals in VR. DeepFocus provides a foundation to overcome practical rendering and optimization limitations for future novel display systems.

Because DeepFocus relies only on standard RGB-D (color and depth) input, it can work with nearly all existing VR games and applications. It is also compatible with all three types of accommodation-supporting headsets currently being explored in the VR research community: varifocal displays (such as Half Dome), multifocal displays (for example, prior work by Facebook Reality Labs, or FRL), and light field displays.

Learn more about how Facebook Reality Labs created DeepFocus.

READ THE FULL PAPER:

DeepFocus: Learned Image Synthesis for Computational Displays