Games provide a useful environment for artificial intelligence research. Within a game environment, labeled training data is nearly unlimited, low-cost, and reproducible, and it can be generated at a much higher rate than in real-world experiments. These attributes are helping research underway in Facebook’s AI Research lab (FAIR) pursue both short-term milestones, such as demonstrating the strength of AI in multiple complicated game environments, and long-term goals aimed at applying AI to real-world challenges. Game research helps us build AI models that can plan, reason, navigate, solve problems, collaborate, and communicate.

Despite the many benefits of using games for training, it can be difficult for individuals to conduct AI research in a game environment. Due to the limitations of many current learning algorithms, hundreds of thousands of rounds of gameplay are required, which is not possible without a sufficient supply of computational resources, e.g., high-performance computing platforms equipped with many CPUs, GPUs, or specialized hardware. Furthermore, the relevant algorithms are complex and delicate to tune. These problems compound as the complexity of the training environment increases and multiple AI agents are introduced.

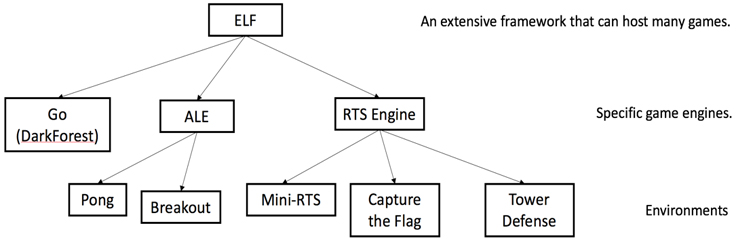

To address these issues and democratize AI research efforts, the FAIR team has created ELF: an Extensive, Lightweight, and Flexible platform for game research. ELF allows researchers to test their algorithms in various game environments, including board games, Atari games (via the Arcade Learning Environment), and custom-made real-time strategy games. Not only does it run on a laptop with a GPU, it also supports training AI in more complicated game environments, such as real-time strategy games, in just one day using only six CPUs and one GPU.

We’ve designed the interface to be easy to use: ELF works with any game with a C/C++ interface, and automatically handles concurrency issues like multithreading/multiprocessing. Additionally, we designed a clean Python user interface that provides a batch of game states ready for training. ELF also supports uses beyond gaming and can be a platform for general world simulators including physics engines.

We are excited to make the technical details and code available via open source for the broader AI research community to use. A pre-print of our paper on ELF can be found on arXiv.

Architecture

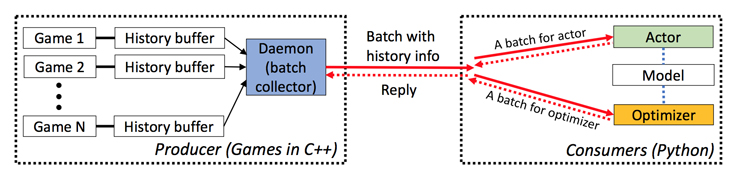

The architecture of ELF is relatively simple. On the C++ side, a simulator hosts several game instances that run concurrently. On the Python side, the AI models (deep learning, reinforcement learning, etc.) communicate with those instances while they run.

In a departure from existing game AI platforms that wrap a single game in one interface, ELF wraps a batch of games into one Python interface. This lets models and reinforcement learning algorithms obtain a batch of game states in every iteration, which decreases the amount of time needed to train models.
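The batching idea described above can be illustrated with a toy sketch. This is not ELF's API: the names `toy_game`, `toy_model`, `serve_batches`, and `run_demo`, along with the batch size and game count, are hypothetical, and Python threads stand in for the C++ game instances. The sketch only shows the pattern of many concurrent games each submitting a state, with one model call answering a whole batch.

```python
import queue
import threading


def toy_game(game_id, request_q, steps, results):
    """Stand-in for one game instance: send a state, wait for an action."""
    reply_q = queue.Queue(maxsize=1)
    state = 0
    for _ in range(steps):
        request_q.put((state, reply_q))
        action = reply_q.get()      # block until the model has answered
        state += action             # trivial placeholder "game dynamics"
    results[game_id] = state
    request_q.put((None, None))     # signal that this game has finished


def toy_model(states):
    """Stand-in for a neural network: one call handles the whole batch."""
    return [1 if s % 2 == 0 else 2 for s in states]


def serve_batches(request_q, num_games, batch_size):
    """Collect states into batches and answer each batch with one model call."""
    finished, pending, batch_sizes = 0, [], []
    while finished < num_games:
        state, reply_q = request_q.get()
        if state is None:
            finished += 1
        else:
            pending.append((state, reply_q))
        # Flush when the batch is full, or when every game still running
        # is already waiting (otherwise the remaining games would deadlock).
        active = num_games - finished
        if pending and len(pending) >= min(batch_size, active):
            actions = toy_model([s for s, _ in pending])
            for (_, q), action in zip(pending, actions):
                q.put(action)
            batch_sizes.append(len(pending))
            pending = []
    return batch_sizes


def run_demo(num_games=8, batch_size=4, steps=5):
    request_q = queue.Queue()
    results = {}
    threads = [
        threading.Thread(target=toy_game, args=(i, request_q, steps, results))
        for i in range(num_games)
    ]
    for t in threads:
        t.start()
    batch_sizes = serve_batches(request_q, num_games, batch_size)
    for t in threads:
        t.join()
    return batch_sizes, results


if __name__ == "__main__":
    sizes, final_states = run_demo()
    print("batch sizes:", sizes)
    print("final states:", final_states)
```

The model is invoked once per batch rather than once per game step, which is where the training-time savings would come from when the model runs on a GPU.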

We’ve also built in pairing flexibility between game instances and actor models. With this framework, it is now easy to pair a particular game instance with an actor model, or one instance with many actor models, or many instances with one actor model. Such flexibility offers rapid prototyping of algorithms, which helps researchers more quickly understand which models have the best performance.
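As a rough illustration of these pairings, the sketch below routes game states to actor models through a plain dictionary. It is an assumption-laden toy rather than ELF's actual interface: `dispatch`, the pairing tables, and the actors `policy_a` and `policy_b` are all hypothetical names introduced here.

```python
from collections import defaultdict


def dispatch(states, pairing, actors):
    """Route each game's state to its paired actors, one batch per actor.

    states:  dict mapping game id -> current state
    pairing: dict mapping game id -> list of actor names
    actors:  dict mapping actor name -> function(batch of states) -> outputs
    Returns a dict mapping (game id, actor name) -> that actor's output.
    """
    games_per_actor = defaultdict(list)
    for game_id, names in pairing.items():
        for name in names:
            games_per_actor[name].append(game_id)

    outputs = {}
    for name, game_ids in games_per_actor.items():
        batch = [states[g] for g in game_ids]
        for g, out in zip(game_ids, actors[name](batch)):
            outputs[(g, name)] = out
    return outputs


# Hypothetical actor models: each maps a batch of states to a batch of outputs.
actors = {
    "policy_a": lambda batch: [2 * s for s in batch],
    "policy_b": lambda batch: [-s for s in batch],
}
states = {0: 1, 1: 2, 2: 3}

one_to_one = {0: ["policy_a"]}
one_to_many = {0: ["policy_a", "policy_b"]}
many_to_one = {0: ["policy_a"], 1: ["policy_a"], 2: ["policy_a"]}

if __name__ == "__main__":
    for table in (one_to_one, one_to_many, many_to_one):
        print(dispatch(states, table, actors))
```

Keeping the pairing in a table separate from both the games and the models means switching between the three configurations requires changing only data, not code.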

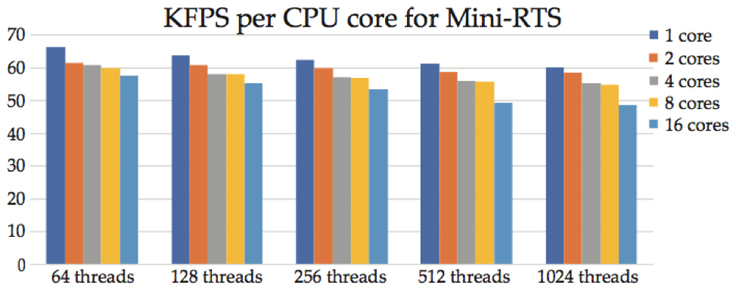

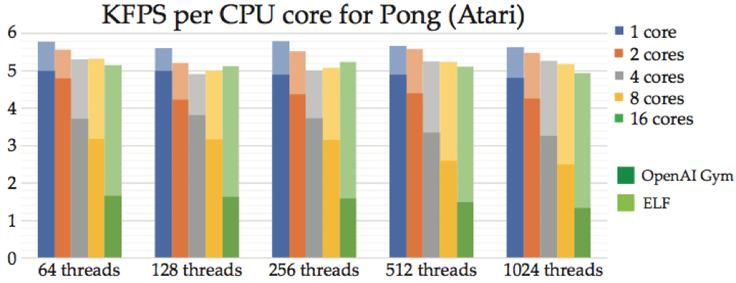

In our early experiments, ELF ran simulations faster and delivered nearly 30 percent faster training than OpenAI Gym on Atari games, using the same number of CPUs and GPUs. As more cores are added, ELF’s frame rate per core remains stable.

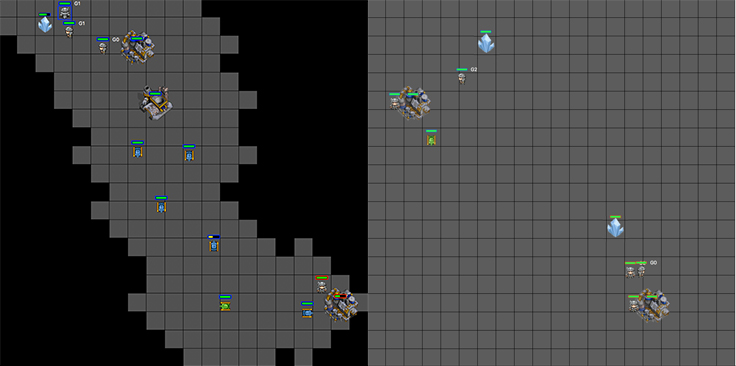

Mini-RTS: The real-time strategy game for research

Included with the ELF platform is a real-time strategy engine and environment called Mini-RTS. We wrote Mini-RTS to help test ELF. It is very fast: the game environment runs at 40,000 frames per second per core on a MacBook Pro. It captures the key dynamics of a real-time strategy game: both players gather resources, build facilities, explore unknown territory (terrain that is out of sight of the player), and attempt to control regions on the map. In addition, the engine has characteristics that facilitate AI research: perfect save/load/replay, full access to its internal game state, multiple built-in rule-based AIs, visualization for debugging, and a human-AI interface, among others. As a baseline, AIs we’ve trained on Mini-RTS have shown promising results, beating the built-in AI agent 70 percent of the time. These results show that it is possible to train AI to accomplish tasks and prioritize actions in relatively complex strategy environments.
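To make the save/load/replay property concrete, here is a minimal sketch of a deterministic toy simulator. It does not reflect Mini-RTS internals: the `ToyGame` class, its action names, and its resource rules are hypothetical. The point it illustrates is that a snapshot must capture the full internal state, including the random number generator, for a replay to be exact.

```python
import random


class ToyGame:
    """Hypothetical toy simulator whose full state can be saved and restored."""

    def __init__(self, seed):
        self.rng = random.Random(seed)
        self.tick = 0
        self.resources = 0

    def step(self, action):
        # Action names are placeholders; gathering yields a random amount.
        if action == "gather":
            self.resources += self.rng.randint(1, 5)
        elif action == "build" and self.resources >= 3:
            self.resources -= 3
        self.tick += 1
        return (self.tick, self.resources)

    def save(self):
        # Include the RNG state so later random draws repeat exactly.
        return (self.tick, self.resources, self.rng.getstate())

    def load(self, snapshot):
        self.tick, self.resources, rng_state = snapshot
        self.rng.setstate(rng_state)


if __name__ == "__main__":
    game = ToyGame(seed=0)
    game.step("gather")
    snapshot = game.save()

    actions = ["gather", "build", "gather", "gather", "build"]
    first_run = [game.step(a) for a in actions]

    game.load(snapshot)
    replay = [game.step(a) for a in actions]
    print("replay matches:", first_run == replay)
```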

With the ELF platform, we look forward to conducting research that helps computers handle exponentially large action spaces, long-delayed rewards, and incomplete information.