Facebook Marketplace was introduced in 2016 as a place for people to buy and sell items within their local communities. Today in the U.S., more than one in three people on Facebook use Marketplace, buying and selling products in categories ranging from cars to shoes to dining tables. Managing the posting and selling of that volume of products with speed and relevance is a daunting task, and the fastest, most scalable way to handle that is to incorporate custom AI solutions.

On Marketplace’s second anniversary, we are sharing how we use AI to power it. Whether someone is discovering an item to buy, listing a product to sell, or communicating with a buyer or seller, AI is behind the scenes making the experience better. In addition to the product index and content retrieval systems, which leverage our AI-based computer vision and natural language processing (NLP) platforms, we recently launched some new features that make the process simpler for both buyers and sellers.

For buyers, we use computer vision and similarity searches to recommend visually similar products (e.g., suggesting chairs that look similar to the one the buyer is viewing), and we offer the option to have listings translated into the buyer’s preferred language using machine translation. For sellers, we have tools that simplify the process of creating product listings by autosuggesting the relevant category or pricing, as well as a tool that automatically enhances the lighting in images as they’re being uploaded.

Building a product index

Visitors to Marketplace seamlessly interact with these AI-powered features from the moment they arrive to buy or sell something. From the very first search, results are recommended by a content retrieval system coupled with a detailed index that is compiled for every product. Since the only text in many of these listings is a price and a description that can be as brief as “dresser” or “baby stuff,” it was important to build in context from the photos as well as the text for these listings. In practice, we’ve seen a nearly 100 percent increase in consumer engagement with the listings since we rolled out this product indexing system.

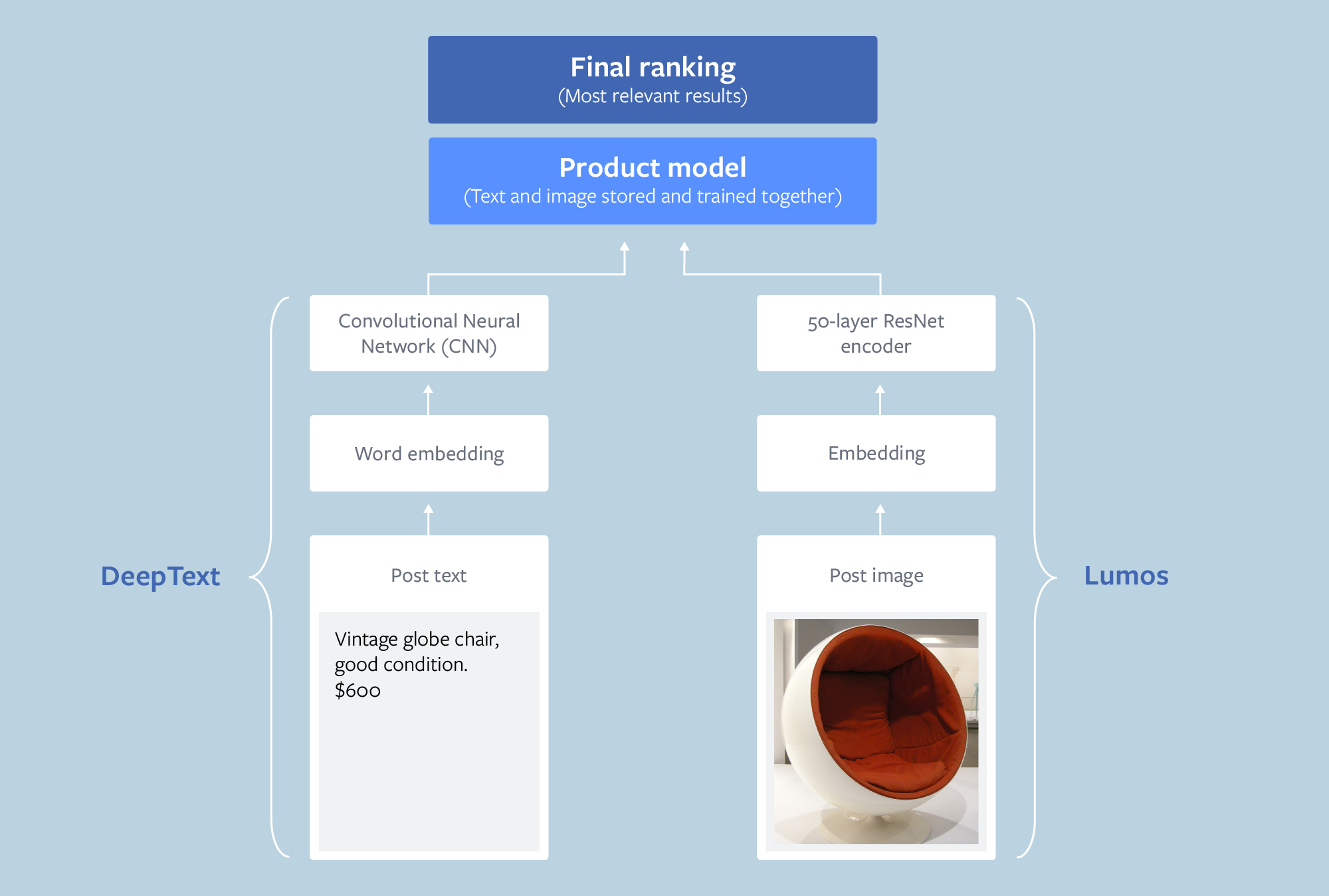

To accomplish this, we built a multimodal ranking system that incorporates Lumos, our image understanding platform, and DeepText, our text understanding engine. As a result, the index for each product includes layers for both the text and the images. To model the word sequence, we map each word from the title and description to an embedding and then feed the embeddings into convolutional neural networks. The image portion comes from a 50-layer ResNet encoder pretrained on an image classification task. The output of the image encoder and the text embedding are concatenated and fed into a multilayer perceptron, so that the text and image components are trained and stored together as the complete product model.
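To make the data flow concrete, here is a minimal forward-pass sketch of that kind of architecture: word embeddings go through a 1-D convolution with max pooling, the result is concatenated with an image feature vector, and a small multilayer perceptron produces the product embedding. It is an illustration under loud assumptions, not the Marketplace model: all sizes are hypothetical and tiny, the weights are random rather than trained, and the image vector is a placeholder standing in for the ResNet-50 output.

```python
import random

# All sizes below are hypothetical and kept tiny for illustration.
EMBED_DIM = 8      # word-embedding size
NUM_FILTERS = 4    # number of 1-D convolution filters over the word sequence
KERNEL = 3         # convolution window, in words
IMAGE_DIM = 16     # stand-in for the pooled ResNet-50 feature (2,048-d in a real ResNet-50)
HIDDEN = 12        # MLP hidden width
OUT_DIM = 6        # size of the final product embedding

rng = random.Random(0)


def rand_matrix(rows, cols):
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]


vocab = {}


def embed(word):
    # Random embedding per word, created on first use; a real model learns these.
    if word not in vocab:
        vocab[word] = [rng.gauss(0.0, 1.0) for _ in range(EMBED_DIM)]
    return vocab[word]


conv_filters = [rand_matrix(KERNEL, EMBED_DIM) for _ in range(NUM_FILTERS)]
W1 = rand_matrix(HIDDEN, NUM_FILTERS + IMAGE_DIM)
W2 = rand_matrix(OUT_DIM, HIDDEN)


def text_encoder(tokens):
    """1-D convolution over word embeddings, ReLU, then max pooling over positions."""
    vecs = [embed(t) for t in tokens]
    # Pad very short listings (e.g. just "dresser") so at least one window fits.
    while len(vecs) < KERNEL:
        vecs.append([0.0] * EMBED_DIM)
    features = []
    for f in conv_filters:
        acts = []
        for start in range(len(vecs) - KERNEL + 1):
            s = sum(f[k][d] * vecs[start + k][d]
                    for k in range(KERNEL) for d in range(EMBED_DIM))
            acts.append(max(0.0, s))
        features.append(max(acts))
    return features


def matvec(W, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def product_embedding(title, description, image_features):
    tokens = f"{title} {description}".lower().split()
    fused = text_encoder(tokens) + list(image_features)  # concatenate text and image parts
    hidden = [max(0.0, h) for h in matvec(W1, fused)]    # MLP hidden layer with ReLU
    return matvec(W2, hidden)                            # final product embedding


# The image vector would come from the ResNet encoder; here it is a placeholder.
vec = product_embedding("Dresser", "", [0.1] * IMAGE_DIM)
print(len(vec))  # 6
```

The padding step matters for listings like “dresser” or “baby stuff”: without it, a one-word description would produce no convolution windows at all, and the image branch would have to carry the whole representation.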

How the text and image of a product listing are trained and stored together as a product model.

To understand the relationship between buyer activity and product content, the system also incorporates a model for the buyer, built from embeddings of the demographic information in the person’s Facebook profile and of keywords from their searches within Marketplace. The system computes the cosine similarity between the buyer and product embeddings as a ranking score, quantifying how closely consumer and product data line up. The same models also let us use information gleaned from text, photos, and even logos to proactively detect and remove listings that violate our policies, which helps keep the platform safer.
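As a small illustration of the ranking score, the sketch below computes cosine similarity between a buyer embedding and several product embeddings and sorts products by it. The three-dimensional vectors and product names are hypothetical; in the system described above they would come from the trained buyer and product models.

```python
import math


def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # treat an all-zero embedding as unrelated to everything
    return dot / (norm_a * norm_b)


def rank_products(buyer_vec, products):
    """Return product IDs ordered by cosine similarity to the buyer embedding."""
    return sorted(products,
                  key=lambda pid: cosine_similarity(buyer_vec, products[pid]),
                  reverse=True)


# Hypothetical 3-d embeddings; real ones come from the trained buyer/product models.
buyer = [0.9, 0.1, 0.0]
catalog = {
    "oak_dresser": [0.8, 0.2, 0.1],
    "road_bike": [0.0, 0.1, 0.9],
    "pine_dresser": [0.7, 0.0, 0.0],
}
print(rank_products(buyer, catalog))  # ['pine_dresser', 'oak_dresser', 'road_bike']
```

Note that `pine_dresser` outranks `oak_dresser` even though its vector is shorter: cosine similarity depends only on direction, so a product’s score is not inflated simply because its embedding has a large magnitude.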

At this scale, there is a massive product inventory to scan for buyer relevance, so we needed a lightweight retrieval step. Similarity search reduces the computing load and speeds up the retrieval of relevant products. For this we use Facebook AI Similarity Search (FAISS), a library for efficient similarity search and clustering of dense vectors. Once we have the retrieval results, we perform a final ranking using a Sparse Neural Network model with online training. Because the ranking-stage model uses more data and more real-time signals (such as counters of the real-time events that happened on each product), it is more accurate. It is also computationally heavier, however, so we do not use it at the retrieval stage; we apply it only after the full set of possible items has been narrowed down to a short list.
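The two-stage pattern can be sketched in a few lines. Stage 1 below is an exact top-k by inner product over the whole inventory, which is what a flat inner-product index in FAISS computes (FAISS also offers approximate indexes for larger collections). Stage 2 rescores only the short list with a more expensive function. The linear rescoring and the `recent_views` counter are hypothetical stand-ins for the Sparse Neural Network model and its real-time signals, not a description of that model.

```python
import heapq


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def retrieve(query, index, k):
    # Stage 1: cheap exact top-k by inner product over the whole inventory.
    return heapq.nlargest(k, index, key=lambda pid: dot(query, index[pid]))


def rerank(query, candidates, index, recent_views, view_weight=0.05):
    # Stage 2: a heavier score applied only to the short list.
    # The real-time view counter and its weight are hypothetical features.
    def score(pid):
        return dot(query, index[pid]) + view_weight * recent_views.get(pid, 0)
    return sorted(candidates, key=score, reverse=True)


index = {
    "lamp_a": [0.9, 0.1],
    "lamp_b": [0.8, 0.3],
    "sofa": [0.1, 0.9],
    "chair": [0.5, 0.5],
}
query = [1.0, 0.0]
short_list = retrieve(query, index, k=3)
print(short_list)  # ['lamp_a', 'lamp_b', 'chair']
print(rerank(query, short_list, index, recent_views={"lamp_b": 4}))  # ['lamp_b', 'lamp_a', 'chair']
```

The design point is cost: the heavy scorer runs on `k` items instead of the entire inventory, so it can afford richer features, while recent activity can still reorder the candidates that retrieval surfaced.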

Building on similarity search and Lumos, we recently launched another feature that recommends products based on listing images. So if a buyer shows interest in a specific lamp that is no longer available, the system can search the database for similar lamps and promptly recommend several that may interest the buyer.
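A minimal sketch of the “similar lamps” case, assuming each listing already has an image embedding (the vectors and listing names are hypothetical): use the unavailable item’s embedding as the query, keep only listings that are still for sale, and return the closest ones by cosine similarity.

```python
import math


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def similar_available(item_id, image_vecs, available, k=2):
    """Up to k available listings whose image embeddings are closest to item_id's."""
    query = image_vecs[item_id]
    candidates = [pid for pid in image_vecs if pid != item_id and pid in available]
    candidates.sort(key=lambda pid: cosine(query, image_vecs[pid]), reverse=True)
    return candidates[:k]


# Hypothetical image embeddings; "vintage_lamp" is a near-duplicate but already sold.
image_vecs = {
    "brass_lamp": [1.0, 0.2, 0.0],
    "vintage_lamp": [1.0, 0.2, 0.0],
    "desk_lamp": [0.9, 0.3, 0.1],
    "floor_lamp": [0.7, 0.1, 0.3],
    "bookshelf": [0.0, 0.2, 1.0],
}
available = {"desk_lamp", "floor_lamp", "bookshelf"}
print(similar_available("brass_lamp", image_vecs, available))  # ['desk_lamp', 'floor_lamp']
```

Filtering on availability before ranking keeps sold items, even identical-looking ones, out of the recommendations.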

Improving AI models at scale

Processing a database of millions of products at the speed an online marketplace requires posed several challenges. We trained the neural network models on 4 million products, using both text and images. To process that volume of images fast enough to extract data from them and “see” each item in order to suggest categories, relevance, and so on in real time, we had to speed things up. We used distributed training across multiple machines: we partitioned the training data and parallelized net execution across replicated workers. Each worker maintained a full copy of the model and periodically synchronized model updates with the others until training converged. This cut training time from more than a week to just 1-2 days.
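The data-parallel scheme described above (partitioned data, full model replicas, periodic synchronization) can be illustrated with a toy problem. This sketch fits a hypothetical linear model `y = 3x + 1`, gives each worker its own shard, lets every replica take a few local SGD steps, and then synchronizes by averaging parameters. Averaging is one common way to synchronize replicas and is an assumption here, not necessarily the exact update rule used for Marketplace; the workers also run sequentially in this sketch, whereas real workers run concurrently on separate machines.

```python
import random


def make_data(n, seed=0):
    # Synthetic data for y = 3x + 1 with a little noise.
    rng = random.Random(seed)
    xs = [rng.uniform(-1, 1) for _ in range(n)]
    return [(x, 3.0 * x + 1.0 + rng.gauss(0, 0.01)) for x in xs]


def sgd_step(params, batch, lr):
    # One mini-batch gradient step on mean squared error.
    w, b = params
    gw = gb = 0.0
    for x, y in batch:
        err = (w * x + b) - y
        gw += 2 * err * x
        gb += 2 * err
    n = len(batch)
    return (w - lr * gw / n, b - lr * gb / n)


def train_data_parallel(data, num_workers=4, rounds=50, local_steps=5, batch=8, lr=0.1):
    # Partition the training data: each worker gets its own shard.
    shards = [data[i::num_workers] for i in range(num_workers)]
    global_params = (0.0, 0.0)
    for r in range(rounds):
        replicas = []
        for wid, shard in enumerate(shards):
            params = global_params  # every replica starts from the synchronized model
            rng = random.Random(r * num_workers + wid)
            for _ in range(local_steps):
                params = sgd_step(params, rng.sample(shard, batch), lr)
            replicas.append(params)
        # Periodic synchronization: average the replicas' parameters.
        global_params = (
            sum(p[0] for p in replicas) / num_workers,
            sum(p[1] for p in replicas) / num_workers,
        )
    return global_params


w, b = train_data_parallel(make_data(400))
print(round(w, 2), round(b, 2))
```

`local_steps` controls how often replicas synchronize: syncing less often saves network traffic, at the cost of letting replicas drift further apart between averages.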

Even after the distributed model was fully trained and deployed, we found that in practice the number of queries per second (QPS) the model could serve still needed to be significantly higher. To increase it, we improved parallelism in the service, raising the number of classification threads from 4 to 12 and then to 20, which increased both QPS and CPU utilization without an undesirable increase in latency. To keep service balanced across nodes, we moved the tier into a shared pool using the new Broadwell Type I servers. This gave us better memory and bandwidth, and eliminated the need for non-uniform memory access (NUMA).
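One simplified way to reason about why adding threads raised QPS and CPU utilization while latency held steady is a back-of-envelope model based on Little’s law: with a fixed per-request latency, each thread completes `1 / latency` requests per second, until the CPU itself becomes the bottleneck. The latency, CPU-time, and core-count figures below are hypothetical, not measurements from the Marketplace service, and the model assumes latency stays constant until the cores saturate.

```python
def max_qps(threads, latency_s, cpu_s_per_request, cores):
    """Upper bound on one host's QPS under a simple model.

    Little's law: concurrency = throughput * latency, so `threads` busy
    threads sustain threads / latency_s requests per second. The CPU caps
    throughput at cores / cpu_s_per_request. The lower bound wins.
    """
    thread_bound = threads / latency_s
    cpu_bound = cores / cpu_s_per_request
    return min(thread_bound, cpu_bound)


def cpu_utilization(qps, cpu_s_per_request, cores):
    return qps * cpu_s_per_request / cores


# Hypothetical host: 50 ms per request, 20 ms of it on-CPU, 16 cores.
for threads in (4, 12, 20):
    qps = max_qps(threads, latency_s=0.050, cpu_s_per_request=0.020, cores=16)
    print(threads, round(qps), round(cpu_utilization(qps, 0.020, 16), 2))
# 4 80 0.1
# 12 240 0.3
# 20 400 0.5
```

Under these assumptions, throughput and CPU utilization grow linearly with thread count until about 40 threads, where the 16 cores saturate; past that point, extra threads would only queue work and start to increase latency.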

With the system utilization data collected during the test runs, we also observed that the service made a high number of system calls, which indicated that jemalloc was purging memory too aggressively, so we changed the following setting in our service tier config:

```
env_variables={
    # A decay time of -1 disables jemalloc's time-based purging of unused dirty pages.
    'MALLOC_CONF': 'opt.decay_time:-1',
},
```

By reducing CPU utilization on memory purging, we were able to serve more categorization requests per host and lower the total number of machines needed for the service.

Simplifying the process for sellers

We are also using AI to improve the seller experience. One feature automatically enhances the lighting of photos as the seller uploads them, making it easier for buyers to see what’s pictured. And as the listing is being created, the system compares it with similar products in the database and offers pricing and category suggestions. This autosuggest model runs on each of the millions of for-sale listings on Marketplace and, at post time, classifies each listing into one of 29 top-level categories, giving users the option to keep or change the suggested category.
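Both suggestions can be sketched with simple building blocks, under stated assumptions. For the category, the sketch takes a classifier’s raw scores (logits), applies a softmax, and proposes the most probable category, which the seller remains free to change. For the price, it takes the median price of the `k` most similar existing listings; that nearest-neighbor-median rule, the category labels, the vectors, and the prices are all hypothetical illustrations rather than the production method.

```python
import math
import statistics


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def suggest_category(logits, categories):
    """Most probable top-level category and its probability; the seller can override it."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return categories[best], probs[best]


def suggest_price(listing_vec, comparables, k=3):
    """Median price of the k comparable listings closest in embedding space."""
    def sq_dist(vec):
        return sum((a - b) ** 2 for a, b in zip(listing_vec, vec))
    nearest = sorted(comparables, key=lambda c: sq_dist(c[0]))[:k]
    return statistics.median(price for _, price in nearest)


# Three hypothetical labels standing in for the 29 top-level categories.
categories = ["Furniture", "Vehicles", "Electronics"]
label, p = suggest_category([2.0, 0.1, 0.5], categories)
print(label, round(p, 2))  # Furniture 0.73

# (embedding, price) pairs for existing listings; values are made up.
comparables = [([0.9, 0.1], 120), ([0.8, 0.2], 100), ([0.85, 0.15], 150), ([0.1, 0.9], 40)]
print(suggest_price([0.88, 0.12], comparables))  # 120
```

Using the median rather than the mean keeps one unusually cheap or expensive comparable from dragging the suggested price too far.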

Before the autosuggest feature for categories was enabled, friction was high: 7 to 9 percent of sellers abandoned the process without completing the listing, and the seller-selected categories were sometimes less effective than those recommended by our model.

With this architecture in place, the system could someday autosuggest the most effective listing title based on comparable products, automatically enhance a photo’s resolution to reduce blurriness, or even warn a seller when it detects a title that doesn’t match the image.

Video walkthrough of the seller experience on Marketplace.

Enriching buyer and seller interactions

Messenger allows buyers and sellers to communicate about a product directly. When chatting in Messenger, M, an automated assistant powered by AI, makes suggestions to help people communicate and get things done. For example, in a 1:1 conversation, when a seller is asked a question such as “Is the bicycle still available?” M can suggest replies like “Yes,” “No,” or “I think so.”

If the answer is no, meaning the product is no longer available, M may prompt the buyer with a suggestion to “Find more like this on Marketplace,” with a link to a page of items similar to the one the buyer asked about. This list is compiled using our similarity search functionality.

If M detects that a person received a message in a language that is different from his or her default language, it will offer to translate this message. Messages are translated using our neural machine translation platform, the same technology that translates posts and comments on Facebook and Instagram.

All these suggestions are automatically generated by an AI system that was trained via machine learning to recognize intent. We use different neural net models to determine the intent and whether to show a suggestion. We also use entity extractors like Duckling to identify things like dates, times, and locations. For some suggestions, we use additional neural net models to rank the candidates (such as translations, stickers, or replies) so that the most relevant options are surfaced. M also learns and becomes more personalized over time: if you interact with one suggestion more regularly, M might surface it more often, and if you use another less (or not at all), M will adapt accordingly. This entire process is automated.
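The personalization behavior described above can be illustrated with a toy ranker. It assumes each suggestion type already has a relevance score from an intent model, multiplies that score by the user’s smoothed click-through rate for the suggestion type, and hides anything below a threshold. The smoothing prior, the threshold, and the multiplicative combination are hypothetical choices for this sketch, not how M actually weighs its signals.

```python
from dataclasses import dataclass, field


@dataclass
class SuggestionRanker:
    """Model relevance score x per-user engagement rate (illustrative values only)."""
    show_threshold: float = 0.3   # hypothetical cut-off for showing a suggestion
    prior_clicks: float = 1.0     # smoothing prior: unseen types start at 1 / 2 = 0.5
    prior_shows: float = 2.0
    shows: dict = field(default_factory=dict)
    clicks: dict = field(default_factory=dict)

    def engagement(self, kind):
        # Smoothed click-through rate for this suggestion type.
        return (self.clicks.get(kind, 0) + self.prior_clicks) / (
            self.shows.get(kind, 0) + self.prior_shows)

    def rank(self, model_scores):
        scored = {k: s * self.engagement(k) for k, s in model_scores.items()}
        ordered = sorted(scored.items(), key=lambda kv: kv[1], reverse=True)
        return [k for k, v in ordered if v >= self.show_threshold]

    def record(self, kind, clicked):
        self.shows[kind] = self.shows.get(kind, 0) + 1
        if clicked:
            self.clicks[kind] = self.clicks.get(kind, 0) + 1


# Hypothetical intent-model scores for one incoming message.
scores = {"reply": 0.9, "translate": 0.8, "sticker": 0.7}
ranker = SuggestionRanker()
print(ranker.rank(scores))  # ['reply', 'translate', 'sticker']

# This user keeps ignoring reply suggestions and keeps using stickers.
for _ in range(5):
    ranker.record("reply", clicked=False)
    ranker.record("sticker", clicked=True)
print(ranker.rank(scores))  # ['sticker', 'translate']
```

Smoothing with a prior keeps a single ignored suggestion from hiding a suggestion type forever, while repeated behavior still shifts the ranking.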

Video walkthrough of the buyer experience on Marketplace.

Expanding on the AI foundation

Armed with a better product index, a faster retrieval system, and the ability to understand context and relevance, Marketplace is able to serve both buyers and sellers in a more seamless way. But this is not the end of the road.

We are currently testing a new feature that lets buyers take a photo of something out in the real world, such as headphones similar to those they saw in a store or a bag a friend is carrying, and search for visually similar products on Marketplace. Eventually, our computer vision platform could be used to recommend products that go well with an item that was just viewed, for example, an end table that complements a sofa. AI may not perfectly understand nuanced style preferences, but with sufficient contextual and visual understanding, it could help point us in the right direction.

Visit Facebook.com/Marketplace to see all of these features in action.