The Facebook apps for Android and iOS are used by billions of people across the world. We have ambitious goals around delivering a delightful experience for people using Facebook and a strong belief that responsiveness and smoothness are keystones of a high-quality product experience. Together, these mean that, among other things, we need to quickly and efficiently investigate performance problems.

Monitoring

Every engineering team at Facebook is encouraged to monitor app performance using tools and frameworks shared across our family of apps. In practice, this means that with a few lines of code, a team can start collecting telemetry from a new feature and immediately benefit from centralized reporting, data pipeline management, and tools for working with aggregate data.

The ability to monitor for regressions and explore changes over time has been an important part of our mobile performance efforts. When we first built tools that let us view performance changes over time, we found many low-hanging opportunities: some came from new insight into how our app behaved in practice, and others were simply “easy” issues. But those tools also showed us how much we didn’t know about performance tracking: anything that wasn’t immediately obvious was incredibly hard to investigate. We often knew that an overall metric had regressed, but we couldn’t pinpoint why.

Further, the wide variation in device conditions and system behavior that we see “out in the wild,” in real-world deployments, made app performance investigations one of our most difficult and time-consuming engineering challenges.

Early on, regression management followed a simple workflow. When we discovered a regression in our beta or production releases, we would immediately look for configuration or experiment changes that could explain it. Not every regression could be addressed with server-driven configuration, however, and for the rest we would dive deep into our monitoring telemetry. Unfortunately, that telemetry was often insufficient to diagnose the harder issues, because we collected only durations alongside a few “proxy metrics” (e.g., CPU time). This usually left us with a single path forward: reproduce the regression locally with profilers (Systrace and other local tools) and isolate the root cause there.

This last step is where many investigations stalled, particularly for outlier regressions in which only a small number of devices experienced a severe slowdown. These cases couldn’t be easily replicated in our mobile device lab, which was designed to replicate more mainstream experiences. This inability to reproduce the state of the device and the system locally led to long turnaround times and an exhausting search through a stream of code and configuration changes.

We soon realized that in order to increase our ability to diagnose issues and find opportunities for performance improvements, we needed to build a dedicated tool that could better gather and analyze much more detailed telemetry from the app as these slower interactions took place.

Profilo

The tool we ultimately built is called Profilo. Profilo is a high-throughput, mobile-first performance tracing library. Today we’re happy to announce the open source release of Profilo, starting with the Android library. We hope that by sharing our methodology and tools, we can enable more mobile engineers to work on production performance traces effectively and together build better workflows and analysis systems.

Profilo gives us a foundation to efficiently manage multiple streams of data, coming from different sources and measuring different aspects of the app, so that we can reconstruct important parts of the app and system state during any measured run of an interaction. The focus on high throughput lets us collect telemetry at rates of 3,000 events per second or more, with minimal disruption or distortion of the interaction being measured.
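Profilo’s internal buffer design is not described here, so the following is only a minimal Python sketch of one common way to get high-throughput, bounded-overhead event collection: a preallocated ring buffer of fixed-shape entries, where recording an event is a constant-time slot write and the oldest events are overwritten once the buffer is full. `TraceEvent` and `RingBuffer` are hypothetical names; a production tracer would implement this natively and make it safe for concurrent writers, which this single-threaded sketch does not attempt.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class TraceEvent:
    """Hypothetical fixed-shape trace entry: integers only, no per-event strings."""

    timestamp_ns: int
    thread_id: int
    event_type: int  # small integer code (illustrative), e.g. 1 = marker start
    arg: int         # integer payload, e.g. an id for an interned string


class RingBuffer:
    """Fixed-capacity buffer: once full, each new event overwrites the oldest."""

    def __init__(self, capacity: int) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self._capacity = capacity
        self._slots: List[Optional[TraceEvent]] = [None] * capacity
        self._written = 0  # total number of events ever written

    def write(self, event: TraceEvent) -> None:
        # Constant time: the slot index wraps around the preallocated list.
        self._slots[self._written % self._capacity] = event
        self._written += 1

    def snapshot(self) -> List[TraceEvent]:
        """Return the retained events, oldest first."""
        count = min(self._written, self._capacity)
        start = self._written - count
        return [self._slots[i % self._capacity] for i in range(start, self._written)]

    @property
    def dropped(self) -> int:
        """Number of events that were overwritten before being read."""
        return max(0, self._written - self._capacity)
```

For example, after six events are written into a buffer of capacity 4, `snapshot()` returns the last four in order and `dropped` reports 2. Bounding memory this way trades completeness for predictable overhead, which matters when tracing at thousands of events per second.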

It also provides a powerful configuration system that we use to enable telemetry collection on specific devices without burdening every user’s app. Because teams can change trace configuration remotely, we have enabled key workflows such as increasing sampling rates for suspicious A/B tests on demand, turning on higher-overhead data providers when debugging regressions, and generally fine-tuning trace volume and contents as our needs evolve.
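The article does not describe Profilo’s configuration format. As an illustration only, the sketch below assumes a hypothetical `TraceConfig` pushed from a server, with a per-interaction “trace 1 in N runs” rate and a set of data providers to enable when a run is traced. Hashing the config version, interaction, device ID, and run ID gives a stable, roughly uniform decision, so increasing coverage for an interaction under suspicion amounts to shipping a new config with a smaller N.

```python
import hashlib
from dataclasses import dataclass
from typing import FrozenSet, Mapping


@dataclass(frozen=True)
class TraceConfig:
    """Hypothetical remotely delivered trace configuration (not Profilo's format)."""

    version: int
    # Trace roughly 1 in N runs of each interaction; 0 or a missing entry disables it.
    sample_rates: Mapping[str, int]
    # Data providers to enable when a run is traced, e.g. {"stack_traces"}.
    providers: FrozenSet[str] = frozenset()


def should_trace(config: TraceConfig, interaction: str, device_id: str, run_id: int) -> bool:
    """Deterministically decide whether this run of `interaction` gets traced."""
    rate = config.sample_rates.get(interaction, 0)
    if rate <= 0:
        return False
    # Hash all inputs so the decision is stable for a given run but spread
    # uniformly across devices and runs; bumping `version` reshuffles it.
    key = f"{config.version}:{interaction}:{device_id}:{run_id}".encode("utf-8")
    bucket = int.from_bytes(hashlib.sha256(key).digest()[:8], "big")
    return bucket % rate == 0


def active_providers(config: TraceConfig, interaction: str, device_id: str, run_id: int) -> FrozenSet[str]:
    """Providers to turn on for this run: none unless the run is sampled."""
    if should_trace(config, interaction, device_id, run_id):
        return config.providers
    return frozenset()
```

Keeping the decision deterministic makes it reproducible during analysis, and keeping providers in the same config means a higher-overhead provider can be switched on only for the small slice of runs that are already being traced.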

Profilo vastly improves the turnaround time on performance regressions by giving us the precision to understand the root cause of regressions as small as tens of milliseconds of CPU time. By collecting rich streams of telemetry, it also enables new types of causality analysis, as well as a much more precise understanding of metrics such as “scroll fluidity” and “app responsiveness.”

Before Profilo, cold app startup was a very difficult metric to understand and maintain. While we had a pretty good idea of how long different subsections of startup took (along with a solid set of secondary, related metrics), regressions took a long time to root-cause and triage, and often remained unsolved for months. With the rich telemetry from traces, we were able to build tooling that aggregates and compares CPU stack traces from release to release, cutting root-cause analysis for that type of regression from days to less than an hour. Other telemetry streams let us tackle other common types of regressions, such as more code being loaded or slow paths being taken more often.
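The release-to-release comparison described above could be approximated, under simple assumptions, by turning sampled stacks into estimated inclusive CPU time per frame and ranking the frames whose time grew the most. This Python sketch assumes each sample is a root-first tuple of frame names taken at a fixed interval of CPU time; `inclusive_ms_per_trace` and `diff_releases` are hypothetical helpers, not Profilo tooling.

```python
from collections import Counter
from typing import Dict, Iterable, List, Mapping, Tuple

Stack = Tuple[str, ...]  # frame names, root first (hypothetical sample format)


def inclusive_ms_per_trace(stacks: Iterable[Stack], num_traces: int, interval_ms: float) -> Dict[str, float]:
    """Estimate inclusive CPU ms per trace for every frame seen in the samples."""
    counts: Counter = Counter()
    for stack in stacks:
        # Count a frame once per sample, so recursive frames are not double counted.
        counts.update(set(stack))
    # Each sample stands for roughly `interval_ms` of CPU time.
    return {frame: n * interval_ms / num_traces for frame, n in counts.items()}


def diff_releases(before: Mapping[str, float], after: Mapping[str, float], top: int = 10) -> List[Tuple[str, float]]:
    """Frames ranked by increase in inclusive ms per trace, largest regression first."""
    frames = set(before) | set(after)
    deltas = [(frame, after.get(frame, 0.0) - before.get(frame, 0.0)) for frame in frames]
    # Sort by descending delta, breaking ties by name for a stable report.
    deltas.sort(key=lambda item: (-item[1], item[0]))
    return deltas[:top]
```

A real pipeline would also need to account for differences in sampling coverage and trace mix between releases; this sketch only normalizes by the number of traces.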

One of Profilo’s unique features is its custom Java stack unwinder. We believe it is the first among Android performance libraries to understand internal VM structures and collect stack traces without using the official Java APIs, thereby overcoming many of the well-documented issues with suspension-based stack unwinding (see “Evaluating the Accuracy of Java Profilers” for past research on this topic). By implementing unwinding within Profilo itself, we sacrifice compatibility, but we gain the ability to use a much more efficient stack trace representation that does not store class or function names in the trace data itself. We also gain the ability to collect true CPU usage stack traces, that is, stack traces sampled according to the “CPU time” clock rather than the usual wall-clock time.
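The article does not document Profilo’s stack trace format. As a hypothetical illustration of why leaving names out of the trace helps, the sketch below packs each sample as a timestamp, a depth, and one fixed-width integer per frame (standing in for whatever method identifier an unwinder reads from VM structures), and resolves names only in an offline `symbolize` step using a mapping collected separately. The byte layout and all names here are assumptions, and the sketch does not cover the CPU-time sampling itself.

```python
import struct
from typing import Dict, List, Sequence, Tuple

MethodId = int  # hypothetical opaque id, e.g. a method address read from the VM
Sample = Tuple[int, Tuple[MethodId, ...]]  # (timestamp_ns, root-first method ids)

_HEADER = struct.Struct("<QH")  # timestamp (u64) + stack depth (u16), no padding


def pack_sample(timestamp_ns: int, method_ids: Sequence[MethodId]) -> bytes:
    """Encode one sample as fixed-width integers: no class or method names."""
    return struct.pack(f"<QH{len(method_ids)}Q", timestamp_ns, len(method_ids), *method_ids)


def unpack_samples(blob: bytes) -> List[Sample]:
    """Decode a concatenation of packed samples."""
    samples: List[Sample] = []
    offset = 0
    while offset < len(blob):
        timestamp_ns, depth = _HEADER.unpack_from(blob, offset)
        offset += _HEADER.size
        ids = struct.unpack_from(f"<{depth}Q", blob, offset)
        offset += 8 * depth  # 8 bytes per frame id
        samples.append((timestamp_ns, ids))
    return samples


def symbolize(samples: List[Sample], symbols: Dict[MethodId, str]) -> List[Tuple[int, List[str]]]:
    """Offline step: map ids to names using a table collected once, outside the samples."""
    return [(ts, [symbols.get(m, f"<unknown {m:#x}>") for m in ids]) for ts, ids in samples]
```

Each frame costs the same 8 bytes no matter how long its class and method names are, and the name table is paid for once per trace rather than once per sample.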

To better understand what the virtual machine and Android frameworks do on our behalf, we also developed ways to capture “systrace” telemetry (more precisely, our apps’ “atrace” usage) in production. This allows us to investigate garbage collection and other VM-specific events and their impact on our apps. For example, we’ve been able to find the causes of unintentional I/O on the UI thread and of rare lock contention in critical parts of the application, and to tackle app smoothness issues by identifying the exact boundaries of each frame and analyzing stack traces and other instrumentation on a per-frame basis.
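Per-frame analysis can be sketched as attributing each timestamped stack sample to the frame interval that contains it, then looking only at frames that overran their budget. The sketch below assumes frame boundaries have already been extracted from atrace sections (for example, Android’s `Choreographer#doFrame` sections), that frames on one thread do not overlap, that stacks are root-first, and a 60 Hz budget of about 16.7 ms; `slow_frames` and `FrameReport` are hypothetical names.

```python
import bisect
from collections import Counter
from dataclasses import dataclass, field
from typing import List, Sequence, Tuple

FRAME_BUDGET_NS = 16_666_667  # assumed 60 Hz display; real refresh rates vary


@dataclass
class FrameReport:
    start_ns: int
    end_ns: int
    # How often each leaf (innermost) frame was sampled during this UI frame.
    leaf_counts: Counter = field(default_factory=Counter)

    @property
    def duration_ns(self) -> int:
        return self.end_ns - self.start_ns


def slow_frames(
    frames: Sequence[Tuple[int, int]],
    samples: Sequence[Tuple[int, Tuple[str, ...]]],
    budget_ns: int = FRAME_BUDGET_NS,
) -> List[FrameReport]:
    """Attribute each stack sample to the frame containing it; return frames over budget."""
    ordered = sorted(frames)
    starts = [start for start, _ in ordered]
    reports = [FrameReport(start, end) for start, end in ordered]
    for ts, stack in samples:
        # Latest frame starting at or before the sample; skip samples between frames.
        i = bisect.bisect_right(starts, ts) - 1
        if i >= 0 and ts < reports[i].end_ns and stack:
            reports[i].leaf_counts[stack[-1]] += 1
    return [report for report in reports if report.duration_ns > budget_ns]
```

Looking at leaf counts only within janky frames keeps work that happens between frames, or in frames that met their deadline, from diluting the picture.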

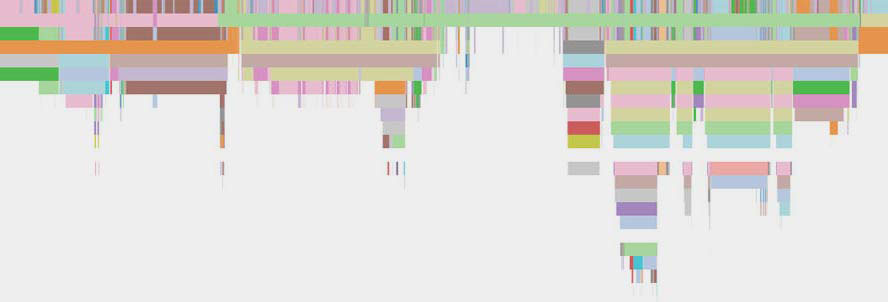

In the image above we can see aggregate changes in the types of garbage collection performed during an interaction. The change is mostly in frequency, not duration, but on average results in a ~15ms shift from “partial” to “sticky” collection.
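Whether a change in GC cost comes from frequency or from duration can be separated with a simple identity: average GC time per interaction equals collections per interaction times mean time per collection. The Python sketch below computes all three per GC type from hypothetical per-trace lists of `(type, duration_ms)` events. ART’s collector types include “sticky” and “partial” collections, but the event format and the `gc_breakdown` helper are assumptions, not Profilo’s trace schema.

```python
from collections import defaultdict
from typing import Dict, Sequence, Tuple

GcEvent = Tuple[str, float]  # (collection type, duration in ms), hypothetical format


def gc_breakdown(traces: Sequence[Sequence[GcEvent]]) -> Dict[str, Tuple[float, float, float]]:
    """Per GC type: (collections per trace, mean ms per collection, ms per trace)."""
    counts: Dict[str, int] = defaultdict(int)
    totals: Dict[str, float] = defaultdict(float)
    for events in traces:
        for gc_type, duration_ms in events:
            counts[gc_type] += 1
            totals[gc_type] += duration_ms
    n = len(traces)
    # ms per trace == (collections per trace) * (mean ms per collection).
    return {
        gc_type: (counts[gc_type] / n, totals[gc_type] / counts[gc_type], totals[gc_type] / n)
        for gc_type in counts
    }
```

Comparing the output for two cohorts shows directly whether a shift in per-trace GC time comes from the first component (how often a collection type runs) or the second (how long each one takes).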

These and other telemetry streams have changed how we think about regression detection and performance analysis for our mobile apps. We’ve created in-depth telemetry to explain a large chunk of our resource usage (CPU time), gained a much deeper understanding of our relationship with the VM, and enabled new, more intricate analyses of hard performance metrics like “app responsiveness.”

As part of the open source release, we’re also releasing basic tooling to work with traces and some example analyses to give you a feel for the workflow. We’re very excited to see where we can take these ideas and tools with the help of the community and make mobile performance a much more tractable problem.