Building infrastructure that can easily recover from outages, particularly outages involving adjacent infrastructure, too often becomes a murky exploration of nuanced fate-sharing between systems. Untangling dependencies and uncovering side effects of unavailability has historically been time-consuming work.

The lack of tooling built for this work, together with the rarity of infrastructure outages, makes reasoning about them difficult. How far will an outage extend? Which of the available mitigations can get things back online? Bootstrapping blockers and circular dependencies present real concerns we cannot put to bed with theory or design documents alone. The lower in the stack we go, the broader the impact of such outages could be. Purpose-built tooling is important for safely experimenting with relationships between systems low in the stack.

BellJar is a new framework we’ve developed at Meta to strip away the mystery and subtlety that has plagued efforts to build recoverable infrastructure. It gives us a simple way to examine how layers in our stack recover from the worst outages imaginable. BellJar has become a powerful, flexible tool we can use to prove that our infrastructure code works the way we expect it to. None of us wants to exercise the recovery strategies, worst-case constrained behaviors, or emergency tooling that BellJar helps us curate. At the same time, careful preparation like this has helped us make our systems more resilient.

Pragmatic coupling between systems: curation over isolation

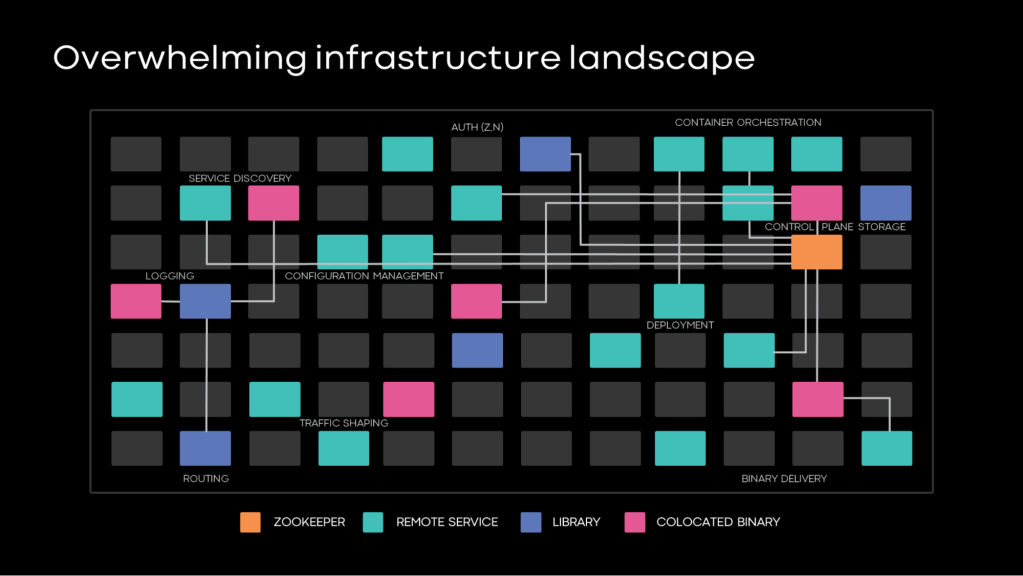

With an infrastructure at Meta’s scale, where software may span dozens of data centers and millions of machines, tasks that would be trivial on a small number of servers (such as changing a configuration value) simply cannot be performed manually. The layers of infrastructure we build abstract away many of the difficult parts of running software at scale so that application developers can focus on their system’s unique offerings. And reusing this common infrastructure solves these problems once, universally, for all our service owners.

However, the reach of these foundational systems also means that outages could potentially impact a wide swath of other software. Herein lies the trade-off between dependency and easy disaster recovery that infra engineers have to consider. The thinking goes that if you build code that doesn’t need any external systems, then recovery from all sorts of failures should be quick.

But with time and growth, the practicalities of large-scale production environments bear down on this simplistic model. All this infrastructure amounts to regular software. Like the services it powers, it needs to run somewhere, receive upgrades, scale up or down, expose configuration knobs, store durable state, and communicate with other services. Rebuilding each of these capabilities to ensure perfect isolation for each infrastructure system gets impractical. In many cases, leaning on shared infrastructure to solve these problems rather than reinventing the wheel makes sense. This is especially true at scale, where edge cases can be difficult to get right. We don’t want every infrastructure team to build their own entire support stack from scratch.

Yet without a solid understanding of how our systems connect, we can find ourselves in one of two traps: building duplicative support systems in pursuit of overkill decoupling, or allowing our systems to become intertwined in ways that jeopardize their resiliency. Without good visibility and rigor, systems can experience a mix of both as engineers address the low-criticality dependencies they know about. This can create undetected, and therefore unaddressed, circular dependencies.

Without structured, rigorous controls in place, a mishmash of uncurated relationships between systems tends to grow unchecked. This then creates coupling between systems and complexity that can prove difficult to reverse.

Recoverability as the design constraint

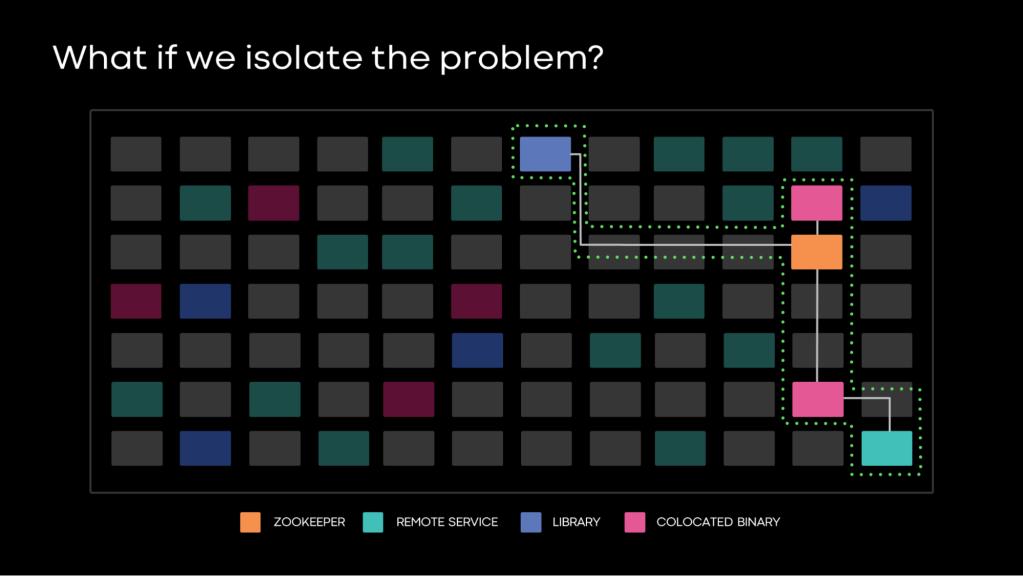

So how do we avoid senseless duplication while limiting the coupling between systems that can present real operational problems? By focusing on one clear constraint: recoverability. This is the requirement that whenever outages strike our infrastructure systems, we have strong confidence that our infrastructure can return to a healthy state in a short amount of time.

But to analyze cross-system relationships through the lens of recoverability, we need tooling that exposes, tests, and catalogs the relationships buried in our millions of lines of code. BellJar is a framework for exercising infrastructure code under worst-case conditions to uncover the relationships that matter during recovery.

Outages can manifest in many forms. For our purposes, we like to focus on a broad, prototypical outage that presents a categorical failure of common infrastructure systems. Using recoverability as the objective allows us to bring tractability to the problem of curated coupling in several ways:

- Practicality: Recoverability provides an alternative to the impractical, absolutist position that requires complete isolation between all infra systems. If some coupling between two systems does not interfere with recovery capabilities of our infrastructure, such coupling may be acceptable.

- Characterization: Recoverability lets us focus on a specific type of intersystem relationship — the relationships required to get a system up to minimally good health. Doing so shrinks the universe of cross-system connections that we need to reason about.

- Scope: Rather than attempt to optimize the dependency graph for our entire infrastructure at once, we can zoom in on individual systems. Each system, considered together with only its adjacent supporting software, becomes a building block of recovery.

- Additive: A common approach to decoupling systems asks, “What happens to system Foo if we take away access to system Bar?” However, when your production environment contains thousands of possible dependencies, even enumerating all such test permutations can become impossible. By contrast, a recovery analysis asks the inverse question: “What is everything Foo needs to reach a minimally healthy state?”

- Testability: Recovery scenarios offer real, verifiable conditions we can assert programmatically. While outages manifest in many shapes and sizes, in each case, recovery is a Boolean result — either recovery is successful or it isn’t.
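
To give a sense of scale for the subtractive question in the "Additive" point above, here is a rough count. It assumes, purely for illustration, that a production environment has 1,000 possible dependencies and that each one is simply available or unavailable in a given test. The figure of 1,000 is a round stand-in for "thousands", not a measured number.

```python
# Illustrative arithmetic only: the dependency count is an assumed round number.
dependency_count = 1_000

# Subtractive testing: every subset of dependencies that could be taken away
# is a distinct scenario, so there are 2 ** n scenarios to enumerate.
subtractive_scenarios = 2 ** dependency_count

# Count the decimal digits to show how large that number is.
digits = len(str(subtractive_scenarios))
print(f"2**{dependency_count} has {digits} decimal digits")
```

Under that assumption the number of subtractive scenarios has 302 decimal digits, which is why asking the additive question once per system is the tractable route.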

Examining system recoveries with a total outage in a vacuum

BellJar presents infra teams with an experimentation environment that is indistinguishable from production, with one big difference: it is vacuum-sealed away from all production systems by default. Such an environment renders the remote systems and local processes that satisfy even basic functionality (configuration, service discovery, request routing, database access, key/value storage systems) unavailable. In this way, the environment presents hardware in a state we’d find during a total outage of Meta infrastructure. Everything but basic operating system functionality is broken.

To produce this environment, we lean heavily on our virtualization infrastructure and fault injection systems. Each instance of a BellJar environment is ephemeral, composed of a set of virtual machines (VMs) that constantly rotate through a lease-wipe-update cycle. Before we hand the machines to a BellJar user, we inject an enormously disruptive blanket of faults that blackhole traffic over all network interfaces, including loopback. Our fault injection engine affords us allowlist-style disruptions we can stack against VMs. They disrupt our systems at the IP layer, at the application’s network layer, or on a per-process or container basis.

BellJar’s API gives operators the ability to customize which production capabilities they want to leak back into this vacuum through an additive allowlisting scheme. Need DNS? We’ll allow it. Need access to a specific storage cluster? Just tell BellJar the name of the cluster. Want access to the read-only pathway for a certain binary package backend? You got it.

Through granular, checkbox-style allowlisting, the operator can customize the limited set of remote endpoints, local daemons, and libraries they want to earmark as “healthy.” The resulting environment presents a severe outage scenario per the operator’s choosing. But within the environment, the operator can describe and test their own system’s minimally viable recovery requirements and startup procedure, from empty hardware to healthy recovery.
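
The additive, deny-by-default idea described above can be sketched in a few lines of Python. This is not BellJar's API, which is not shown in this article. The `Capability` and `Allowlist` names, the `kind` labels, and the cluster name are all hypothetical, chosen only to illustrate that nothing is reachable until the operator explicitly adds it.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Capability:
    """One production capability an operator leaks back into the vacuum (hypothetical model)."""
    kind: str    # illustrative label, e.g. "dns" or "storage_cluster"
    target: str  # a specific name, or "*" for any target of this kind


@dataclass
class Allowlist:
    """Deny by default: anything not explicitly added is treated as broken."""
    allowed: set = field(default_factory=set)

    def allow(self, kind: str, target: str = "*") -> "Allowlist":
        self.allowed.add(Capability(kind, target))
        return self  # returning self lets calls be chained

    def permits(self, kind: str, target: str) -> bool:
        return (
            Capability(kind, target) in self.allowed
            or Capability(kind, "*") in self.allowed
        )


# Hypothetical scenario: DNS is healthy, and so is one named storage cluster.
allowlist = Allowlist().allow("dns").allow("storage_cluster", "cluster-a")

print(allowlist.permits("dns", "any-resolver"))            # allowed via the "*" entry
print(allowlist.permits("storage_cluster", "cluster-b"))   # never added, so denied
```

The design point is that the default answer is "no": an endpoint the operator has never heard of is unavailable without anyone having to name it.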

Making each recovery from a unique recipe

This approach reveals several unique ingredients in each BellJar test. Often, teams will define a set of different recovery scenarios for a single piece of infrastructure. Each describes its own combination of the following:

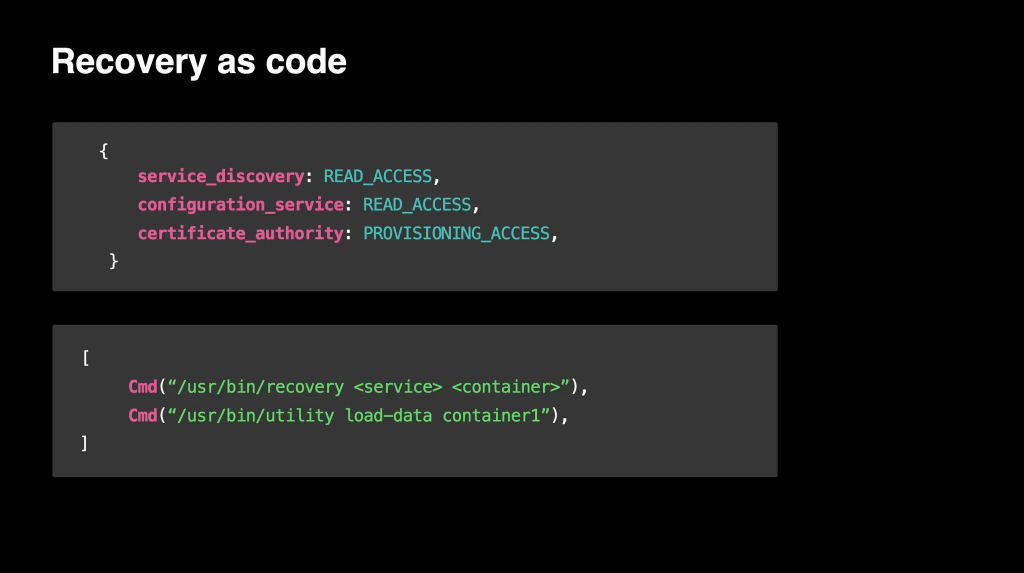

- Service under test: This might be a container scheduler service, a fleetwide config distribution system, a low-dependency coordination back end, a certificate authority, or any number of other infrastructure components we wish to inspect. Most, but not all, of these services run in containers.

- Hardware: Different systems run on different hardware setups. We compose the test environment accordingly.

- Recovery strategy: Each system has a recovery runbook that its operators expect to follow to bring up the system from scratch on fresh hardware.

- Validation criteria: The criteria that indicate whether a recovery strategy has produced a healthy system.

- Tooling: The steps of those recovery runbooks likely involve system-specific tooling that operators will reach for during outage mitigation.

- Recovery conditions: Most systems require something within our infrastructure to be healthy before they can recover. An allowlist captures this set of assumptions, enumerating each production capability the recovery environment must provide before we can expect it to succeed.

All these ingredients can be assembled to define a single BellJar test, which the service owner writes with a few lines of Python.
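
As a rough picture of how the ingredients listed above might fit together, the sketch below models one test as a plain Python data structure. The actual BellJar test API is not shown in this article, so the `RecoveryTest` class, its field names, the service name, and the shell commands are all invented for illustration.

```python
from dataclasses import dataclass
from typing import Callable, FrozenSet, Mapping, Tuple


@dataclass(frozen=True)
class RecoveryTest:
    """Hypothetical container for the ingredients of a single recovery test."""
    service: str                               # service under test
    hardware: str                              # hardware setup to compose
    runbook: Tuple[str, ...]                   # recovery strategy, including tooling commands
    validate: Callable[[Mapping], bool]        # validation criteria over a status report
    recovery_conditions: FrozenSet[str]        # allowlist of capabilities assumed healthy


# Every name and command below is a placeholder, not a real tool or service.
example_test = RecoveryTest(
    service="example-config-distributor",
    hardware="example-compute-profile",
    runbook=(
        "emergency-fetch --package example-config-distributor",
        "emergency-container start example-config-distributor",
    ),
    validate=lambda status: status.get("healthy_replicas", 0) >= 1,
    recovery_conditions=frozenset({"dns", "package_backend_readonly"}),
)

print(example_test.validate({"healthy_replicas": 3}))
```

Keeping the validation criteria as a function of a status report, rather than a hard-coded check, is one way to let different recovery scenarios for the same service share a runbook while asserting different outcomes.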

Code conquers lore

Since we can define each of the above inputs programmatically, we can code a fully repeatable test that distills all the assertions a service’s operators want to prove about its ability to recover from failure.

Prior to BellJar, we had to scour wiki documentation, interview a team’s seasoned engineers, and spelunk through commit histories, all to understand whether System A or System B needed to be recovered first if both were brought offline. Exposing recovery-blocking cyclic dependencies often relied on mining through a team’s tribal knowledge, outdated design documents, or code in multiple programming languages.

Now BellJar tests the operators’ assumptions, with a Boolean result. True means the service can be recovered with the runbook and tooling you defined, under any outage in which at least the test’s recovery conditions are met. And we can deploy a service’s BellJar test in its CI/CD pipeline to assert recoverability with every new release candidate before it reaches production.
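
The Boolean nature of the result can be illustrated with a small runner. This is a sketch under stated assumptions, not BellJar's implementation: runbook steps are modeled as Python callables rather than shell commands, and the `recovery_passes` function and the toy in-memory "environment" are hypothetical.

```python
from typing import Callable, Iterable


def recovery_passes(
    steps: Iterable[Callable[[], None]],
    is_healthy: Callable[[], bool],
) -> bool:
    """Run each runbook step in order, then apply the validation criteria."""
    try:
        for step in steps:
            step()
    except Exception:
        # Any failing step means the runbook did not work in this environment.
        return False
    return bool(is_healthy())


# Toy environment: a dict standing in for the state of an empty machine.
state = {"binary_installed": False, "service_running": False}


def install_binary() -> None:
    state["binary_installed"] = True


def start_service() -> None:
    if not state["binary_installed"]:
        raise RuntimeError("cannot start: binary missing")
    state["service_running"] = True


good_run = recovery_passes([install_binary, start_service], lambda: state["service_running"])

# Reset and run the steps in the wrong order to show a failing result.
state.update(binary_installed=False, service_running=False)
bad_run = recovery_passes([start_service, install_binary], lambda: state["service_running"])

print(good_run, bad_run)
```

In a release pipeline, a result like this would typically be turned into a process exit code so that a `False` blocks the release candidate.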

For the individual service owner, the ability to generate a codified, repeatable recovery test opens doors to two exciting prospects:

- Move faster more safely: If you’ve ever been reluctant to change an arcane setting, retry policy, or failover mechanism, it may have been because you’re unsure how it impacts your disaster recovery posture. Unsure whether you can safely use a library’s new feature without jeopardizing your service’s “low dependency” status? BellJar makes changes like these a no-brainer. Just make the change and post the diff. The automated BellJar test will confirm whether your recovery assertions still hold.

- Actively reduce coupling: Many service owners discover surprising dependencies they didn’t know existed when they onboard to BellJar, so we’ve learned to onboard new systems gradually. First, the service owner defines their recovery strategy in an environment with all production capabilities available. Then we gradually pare down the recovery conditions allowlist to the bare minimum set of dependencies required for successful results. Through an iterative approach like this, teams uncover cross-system dependency liabilities. They then work to iteratively eliminate those that pose circular bootstrapping risks.
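
The gradual paring down described above resembles a greedy minimization loop. The sketch below is one way to express it, assuming a hypothetical `recovers` callback that runs the recovery test under a given allowlist and reports success. It also assumes that adding capabilities never breaks a recovery. The capability names are illustrative.

```python
from typing import Callable, FrozenSet, Iterable


def pare_down(
    full: Iterable[str],
    recovers: Callable[[FrozenSet[str]], bool],
) -> FrozenSet[str]:
    """Drop capabilities one at a time, keeping each removal that still recovers."""
    current = frozenset(full)
    if not recovers(current):
        raise ValueError("recovery fails even with every capability available")
    for capability in sorted(current):
        candidate = current - {capability}
        if recovers(candidate):
            current = candidate  # this capability was not needed for recovery
    return current


# Simulated service: it truly needs only these two capabilities to recover.
truly_needed = frozenset({"dns", "package_backend_readonly"})


def simulated_recovers(allowed: FrozenSet[str]) -> bool:
    return truly_needed <= allowed


everything = {"dns", "package_backend_readonly", "config", "service_discovery", "web_frontend"}
minimal = pare_down(everything, simulated_recovers)
print(sorted(minimal))
```

A single pass like this yields a set from which nothing more can be removed, which is not necessarily the smallest possible set. In practice each `recovers` call is a full test run, so the order in which capabilities are tried is a judgment call for the service owner.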

Multisystem knock-on effects

Wide coverage reveals cross-system details that were previously hard to understand. As a result, the benefits of the BellJar system multiply when we have many infrastructure service owners using it to explore their recovery requirements.

The recovery graph

BellJar allows teams to focus on the first-hop requirements their services demand for a successful recovery. In doing so, we intentionally do not expect each service owner to understand the full depth of Meta’s entire dependency graph. However, limiting service owners to first-hop dependencies means BellJar alone does not provide them with the full picture needed to find transitive dependency cycles in our stack.

Now that we’re onboarding a broad collection of services, we can begin to assemble a large, multi-system recovery graph from the individual puzzle pieces provided by each service owner’s efforts. We provide the same prompt to each service: “What does it take to bring your service online on an empty set of hardware?” By doing so, BellJar helps us generate the composable building blocks we need to tie together recovery requirements of services separated by organizational or time zone barriers. Security, networking, database, storage, and other foundational services express their portions of our recovery graph as code that lends itself to programmatic analysis. We’re finding that this recovery-specific graph does not match the runtime relationships we uncover through distributed tracing alone.
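
To show how first-hop requirements can be composed into a graph and checked for transitive cycles, here is a minimal sketch using Python's standard `graphlib` module (Python 3.9 or later). The service names and edges are invented, and the article does not say how Meta performs this analysis. The sketch only illustrates the general technique.

```python
from graphlib import CycleError, TopologicalSorter


def plan_recovery(first_hop):
    """Return (order, cycle): a bring-up order, or the cycle that prevents one.

    `first_hop` maps each service to the set of services it needs healthy
    before it can recover, as declared by that service's owner.
    """
    try:
        return list(TopologicalSorter(first_hop).static_order()), None
    except CycleError as err:
        # graphlib reports the offending cycle as the second exception argument.
        return None, list(err.args[1])


# Hypothetical first-hop declarations from three teams, with no cycle.
acyclic = {
    "scheduler": {"config", "ca"},
    "config": {"ca"},
    "ca": set(),
}
order, cycle = plan_recovery(acyclic)
print(order)

# The same services, but the certificate authority now needs the scheduler.
cyclic = {
    "scheduler": {"config"},
    "config": {"ca"},
    "ca": {"scheduler"},
}
blocked_order, blocked_cycle = plan_recovery(cyclic)
print(blocked_cycle)
```

No single owner in the second example declared a cycle. It only appears once the three first-hop declarations are joined, which is the reason for assembling the graph centrally.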

Tests as documentation

Service owners describe their recovery strategy (the runbook used to perform the service’s recovery) as the set of steps a human would need to invoke during disaster recovery. This means a test includes the shell commands an operator will invoke to bootstrap things across otherwise empty machines. Typically, these commands reach for powerful toolkits or automation controls.

Codifying a human-oriented recovery runbook inside the BellJar test framework may seem strange at first. But human intervention is typically the first step in addressing the disasters we’re planning for. By capturing this runbook for each system, whether it’s a single command or an exceptionally complex series of steps, we can automatically generate the human-friendly documentation operators typically have had to manually maintain on wikis or offline reference manuals.

Every time a service’s BellJar test passes, the corresponding recovery documentation gets regenerated in human-friendly HTML. This eliminates the staleness and toil we tend to associate with traditional runbooks.
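
Regenerating documentation from a codified runbook can be as simple as rendering the same list of commands the test executes. The following sketch assumes the runbook is available as a list of shell command strings. The `render_runbook` function, the service name, and the commands are hypothetical, and the real generator's output format is not described here.

```python
from html import escape


def render_runbook(service: str, steps: list) -> str:
    """Render runbook steps as an ordered HTML list, escaping shell metacharacters."""
    items = "\n".join(f"  <li><code>{escape(cmd)}</code></li>" for cmd in steps)
    return f"<h1>Recovery runbook: {escape(service)}</h1>\n<ol>\n{items}\n</ol>"


# Placeholder commands; none of these are real tools.
page = render_runbook(
    "example-config-distributor",
    [
        "emergency-fetch --package example-config-distributor",
        "emergency-container start --from <snapshot>",
    ],
)
print(page)
```

Because the page is derived from the steps that just passed, it cannot drift from what was actually tested.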

Federated recovery and healthy ownership

Consider a foundation composed of scores of infrastructure systems. It is not scalable to assume we can task a single dedicated team, even a large one, with broadly solving all our worst-case recovery needs. At Meta, we do have a number of teams working on cross-cutting reliability efforts, protecting us against RPC overload, motivating safe deployment in light of unexpected capacity loss, and equipping teams with powerful fault injection tools. We also have domain experts focused on multisystem recoveries of portions of our stack, such as intra-data center networking or container management.

However, we’d best avoid a model in which service owners feel they can offload the complexities of disaster recovery to an external team as “someone else’s problem.” The projects mentioned above achieve success not because they absolve service owners of this responsibility, but because they help service owners better solve specific aspects of disaster readiness.

BellJar takes this model a step further by federating service recovery validation out to service owners’ development pipelines. Rather than outsource to some separate team, the service owner designs their recovery strategy. They then test this strategy. And they receive the failing test signal when their strategy gets invalidated.

By moving recovery into the service owner’s release cycle, BellJar motivates developers to consider how their system can recover simply and reliably.

Common tooling

Until recently, service owners who have been especially interested in improving their disaster readiness have found themselves building a lot of bespoke recovery tooling. Without a standard approach that’s shared by many teams, the typical service owner discovers and patches gaps in recovery tooling on an ad hoc basis. Need a way to spin up a container in a pinch? A way to distribute failsafe routing information? Tools to retrieve binaries from safe storage? We’ve found teams reinventing similar tools in silos because of the relative difficulty of discovering common recovery needs across team boundaries.

By exposing recovery runbooks in a universal format that can be collected in a single place, BellJar is helping us easily identify the common barriers that teams need standard solutions for. The team building BellJar has consulted with dozens of service owners. As a result, we’ve helped produce standard solutions like:

- A common toolkit for constructing low-level containers in the absence of a healthy container orchestrator.

- Fleetwide support for emergency binary distribution that bypasses most of our common packaging infrastructure.

- Secure access control over emergency tooling that cooperates with service owners’ existing ACLs.

- Centralized collection and monitoring of the inputs (metadata and container specifications) needed for recovery tooling.

By building well-tested, shared solutions for these common problems, we can ensure that those tools get first-class treatment under a dedicated team’s ownership.

Common patterns across systems

Thanks to BellJar’s uniquely broad view of our infrastructure, we’ve also been able to distill some pretty interesting patterns that teams have had to grapple with when designing for recovery.

The Public Key Infrastructure foundation

Access controls and TLS define every action that both human operators and automated systems can and cannot take. Security remains as important during disaster recovery as it is in a steady state. This means that systems like certificate authorities are important to any recovery strategy. In an environment where every container requires an X.509 certificate from a trusted issuer, removing dependency cycles for containerized security services becomes particularly hard. Security systems demand early investment to unlock robust recovery strategies elsewhere in the stack.

DNS seeps into everything

Even when you use a custom service discovery system, DNS permeates tooling everywhere. Due to its ubiquity, engineers often forget DNS is a network-accessed system. Because it is so robust, they treat it more like a local resource akin to the filesystem. Learning to view it as the fallible, remote system it is requires rigor and constant recalibration. Strict testing frameworks really help with this, especially in an IPv6 environment, where 128-bit addresses aren’t easy to remember or write on a sticky note.

For select systems that power discovery, we’ve moved to relying on routing protocols to stabilize well-known IP addresses. Across our vast collection of tooling, we’re retrofitting much of it with the ability to accept IP addresses in a pinch.
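
One way a tool can "accept IP addresses in a pinch" is to check whether its endpoint argument is already an address literal and skip name resolution entirely when it is. The sketch below uses Python's standard `ipaddress` and `socket` modules. The `resolve_endpoint` function, the injectable `resolver` parameter, and the example hostname are assumptions made for illustration, and the addresses come from documentation-reserved ranges.

```python
import ipaddress
import socket


def resolve_endpoint(host: str, resolver=socket.getaddrinfo) -> str:
    """Return an IP address for `host`, consulting DNS only if it is not a literal."""
    try:
        # IP literals (IPv4 or IPv6) need no lookup, so DNS can be down.
        return str(ipaddress.ip_address(host))
    except ValueError:
        pass
    # Otherwise fall back to normal resolution; this is the DNS-dependent path.
    infos = resolver(host, None)
    return infos[0][4][0]


def dns_is_down(host, port):
    """Stand-in resolver that simulates a DNS outage."""
    raise OSError("simulated DNS outage")


def fake_dns(host, port):
    """Stand-in resolver returning one getaddrinfo-shaped result."""
    return [(socket.AF_INET6, socket.SOCK_STREAM, 0, "", ("2001:db8::2", 0, 0, 0))]


literal_v6 = resolve_endpoint("2001:db8::1", resolver=dns_is_down)
literal_v4 = resolve_endpoint("192.0.2.7", resolver=dns_is_down)
looked_up = resolve_endpoint("config.example", resolver=fake_dns)
print(literal_v6, literal_v4, looked_up)
```

Passing the resolver in as a parameter keeps the DNS dependency explicit, which also makes it straightforward to exercise the outage path in a test.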

Caching obscures RPCs

Years of work have made our systems very difficult to fully break, hardening them with caching and fallbacks that balance trade-offs in freshness vs. availability. While this is great for reliability, this gentle failover behavior makes it challenging to predict how worst-case failure modes will eventually manifest. The first time that service owners see what production would be like without any configuration or service discovery caching, for instance, often occurs in a BellJar environment. For this reason, the team that builds BellJar spends a lot of time ensuring that we’re actually breaking things inside our test environments in the worst way possible.

Even configuration needs configuration

At our scale, everything is configuration-as-code, including the feature knobs and emergency killswitches we deploy for every layer in the stack. We deploy RPC protocol library features and control security ACLs via configuration. Hardware package installations get defined as configuration. Even our configuration distribution systems behave according to configuration settings. The same applies to emergency CLI tooling and fleetwide daemons. When all this configuration is authored and distributed via a common system, it’s worth your time to design robust mechanisms for emergency delivery and for automatic fallback to prepackaged values. Additionally, you need to design a diverse set of contingency plans for whenever the configuration that powers your configuration system is broken.
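
Automatic fallback to prepackaged values can be sketched as follows. This is a simplified illustration, not Meta's configuration client: the `load_setting` function, the setting name, and its default value are hypothetical, and a real system would also need to decide how stale a packaged value is allowed to be.

```python
from typing import Any, Callable, Mapping, Tuple


def load_setting(
    key: str,
    fetch_remote: Callable[[str], Any],
    packaged_defaults: Mapping[str, Any],
) -> Tuple[Any, str]:
    """Prefer the live configuration system, falling back to values shipped with the binary."""
    try:
        return fetch_remote(key), "remote"
    except OSError:
        # Connection and timeout errors are OSError subclasses in Python 3.
        # If the key has no packaged default, the KeyError surfaces loudly.
        return packaged_defaults[key], "packaged"


def unreachable_config_service(key: str) -> Any:
    """Stand-in for a configuration backend during a total outage."""
    raise ConnectionError("configuration service unreachable")


value, source = load_setting(
    "rpc_timeout_ms",
    unreachable_config_service,
    {"rpc_timeout_ms": 500},
)
print(value, source)
```

Returning the source alongside the value lets callers log or alert when they are running on packaged defaults, so a silent fallback does not mask an outage.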

Libraries demand allowlist-style validation

At the pace we move, even low-level services lean on libraries that constantly change in ways no single engineering team can scrutinize line by line. Those libraries can be a vector for unexpected dependency graph creep. With enormous codebases and thousands of services, relying on traditional blocklist-style fault injection to understand how your system interacts with production can be a game of whack-a-mole. In other words, you don’t know what to test if you can’t even enumerate all the services in production.

We’ve found unwanted dependencies inserted into our lowest-level systems thanks to simple drop-in Python 2 to Python 3 upgrades, which have made recovery tooling dependent on esoteric services. Authorization checks have introduced hard dependencies between emergency utilities and our web front end. Ubiquitous logging and tracing libraries have found themselves unexpectedly promoted to SIGABRT-ing blockers to our container tooling. Thanks to allowlist-style tests like those provided by BellJar, we can automatically detect new dependencies on systems we didn’t even know existed.
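
The difference between blocklist-style and allowlist-style checks comes down to which side of a set difference you have to enumerate. The sketch below assumes we have some record of the endpoints a binary tried to contact during a test. How such a record is collected is outside this example, and all endpoint names are made up.

```python
def caught_by_blocklist(observed, blocklist):
    """Blocklist style: only dependencies we already thought to break are noticed."""
    return sorted(set(observed) & set(blocklist))


def caught_by_allowlist(observed, allowlist):
    """Allowlist style: anything outside the declared recovery conditions is flagged."""
    return sorted(set(observed) - set(allowlist))


# Hypothetical endpoints a recovery tool touched after a library upgrade.
observed = {"dns", "package_backend_readonly", "web_frontend", "esoteric_quota_service"}

# The team knew to test against a config outage, but had never heard of the others.
blocklist = {"config", "service_discovery"}
allowlist = {"dns", "package_backend_readonly"}

print(caught_by_blocklist(observed, blocklist))
print(caught_by_allowlist(observed, allowlist))
```

The blocklist check reports nothing here because nobody knew to list the new dependencies, while the allowlist check flags both of them without anyone having to know they exist.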

Asking new questions

We’re still being surprised by what our services can learn through BellJar’s tightly constrained testing environment. And we’re expanding the set of use cases for BellJar.

Now, beyond its initial function for verifying the recovery strategies for our services, we also use BellJar to zoom in on other important pieces of code that don’t look like containers or network services at all.

Common libraries like our internal RocksDB build and distributed tracing framework now use BellJar to assert that they can gracefully initialize and satisfy requests in environments where none of Meta’s upstream systems are online. Tests like these protect against dependency creep that could find these libraries blocking recoveries for scores of infra systems.

We’ve also expanded BellJar to vet the sidecar binaries we run on millions of machines to support basic data distribution, host management, debugging, and traffic shaping. With extremely restricted allowlists, we continually evaluate new daemon builds. Each version must demonstrate it can auto-recover from failure, even when the upstream control planes it typically listens to go dark.